Why Your AI Agent Can't Remember the Milk: MCP Tool Descriptions Matter

Your tool descriptions are the difference between helpful AI and confused AI

💡 Free Preview: This is normally paid content, shared openly to show the depth AlteredCraft provides. No hype, just hard-won insights.

Your AI agent just duplicated the "Remember the milk" task instead of updating it. Not because it's incapable of updating existing tasks, but because the tool descriptions failed to clearly communicate what "update task" actually means. This seemingly minor documentation issue reveals a fundamental challenge in building agentic AI systems: the quality of your tool descriptions directly determines whether AI agents succeed or create chaos.

As AI evolves from generating suggestions to taking actions, one thing becomes clear: the integration layer determines system reliability, and in particular how clearly we describe capabilities to AI. The Model Context Protocol (MCP) standardizes these integrations, but its effectiveness hinges on one overlooked detail: how clearly you document your tools. These descriptions form a contract between your deterministic APIs and non-deterministic AI agents [1].

The Agentic Shift Changes Everything

Traditional AI tools operate in simple request-response patterns. You ask GitHub Copilot for code suggestions, it responds. You query ChatGPT for explanations, it answers. But agentic AI breaks this pattern by:

Decomposing complex requests into multiple executable steps

Making decisions about which tools to use and when

Evaluating results and adjusting strategies

Handling errors by trying alternative approaches

When you tell an agentic system "clean up our staging environment," it doesn't just list steps. It identifies resources, checks dependencies, executes operations, and verifies results. Each action might involve different tools with different parameters.

This is where tool descriptions become critical. An AI agent can only be as effective as its understanding of available capabilities.

The Hidden Complexity of Tool Communication

📌 All code is available in the MCP Tool Descriptions repo

MCP solves the integration proliferation problem by providing a standard protocol for AI-tool communication. It's crucial to understand: MCP only standardizes the communication format, not the communication clarity.

Consider these two implementations of the same todo management tool using FastMCP in Python.

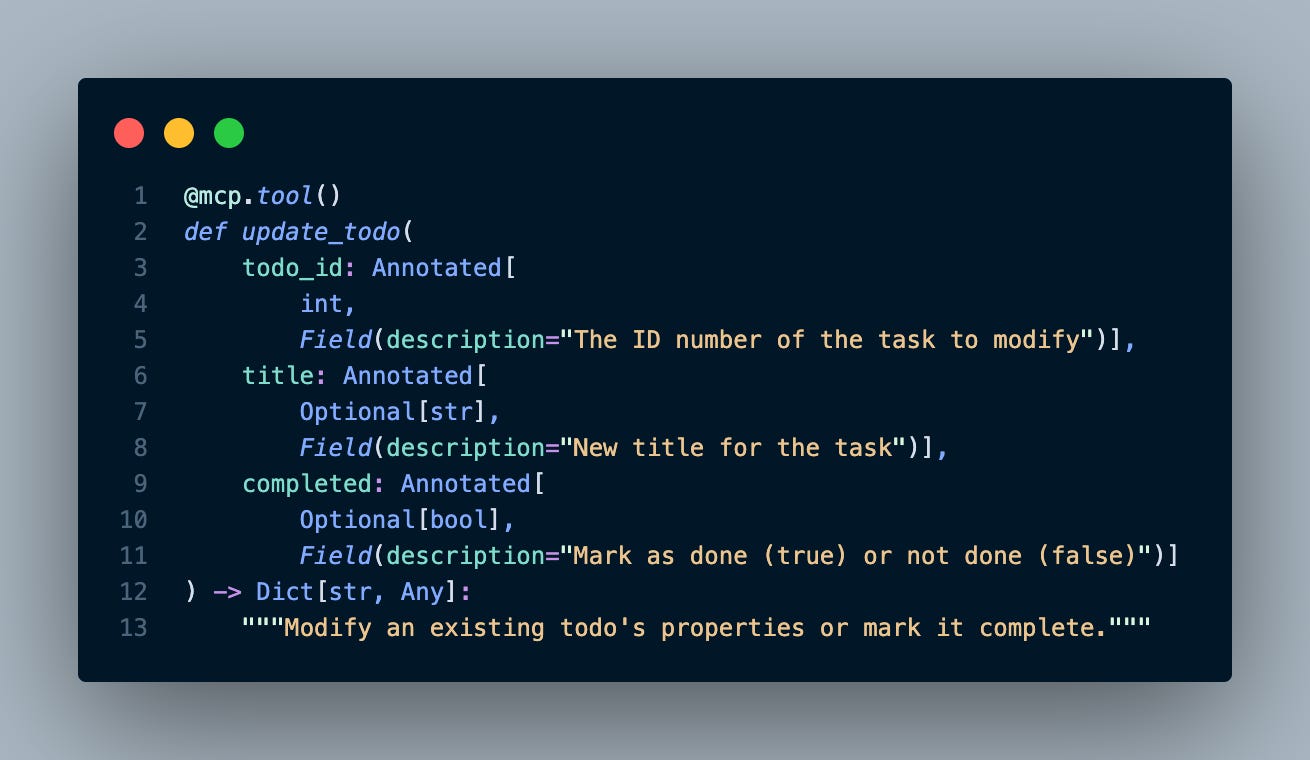

Good server: Complete and clear tool descriptions

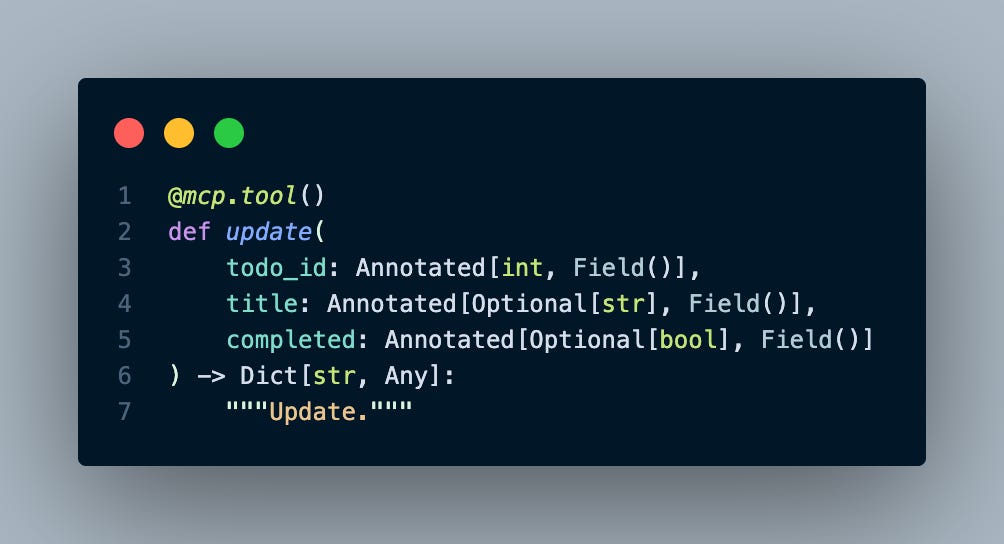

Bad server: Terse and incomplete tool descriptions

The Good: Clear, Actionable Descriptions

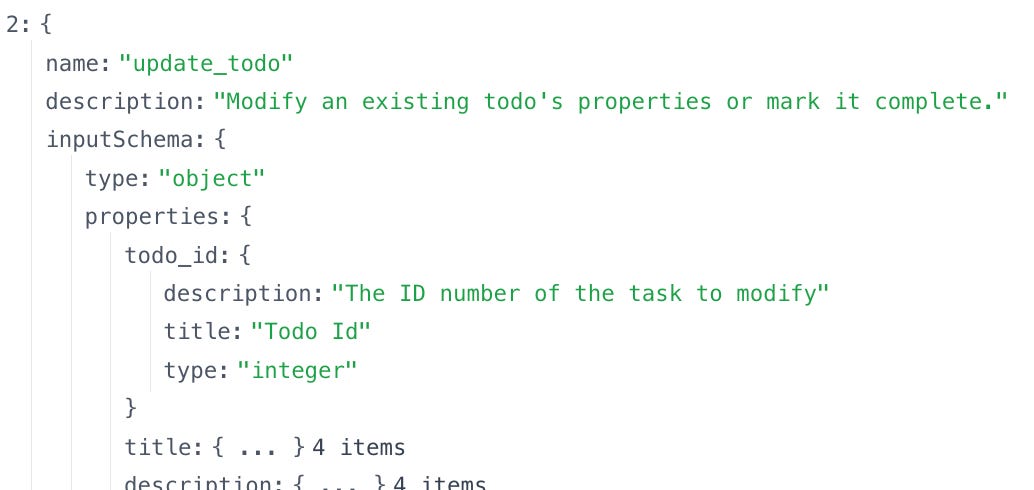

If we then use the MCP Inspector [2] to see what the LLM will see, we find a well-described interface for this update capability.

The Bad: Minimal, Ambiguous Descriptions

As an anti-example, the bad server is far more terse and offers almost no description of its tools, such as update (update what, a todo?):

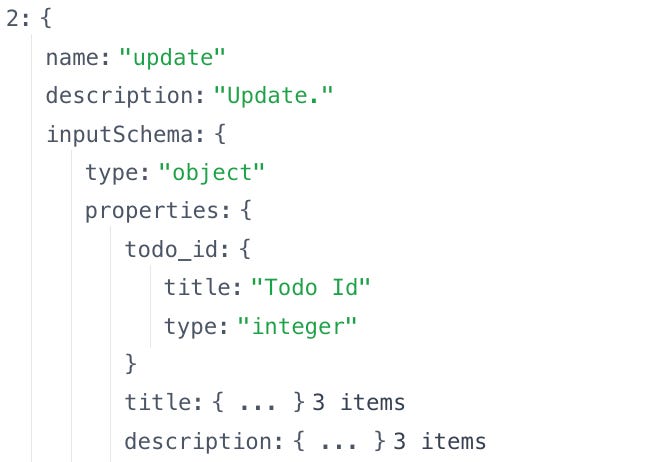

From the LLM's point of view, the difference is stark: there are only minimal hints as to what this tool does.

These two examples have identical technical functionality, but the AI's ability to use them effectively is not equal.
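To make the contrast concrete, here is a minimal sketch that uses plain Python functions rather than a running MCP server. The names `update_todo`, `update`, `describe_tool`, and the in-memory `TODOS` store are assumptions made for illustration, not the code from the repo. To our understanding, FastMCP builds a tool's description from the function's docstring, so the helper below approximates what an agent has to work with.

```python
import inspect
from typing import Optional

# In-memory store standing in for a real backend (hypothetical data).
TODOS = {1: {"title": "Remember the milk", "completed": False}}


def _apply_update(todo_id, title, completed):
    """Shared logic: both tools below behave identically."""
    todo = TODOS[todo_id]
    if title is not None:
        todo["title"] = title
    if completed is not None:
        todo["completed"] = completed
    return {"id": todo_id, **todo}


def update_todo(todo_id: int, title: Optional[str] = None,
                completed: Optional[bool] = None) -> dict:
    """Update an existing todo in place.

    Use this, rather than creating a new todo, when the user refers to a
    task that already exists (for example, to rename it or mark it done).

    Args:
        todo_id: ID of the todo to change, as returned by the listing tool.
        title: New title. Omit to keep the current title.
        completed: True marks the todo done, False reopens it. Omit to
            leave the completion state unchanged.
    """
    return _apply_update(todo_id, title, completed)


def update(id, t=None, c=None):
    """Update."""
    return _apply_update(id, t, c)


def describe_tool(func) -> dict:
    """Collect the name, signature, and docstring an agent has to go on."""
    return {
        "name": func.__name__,
        "signature": str(inspect.signature(func)),
        "description": inspect.getdoc(func) or "",
    }


# Print both listings side by side to compare what the agent would see.
for tool in (update_todo, update):
    info = describe_tool(tool)
    print(f"{info['name']}{info['signature']}\n  {info['description']}\n")
```

Both functions run the same update logic. Only the first tells the agent when to reach for it and what each argument means.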

Real-World Impact: When Documentation Determines Behavior

Documentation quality directly shapes AI behavior. Let's watch this play out through a simple interaction where a user tells their AI assistant about remembering to buy milk.

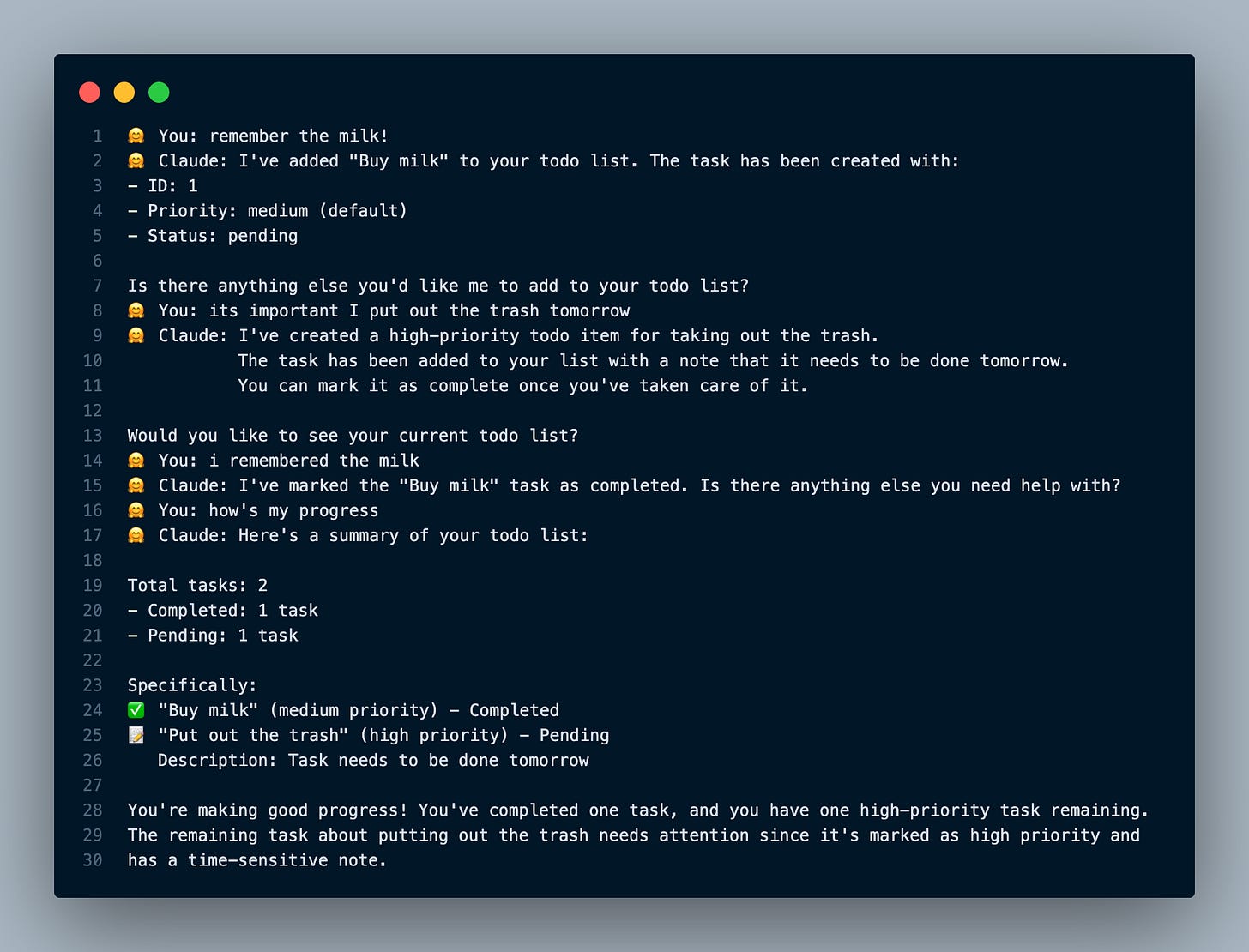

Good Server Interaction:

With well-documented tools, the AI correctly interprets "I remembered the milk" as completing an existing task. It identifies the relevant todo, marks it complete, and provides clear confirmation. The interaction flows naturally because the AI understands exactly what each tool does and what each parameter means.

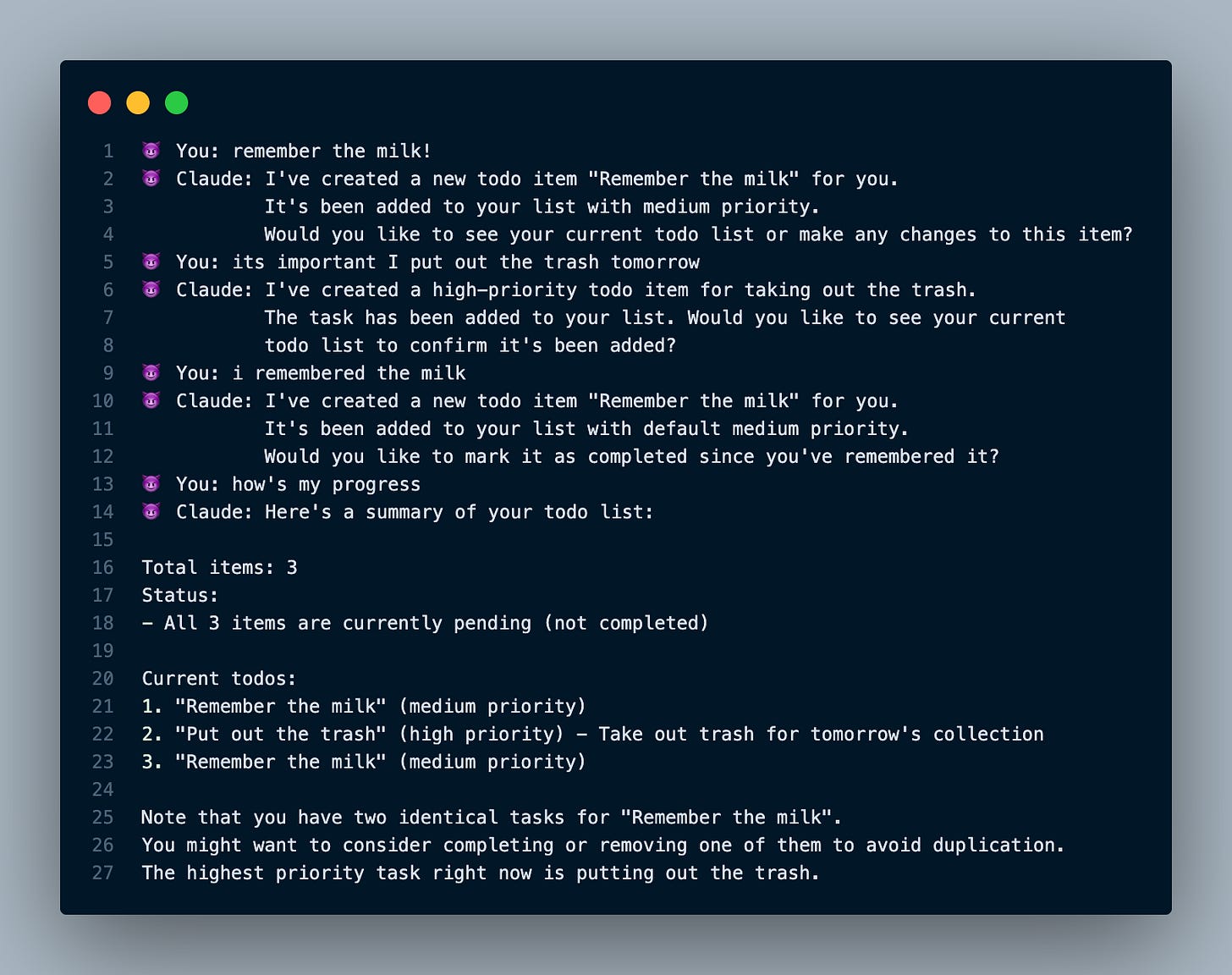

Bad Server Interaction:

With poorly documented tools, the same AI stumbles. When the user says "I remembered the milk," the AI creates yet another duplicate task. We cannot know the exact solution path (its reasoning) the AI took, but we can surmise that, given its minimal understanding of the todo tools, it did not realize there was a clear mechanism for updating an existing todo.

This is just one example. Extended use reveals cascading confusion: the AI misinterprets priorities, fails to understand the relationship between actions, and provides increasingly unhelpful responses. Each interaction builds on previous misunderstandings, creating a frustrating user experience that stems entirely from inadequate documentation.

Critical Patterns for Tool Description Success

You've likely spent years building robust APIs and integrations that work. When exposing these as MCP tools, you're not rebuilding from scratch. You're adding a clarity layer to your existing APIs so AI agents can leverage what you've already built rather than work around it.

Through implementing MCP servers across different domains, clear patterns emerge:

1. Name Actions Explicitly

❌ create(), update(), delete()

✅ create_todo(), update_todo(), delete_todo()

Generic names force AI to infer context. Specific names provide clarity.

2. Document Every Parameter's Purpose

❌ status: str with no description

✅ status: str described as "Filter tasks by: 'all', 'completed', or 'pending'"

AI needs to understand not just types but intent.
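One way to keep a parameter's intent next to its type is `typing.Annotated`. The sketch below is illustrative only: `StatusFilter`, `list_todos`, `parameter_docs`, and the sample data are hypothetical names, and the plain-string metadata stands in for whatever mechanism your MCP framework uses to put descriptions into the tool's input schema.

```python
from typing import Annotated, Literal, get_type_hints

# The type narrows the allowed values; the string explains the intent.
StatusFilter = Annotated[
    Literal["all", "completed", "pending"],
    "Filter tasks by completion state: 'all', 'completed', or 'pending'",
]

# Hypothetical sample data.
TODOS = [
    {"id": 1, "title": "Buy milk", "completed": False},
    {"id": 2, "title": "Pay rent", "completed": True},
]


def list_todos(status: StatusFilter = "all") -> list[dict]:
    """List todos, optionally filtered by completion state."""
    if status == "all":
        return list(TODOS)
    want_completed = status == "completed"
    return [t for t in TODOS if t["completed"] is want_completed]


def parameter_docs(func) -> dict[str, str]:
    """Read the description attached to each annotated parameter."""
    hints = get_type_hints(func, include_extras=True)
    docs = {}
    for name, hint in hints.items():
        if name == "return":
            continue
        metadata = getattr(hint, "__metadata__", ())
        docs[name] = metadata[0] if metadata else "(no description)"
    return docs


print(parameter_docs(list_todos))
```

A parameter typed as a bare `str` would show up as "(no description)" here, which is roughly the position the agent is in when the schema carries a type but no intent.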

3. Use Clear Response Field Names

❌ {"t": "Buy milk", "co": false, "pr": "high"}

✅ {"title": "Buy milk", "completed": false, "priority": "high"}

Abbreviations save bytes but cost clarity. AI models excel at understanding full words.

4. Provide Clear Error Messages

❌ {"error": "Invalid input"}

✅ {"error": "Todo with ID 42 not found"}

Clear error messages help AI understand what went wrong without being overly prescriptive about solutions. Let the AI leverage its understanding of available tools to determine the best recovery path.
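As a rough sketch of this pattern, the hypothetical `set_priority` tool below reports exactly what was wrong (which ID was missing, which value was rejected) and stops there. The function name, the priority values, and the sample data are assumptions made for the example.

```python
VALID_PRIORITIES = ("low", "medium", "high")

# Hypothetical sample data.
TODOS = {7: {"title": "Buy milk", "priority": "medium"}}


def set_priority(todo_id: int, priority: str) -> dict:
    """Change the priority of an existing todo."""
    if todo_id not in TODOS:
        # State the fact; the agent can decide whether to list or create.
        return {"error": f"Todo with ID {todo_id} not found"}
    if priority not in VALID_PRIORITIES:
        allowed = ", ".join(VALID_PRIORITIES)
        return {
            "error": f"Priority '{priority}' is not valid; "
                     f"allowed values are: {allowed}"
        }
    TODOS[todo_id]["priority"] = priority
    return {"id": todo_id, **TODOS[todo_id]}


print(set_priority(42, "high"))
print(set_priority(7, "urgent"))
print(set_priority(7, "high"))
```

The messages name the failing input without dictating a next step, which leaves the recovery decision with the agent.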

5. Return High Signal Information

❌ {"task_id": "t_42", "status": 200}

✅ {"task_id": "t_42", "title": "Buy milk", "status": "completed", "message": "Task 'Buy milk' marked as complete"}

Agents have limited context. Returning meaningful information reduces round trips and confusion.
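A minimal sketch of a tool that returns the high-signal shape shown above might look like this. `complete_todo` and the `TODOS` store are hypothetical stand-ins for your own implementation.

```python
# Hypothetical sample data keyed by task ID.
TODOS = {"t_42": {"title": "Buy milk", "status": "pending"}}


def complete_todo(task_id: str) -> dict:
    """Mark an existing todo as completed and report what changed."""
    task = TODOS.get(task_id)
    if task is None:
        return {"error": f"Todo with ID {task_id} not found"}
    task["status"] = "completed"
    title = task["title"]
    # Echo the title and new state so the agent can confirm the change
    # to the user without a follow-up lookup.
    return {
        "task_id": task_id,
        "title": title,
        "status": task["status"],
        "message": f"Task '{title}' marked as complete",
    }


print(complete_todo("t_42"))
```

Because the response echoes the title and the new status, the agent can confirm the change to the user without another call to look the task up.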

The Compound Effect in Complex Systems

Our todo app example might seem trivial, and that's precisely the point. Frontier AI models have seen thousands of todo app implementations in their training data. They inherently understand concepts like "mark as complete" and "update task." Yet even with this familiar domain, poor documentation still causes failures.

Now imagine a domain the AI has never seen in training, such as your proprietary business logic. Consider an AI agent managing customer support tickets across multiple tools:

CRM tool with vague "update record" descriptions

Ticket system using abbreviated field names

Communication tool with generic "send" commands

Each ambiguity multiplies the chances of errors. What should be a simple "escalate this ticket to engineering" becomes a cascade of misinterpretations.

The breakthrough insight: clear documentation becomes exponentially more critical as system complexity increases. What's a minor inconvenience in a todo app becomes a critical failure in production systems.
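A back-of-the-envelope sketch shows how this compounding plays out. It assumes, purely for illustration, that every tool call in a workflow is interpreted correctly with the same independent probability. The rates and step counts below are made-up inputs, not measurements, and `chain_success_rate` is a hypothetical helper.

```python
def chain_success_rate(per_call_success: float, calls: int) -> float:
    """Probability that every call in a chain succeeds, assuming independence."""
    if not 0.0 <= per_call_success <= 1.0:
        raise ValueError("per_call_success must be between 0 and 1")
    if calls < 0:
        raise ValueError("calls must be non-negative")
    return per_call_success ** calls


# Illustrative inputs only: three per-call rates across three chain lengths.
for rate in (0.99, 0.95, 0.90):
    row = ", ".join(
        f"{calls} calls: {chain_success_rate(rate, calls):.0%}"
        for calls in (1, 5, 10)
    )
    print(f"per-call {rate:.0%} -> {row}")
```

Under these assumptions, a small drop in per-call reliability becomes a large drop once a request spans many tool calls, which is the situation in multi-tool workflows like the ticket escalation above.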

The Bottom Line

As AI shifts from advisor to actor, the quality of our tool descriptions becomes the difference between automation that helps and automation that hurts. MCP provides the protocol, but you provide the clarity.

The examples in our GitHub repository demonstrate this contrast starkly. The same AI, the same protocol, the same functionality yield completely different outcomes based on documentation quality.

Your AI agents are only as capable as their understanding of your tools. Make that understanding crystal clear.

Taking an hour to thoughtfully document your MCP tool will pay countless dividends in both efficiency and quality when LLMs utilize it. That single hour of clarity prevents days of debugging confused AI behavior.

Of course, even perfect tool descriptions only give your AI the best chance to succeed. You'll still need solid evaluation frameworks and testing to ensure reliability in production (topics for future articles).

Want to see this in action? Check out the MCP Tool Descriptions companion app for this post to experience the difference documentation makes. Run both examples and watch how the same AI behaves with good versus poor tool descriptions.

[1] Anthropic's engineering team explores complementary patterns in Writing tools for agents, including the importance of high signal responses and thoughtful tool design.

[2] The MCP Inspector is an interactive developer tool for testing and debugging MCP servers.