Building for the Agentic Era: How MCP is Redefining AI Integration

How the open protocol enabling agentic AI is transforming system integration

As AI evolves from answering questions to taking actions, we're hitting a wall. Every team wants AI that doesn't just suggest code but deploys it, doesn't just identify issues but fixes them, doesn't just plan workflows but executes them. Yet we cannot build these integrations like it's 2010: custom endpoints, versioned APIs, and client libraries that often break with API updates.

💡 Free Preview: This is normally paid content, shared openly to show the depth AlteredCraft provides. No hype, just hard-won insights.

The Model Context Protocol (MCP) offers a fundamentally different approach. What makes MCP particularly valuable is how it naturally shifts developers from implementation details to capability design. Rather than asking "How do I connect this AI to this database?", MCP encourages "What capabilities should I expose?" It's similar to the mental shift that REST brought to web services, but designed for AI systems that need to discover, reason about, and compose capabilities autonomously. For developers, this represents the opportunity to define how AI systems will act within business logic for years to come.

This post explores MCP through the lens of real integration challenges, showing how it transforms the way we build AI-enabled systems. We'll examine the protocol's architecture, understand why it matters now, and walk through practical examples from our working implementation on GitHub.

Understanding Agentic AI

Before diving into MCP's architecture, let's clarify what makes AI "agentic." This distinction drives everything about MCP's design.

Traditional AI tools such as chat interfaces work in a request-response pattern: you ask, they respond. Powerful, but limited to single interactions.

Agentic AI breaks this pattern. These systems:

Decompose complex requests into executable steps

Make multiple API calls to achieve a goal

Evaluate results and adjust strategies

Handle errors by trying alternative approaches

When you ask an agentic system to "clean up our staging environment," it doesn't just list the steps. It identifies unused resources, checks dependencies, executes cleanup operations, verifies the results, and reports back. Each step might involve different services, different permissions, different error conditions.
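The loop those four behaviors describe can be sketched in a few lines. This is a hypothetical illustration, not MCP code: `StagingEnv`, its resources, and its `find_unused`, `has_dependents`, and `delete` helpers stand in for real infrastructure APIs. In a real agent, the model would choose each step rather than hardcoded Python.

```python
from dataclasses import dataclass, field


@dataclass
class StagingEnv:
    """Hypothetical staging environment: resource name -> names it depends on."""
    resources: dict[str, set[str]]
    in_use: set[str]
    locked: set[str] = field(default_factory=set)  # deletes fail on these

    def find_unused(self) -> list[str]:
        return sorted(r for r in self.resources if r not in self.in_use)

    def has_dependents(self, name: str) -> bool:
        return any(name in deps for deps in self.resources.values())

    def delete(self, name: str) -> None:
        if name in self.locked:
            raise PermissionError(f"{name} is locked")
        del self.resources[name]


def clean_up_staging(env: StagingEnv) -> list[str]:
    report = []
    # Decompose the request: one step per unused resource.
    for name in env.find_unused():
        # Evaluate before acting: skip anything another resource still uses.
        if env.has_dependents(name):
            report.append(f"skipped {name}: still referenced")
            continue
        try:
            env.delete(name)
            report.append(f"deleted {name}")
        except PermissionError as err:
            # Handle the error with an alternative instead of aborting the job.
            report.append(f"flagged {name} for review: {err}")
    # Verify the outcome and report back.
    report.append(f"verified: unused resources remaining = {env.find_unused()}")
    return report
```

The error path records a fallback (flagging for review) instead of failing the whole job. This is the kind of judgment an agentic system makes between steps.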

This is why traditional integrations fail. You can't predefine every possible workflow an AI might need. MCP solves this by providing an open standard for making capabilities discoverable and composable at runtime.

The Integration Challenge and MCP's Solution

Consider a common scenario: Your company wants to integrate AI into customer support, sales workflows, and product analytics. The traditional approach means building custom APIs for each system, creating bespoke integrations, and maintaining a web of AI-specific endpoints that become technical debt over time.

MCP offers a different approach. By building standardized interfaces, you let any MCP-compatible AI system discover and use your services. When the company switches AI platforms, these integrations keep working. When AI capabilities evolve from simple queries to complex agentic workflows, the same interfaces support them. When executives ask "How quickly can we add AI to X system?", the answer shrinks to "as soon as X has an MCP server."

What MCP Actually Is

MCP stands for Model Context Protocol. At its core, MCP is an infrastructure layer (a protocol) that enables AI systems to discover and interact with external services in a standardized way.

The analogy to REST APIs is helpful: before REST, every system had its own HTTP integration approach. REST provided a standard way for web applications to communicate. MCP does the same thing for AI integrations, creating a common protocol for AI systems to discover and use external capabilities.

But unlike REST, which was designed for request-response patterns between deterministic clients and servers, MCP is built for the agentic era. Here's the crucial difference: with REST, your client is code that does exactly what it's programmed to do. With MCP, your "client" is an AI that interprets, reasons, and makes creative decisions.

You're not exposing endpoints to machines—you're exposing capabilities to an intelligence.

This fundamental shift explains why MCP works differently. AI systems need to discover, reason about, and compose multiple capabilities to achieve goals. They need rich descriptions to understand intent, not just parameter types. They need to handle ambiguity and make judgment calls about which tools to use when.

The Three Core Components

The MCP specification defines more mechanisms than these[1], but here we focus on the three core primitives an MCP server uses to expose its capabilities:

1. Tools - Functions AI can execute (like "search_customer_records" or "deploy_to_staging")

Implementation focus: You design the actions that AI can perform

2. Resources - Data AI can access (like "product_catalog" or "compliance_policies")

Implementation focus: You control what information AI has access to

3. Prompts - Conversation templates that guide AI behavior

Implementation focus: You shape how AI interacts with users and systems

Here's the crucial insight: The complexity lives in your domain logic, not in the integration layer. MCP handles discovery, typing, and communication standards. You focus on what your system should do, not how AI connects to it.

This separation powers the real transformation: AI that discovers and combines your tools in ways you never explicitly programmed. Today that means set operations on todos; tomorrow it could mean orchestrating your entire operational workflow.
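The split between the three primitives can be illustrated with a toy in-memory server. `ToyServer`, its decorators, the `todo://policies` URI, and the `weekly_review` prompt are all hypothetical. The sketch only loosely mirrors FastMCP's decorator style and is not the MCP SDK. It shows tools as callable actions, resources as readable data addressed by URI, and prompts as reusable message templates.

```python
from typing import Any, Callable


class ToyServer:
    """Hypothetical stand-in for an MCP server's three capability registries."""

    def __init__(self) -> None:
        self.tools: dict[str, Callable[..., Any]] = {}
        self.resources: dict[str, Callable[[], str]] = {}
        self.prompts: dict[str, Callable[..., str]] = {}

    def tool(self, fn):
        # Tools: actions the AI may execute.
        self.tools[fn.__name__] = fn
        return fn

    def resource(self, uri: str):
        # Resources: data the AI may read, addressed by a URI.
        def register(fn):
            self.resources[uri] = fn
            return fn
        return register

    def prompt(self, fn):
        # Prompts: templates that shape how the AI approaches a task.
        self.prompts[fn.__name__] = fn
        return fn


server = ToyServer()
todos: list[dict[str, Any]] = []


@server.tool
def create_todo(title: str, priority: str = "medium") -> dict[str, Any]:
    """Add a new task to the todo list."""
    todo = {"id": len(todos) + 1, "title": title, "priority": priority}
    todos.append(todo)
    return todo


@server.resource("todo://policies")
def policies() -> str:
    return "High-priority tasks must have a due date."


@server.prompt
def weekly_review(owner: str) -> str:
    return f"Review {owner}'s open todos and suggest which to close."
```

In a real server, clients do not read these registries directly. They discover them through the protocol's list requests.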

The Architectural Perspective

Once you understand MCP's core components (tools, resources, and prompts), the next step is understanding how these pieces work together in practice. This is where MCP's architectural benefits become clear and where developers start engaging with system design rather than just feature implementation.

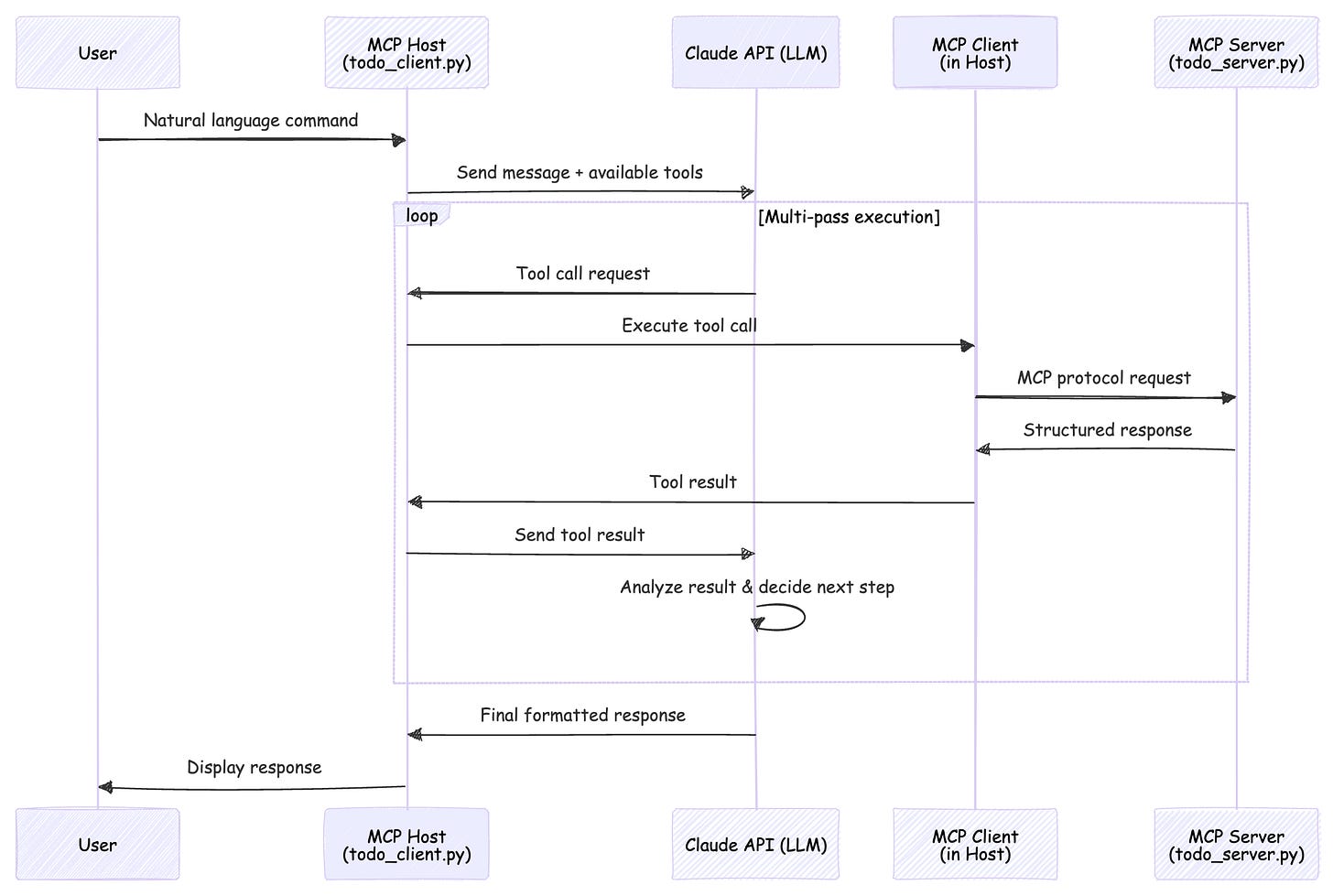

Figure 2: MCP enables a multi-pass execution pattern in which the AI can make multiple tool calls to complete a complex request. The host application orchestrates the conversation. In response to the user's question, Claude (or any LLM) decides which tools to use, and the host app makes those calls to the MCP server and reports the results back to the model. This separation lets the AI reason about results between calls, enabling workflows like "delete all completed todos," which requires first listing the todos, then analyzing them, and then selectively deleting items.
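The loop in the figure can be sketched in a few lines. This is a hypothetical illustration: `scripted_model` replays a fixed plan instead of calling a real LLM, the message format is simplified, and the in-memory `TOOLS` dict stands in for an MCP server. What it shows is the shape of the host's job. The host sends the conversation to the model, executes any tool call the model requests, appends the result, and repeats until the model answers in plain text.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory todos standing in for an MCP server's data.
TODOS: dict[int, dict[str, Any]] = {
    1: {"title": "Write docs", "done": True},
    2: {"title": "Ship v2", "done": False},
    3: {"title": "Fix CI", "done": True},
}


def list_todos() -> list[dict[str, Any]]:
    return [{"id": i, **t} for i, t in TODOS.items()]


def delete_todo(todo_id: int) -> dict[str, Any]:
    del TODOS[todo_id]
    return {"deleted": todo_id}


TOOLS: dict[str, Callable[..., Any]] = {"list_todos": list_todos, "delete_todo": delete_todo}


def scripted_model(messages: list[dict[str, Any]]) -> dict[str, Any]:
    """Stand-in for an LLM: chooses the next step from the conversation so far."""
    results = [m for m in messages if m["role"] == "tool"]
    if not results:
        return {"tool": "list_todos", "args": {}}  # first pass: look before acting
    listing = json.loads(results[0]["content"])
    done_ids = [t["id"] for t in listing if t["done"]]
    deleted_so_far = len(results) - 1
    if deleted_so_far < len(done_ids):
        return {"tool": "delete_todo", "args": {"todo_id": done_ids[deleted_so_far]}}
    return {"text": f"Deleted {len(done_ids)} completed todos."}


def run_host(user_message: str, model=scripted_model, max_steps: int = 10) -> str:
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        step = model(messages)
        if "text" in step:  # no tool requested: this is the final answer
            return step["text"]
        # The host, not the model, executes the requested tool call...
        result = TOOLS[step["tool"]](**step["args"])
        # ...and appends the result so the model can reason about it next pass.
        messages.append({"role": "tool", "name": step["tool"], "content": json.dumps(result)})
    raise RuntimeError("model did not finish within max_steps")
```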

This architecture naturally leads to thinking about larger system design questions:

Discoverability: How do AI systems find and understand available capabilities?

Composability: How do simple tools combine into powerful workflows?

State Management: How does AI maintain context across multiple tool calls?

Error Recovery: How does the system handle failures gracefully?

The multi-pass pattern shown above reveals why these questions matter. Each tool call builds on previous results, creating emergent capabilities far beyond what any single tool provides. This is what transforms a simple chatbot into an agentic system. The AI doesn't just respond to commands; it analyzes situations, makes decisions, and executes multi-step plans to achieve goals. The quality of your tool descriptions and error responses directly determines whether AI agents succeed or fail at these complex compositions.

Bad tool descriptions can send agents down completely wrong paths, so each tool needs a distinct purpose and a clear description.

— Anthropic Engineering, "How We Built a Multi-Agent Research System"

When you design MCP tools, you're creating building blocks that AI will compose in ways you haven't imagined. The gap between a vague tool described as "process data" and specific tools like validate_customer_email_format or check_domain_deliverability can be the difference between an AI agent that solves problems and one that creates them.
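One way to act on this is a small lint pass over your tool definitions before any agent sees them. The sketch below is hypothetical. The tool dicts mirror the name/description/inputSchema shape of MCP tool listings, but the checks and the 40-character threshold are arbitrary heuristics chosen for illustration, not part of MCP.

```python
from typing import Any

MIN_DESCRIPTION_CHARS = 40  # arbitrary heuristic threshold


def audit_tools(tools: list[dict[str, Any]]) -> list[str]:
    """Flag tool definitions an agent is likely to misuse."""
    problems = []
    seen: dict[str, str] = {}  # description -> first tool that used it
    for tool in tools:
        name = tool["name"]
        desc = tool.get("description", "").strip()
        if len(desc) < MIN_DESCRIPTION_CHARS:
            problems.append(f"{name}: description too vague ({desc!r})")
        if desc in seen:
            problems.append(f"{name}: same description as {seen[desc]}")
        seen.setdefault(desc, name)
        # Every parameter should say what it means, not just its type.
        props = tool.get("inputSchema", {}).get("properties", {})
        for param, schema in props.items():
            if not schema.get("description"):
                problems.append(f"{name}.{param}: missing parameter description")
    return problems


vague = {"name": "process_data", "description": "Process data",
         "inputSchema": {"type": "object", "properties": {"data": {"type": "string"}}}}
specific = {
    "name": "validate_customer_email_format",
    "description": "Check that a customer email address is syntactically valid; "
                   "does not contact the mail server.",
    "inputSchema": {"type": "object", "properties": {
        "email": {"type": "string", "description": "Address to validate, e.g. ada@example.com"}}},
}
```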

If your MCP system isn't performing as expected, step zero is to evaluate your tool descriptions and error responses.
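Error responses matter because the model reads them to decide its next step. The MCP specification distinguishes protocol-level errors (JSON-RPC errors, such as an unknown tool) from tool execution errors. Tool execution errors are returned as a normal result with `isError` set to true, so the model can see them and adjust. Below is a minimal sketch with a hypothetical `update_todo` tool; the error wording is chosen for illustration. Each failure tells the model what went wrong and how to recover, where an opaque "Error 400" would give it nothing to reason about.

```python
from typing import Any

# Hypothetical in-memory store for the example.
TODOS = {1: {"title": "Write docs", "priority": "high"}}
VALID_PRIORITIES = ("low", "medium", "high")


def text_result(text: str, is_error: bool = False) -> dict[str, Any]:
    # Shape of an MCP tool result: a list of content blocks plus an error flag.
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def update_todo(todo_id: int, priority: str) -> dict[str, Any]:
    if todo_id not in TODOS:
        # Actionable: say what went wrong and which tool helps recover.
        return text_result(
            f"No todo with id {todo_id}. Call list_todos to see valid ids "
            f"(currently {sorted(TODOS)}).", is_error=True)
    if priority not in VALID_PRIORITIES:
        return text_result(
            f"Invalid priority {priority!r}; use one of {', '.join(VALID_PRIORITIES)}.",
            is_error=True)
    TODOS[todo_id]["priority"] = priority
    return text_result(f"Todo {todo_id} priority set to {priority}.")
```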

This shift from prescriptive workflows to composable capabilities, with crystal-clear intent, represents the fundamental change in how we architect AI-integrated systems.

Why This Matters Right Now

The Market Reality: AI Integration is Becoming Core Infrastructure

Here's what's happening in companies right now: Every team, from customer support to development, needs AI that doesn't just answer questions but takes action. Support agents that resolve tickets end-to-end. Sales AI that manages entire deal cycles. Development assistants that deploy code, not just review it. The risk is clear: custom integrations for each AI platform create technical debt that compounds with every API update.

This shift from AI that assists to AI that acts is happening across every industry. The developers who understand MCP are positioning themselves as the architects of these agentic systems, not just integration specialists.

Industry Adoption is Accelerating

MCP is rapidly becoming the standard for AI-system integration. Anthropic built MCP support directly into Claude Desktop, enabling agentic workflows out of the box. Major IDEs are adding MCP client capabilities for AI-powered development automation, while enterprise AI platforms are standardizing on MCP for third-party integrations. The official MCP servers repository already includes reference implementations for PostgreSQL, GitHub, Slack, Google Drive, and dozens of other services[2].

This isn't experimental technology; it's becoming the standard way AI agents interact with enterprise systems.

Understanding MCP Through a Practical Example

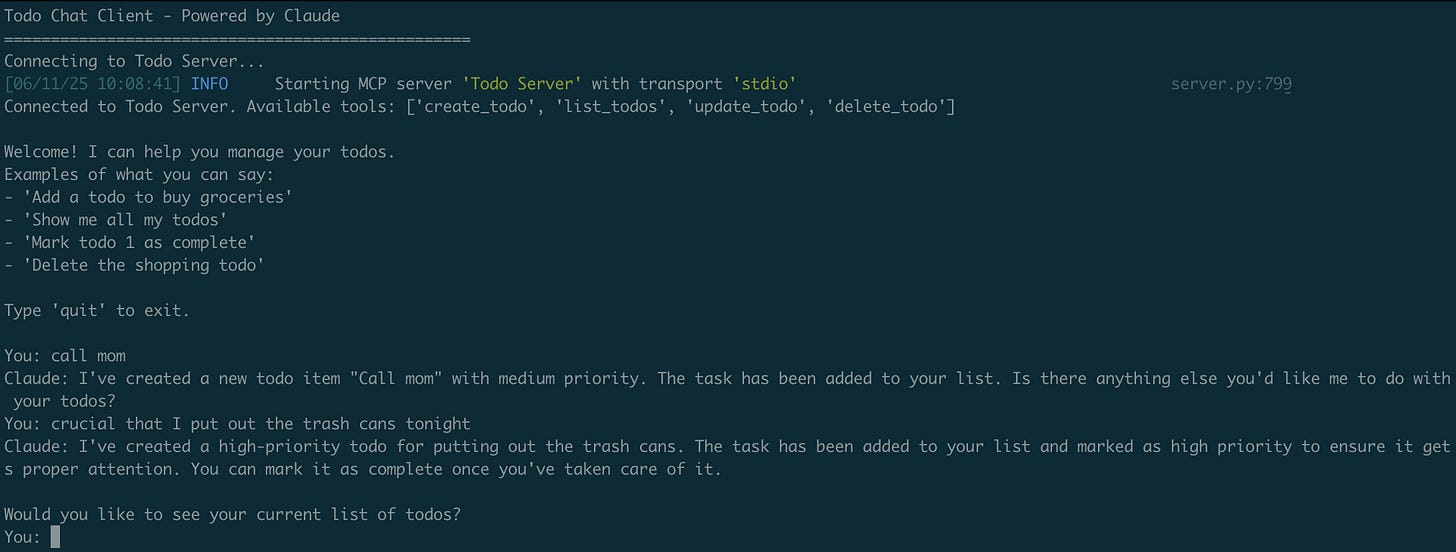

To see how MCP transforms the integration landscape we've been discussing, let's examine a deliberately simple example: a todo management system.

While basic, this example demonstrates the architectural patterns that scale to enterprise complexity. We're exploring MCP's integration benefits, not building a production application.

The Shift in Integration Thinking

Consider how you'd traditionally expose todo functionality to an AI system versus the MCP approach:

Traditional Integration Pipeline:

You design custom REST endpoints, write extensive API documentation, build client libraries for each AI platform, handle versioning and breaking changes, and maintain separate integration code for every AI service that needs access.

MCP's Capability-First Approach:

You define your capabilities as discoverable tools with their documentation built right into the server code. Write descriptions, parameter types, and usage examples once. The protocol then delivers this information to every AI client at runtime. Any MCP-compatible AI can immediately use your service with full understanding of each capability. You focus purely on what your service does, not on how AI connects to it or how to distribute documentation.

The difference becomes clear in the code. Here's how an AI client discovers your capabilities without any hardcoded endpoints:

```python
# How an MCP client discovers capabilities at runtime.
# self.session is an MCP client session already connected to the todo server.
async def connect(self):
    """MCP connection demonstrates zero-configuration discovery"""
    await self.session.initialize()

    # Ask the server what it can do (tools, parameters, descriptions)
    response = await self.session.list_tools()
    self.available_tools = response.tools

    # No hardcoded endpoints, no API documentation needed:
    # the client now knows about create_todo, update_todo,
    # delete_todo, and list_todos
```

Notice what's happening here: the AI client simply asks "what tools do you have?" and receives a complete list of capabilities. No hardcoded endpoints. No manual API integration. Just by connecting to the server, the AI knows it can create, update, delete, and list todos, complete with parameter types and descriptions. Because this discovery happens at runtime, capabilities you add to your server become available to clients the next time they list tools, with no client code changes.
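Under the hood, `list_tools()` and later tool invocations travel as JSON-RPC 2.0 messages, sent after the initialize handshake. The sketch below builds the two requests a client sends (`tools/list` and `tools/call`) using only the standard library. The ids and argument values are made up, and a real client would send these over stdio or HTTP through an SDK rather than by hand.

```python
import json
from itertools import count
from typing import Any, Optional

_ids = count(1)  # JSON-RPC request ids, unique per session


def request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request as an MCP client would send it."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


# Discovery: ask the server which tools it offers.
list_req = request("tools/list")

# Invocation: call one of the discovered tools by name with JSON arguments.
call_req = request("tools/call", {
    "name": "create_todo",
    "arguments": {"title": "Review MCP docs", "priority": "high"},
})


def tool_names(list_response: str) -> list[str]:
    """Extract tool names from a tools/list response."""
    return [t["name"] for t in json.loads(list_response)["result"]["tools"]]
```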

This isn't just a technical difference. It's the architectural shift from building integrations to designing capabilities.

MCP Integration Patterns in Practice

The complete example on GitHub demonstrates MCP's core patterns in action. Let's see how three of them (discoverability, composability, and evolution without breaking changes) play out in practice:

Discoverability in Action

Our todo server uses FastMCP, a Python library that eliminates MCP boilerplate and provides decorator-based tool definitions. Here's the actual implementation:

```python
from typing import Annotated, Any, Dict

from pydantic import Field

# `mcp` (the FastMCP server instance) and `todo_store` are defined
# elsewhere in the example project.

@mcp.tool()
def create_todo(
    title: Annotated[str, Field(description="Brief description of the task to be done")],
    description: Annotated[str, Field(
        default="",
        description="Additional details or context about the task"
    )],
    priority: Annotated[str, Field(
        default="medium",
        description="Task importance: 'low', 'medium', or 'high'",
        pattern="^(low|medium|high)$"
    )]
) -> Dict[str, Any]:
    """Add a new task to the todo list with specified details."""
    todo_id = todo_store.get_next_id()
    # ... implementation details
```

Notice how FastMCP lets us use Python's type annotations and field descriptions to create self-documenting code. The @mcp.tool() decorator transforms this function into a discoverable capability. But here's where it gets interesting: this Python code automatically generates a rich JSON schema that AI clients receive (abridged here):

```json
{
  "name": "create_todo",
  "description": "Add a new task to the todo list with specified details.",
  "inputSchema": {
    "type": "object",
    "properties": {
      "title": {
        "description": "Brief description of the task to be done",
        "type": "string"
      },
      "priority": {
        "default": "medium",
        "description": "Task importance: 'low', 'medium', or 'high'",
        "pattern": "^(low|medium|high)$",
        "type": "string"
      }
    },
    "required": ["title"]
  }
}
```

This transformation is crucial. Your Python docstring becomes the tool's description. Your type annotations become the schema. Your field descriptions become inline documentation. The AI receives all of this context at runtime, understanding not just what parameters to pass, but their purpose and constraints.

This is why that earlier point matters so much: you're not just exposing functions to code; you're communicating capabilities to an intelligence. The AI reads these descriptions and makes decisions about when and how to use each tool.
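To see why no separate documentation step is needed, here is a deliberately simplified sketch of the idea. It walks a function's signature, reads the description attached to each `Annotated` parameter, and emits a JSON schema. This is not how FastMCP is implemented; FastMCP relies on Pydantic, which handles far more types and constraints. The `Desc` marker class and the `tool_schema` function are hypothetical, and only four scalar types are mapped.

```python
import inspect
from dataclasses import dataclass
from typing import Annotated, Any, get_type_hints

JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


@dataclass(frozen=True)
class Desc:
    """Hypothetical metadata marker, standing in for pydantic.Field."""
    text: str


def tool_schema(fn) -> dict[str, Any]:
    hints = get_type_hints(fn, include_extras=True)  # keep Annotated metadata
    properties: dict[str, Any] = {}
    required = []
    for name, param in inspect.signature(fn).parameters.items():
        hint = hints[name]
        base = getattr(hint, "__origin__", hint)  # Annotated[str, ...] -> str
        prop: dict[str, Any] = {"type": JSON_TYPES[base]}
        for meta in getattr(hint, "__metadata__", ()):
            if isinstance(meta, Desc):
                prop["description"] = meta.text
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default: the caller must supply it
        else:
            prop["default"] = param.default
        properties[name] = prop
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn),  # the docstring becomes the description
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def create_todo(
    title: Annotated[str, Desc("Brief description of the task to be done")],
    priority: Annotated[str, Desc("Task importance: 'low', 'medium', or 'high'")] = "medium",
) -> dict:
    """Add a new task to the todo list with specified details."""
    return {"title": title, "priority": priority}
```

Calling `tool_schema(create_todo)` yields the same name, description, and required list as the schema above, minus the `pattern` constraint that Pydantic would add.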

Natural Composability

Watch how the AI combines simple tools to achieve a complex goal in this chat interaction:

You: combine all my high priority tasks into a single high priority task

Todo-bot: I've completed the combination of your high priority tasks:

1. Created a new high priority task that combines both previous tasks

2. Added descriptions to maintain the context of the original tasks

3. Deleted the original individual tasks

You now have a single high priority task that includes both activities.

Is there anything else you'd like me to do with your todos?

The AI automatically orchestrated multiple tool calls: listing todos, analyzing priorities, creating a new combined task, and deleting the originals. No explicit "combine_todos" tool was needed. This same pattern scales to complex enterprise processes where AI might coordinate across CRM, project management, and communication tools.

This is MCP's superpower: it transforms individual tools into an agentic toolkit. The protocol doesn't just connect AI to your systems; it enables AI to act intelligently within them.
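Spelled out as explicit calls, the plan the model assembled looks roughly like the sketch below. The in-memory store, its sample todos, and the three tool functions are hypothetical stand-ins for the tutorial's server. The combined behavior comes entirely from sequencing primitives that already exist.

```python
from typing import Any

# Hypothetical store standing in for the todo server.
todos: dict[int, dict[str, Any]] = {
    1: {"title": "Draft Q3 plan", "description": "", "priority": "high"},
    2: {"title": "Book venue", "description": "Offsite in May", "priority": "high"},
    3: {"title": "Clean inbox", "description": "", "priority": "low"},
}
calls: list[str] = []  # records the tool sequence, like a host's call log


def list_todos() -> list[dict[str, Any]]:
    calls.append("list_todos")
    return [{"id": i, **t} for i, t in todos.items()]


def create_todo(title: str, description: str = "", priority: str = "medium") -> int:
    calls.append("create_todo")
    new_id = max(todos, default=0) + 1
    todos[new_id] = {"title": title, "description": description, "priority": priority}
    return new_id


def delete_todo(todo_id: int) -> None:
    calls.append("delete_todo")
    del todos[todo_id]


# Plan for "combine all my high priority tasks into a single high priority task":
high = [t for t in list_todos() if t["priority"] == "high"]  # list, then analyze
combined_id = create_todo(                                   # create the merged task
    title=" + ".join(t["title"] for t in high),
    description="; ".join(f"{t['title']}: {t['description'] or 'no details'}" for t in high),
    priority="high",
)
for t in high:                                               # remove the originals
    delete_todo(t["id"])
```

The high-priority list is captured before the merged task is created, so the new task is never deleted along with the originals.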

Evolution Without Breaking Changes

Watch what happens when we enhance our todo server with new capabilities:

```python
from typing import Annotated, Any, Dict, Optional

from pydantic import Field

# Simply add new optional parameters to the existing function.
# Field(...) below abbreviates the unchanged definitions shown earlier.
@mcp.tool()
def create_todo(
    title: Annotated[str, Field(...)],
    description: Annotated[str, Field(...)],
    priority: Annotated[str, Field(...)],
    # NEW: Adding due date and category
    due_date: Annotated[Optional[str], Field(
        default=None,
        description="When the task should be completed (ISO 8601 format)"
    )] = None,
    category: Annotated[Optional[str], Field(
        default=None,
        description="Task category for organization (e.g., 'work', 'personal')"
    )] = None
) -> Dict[str, Any]:
    """Add a new task to the todo list with specified details."""
    # ... existing implementation
```

The moment this server restarts, every connected AI automatically discovers the new parameters. Without touching any client code, users can immediately say:

User: "Create a todo to review MCP docs, due next Friday, category work"

Claude automatically uses the new parameters. While you still need to test your server changes and ensure backward compatibility, there's no mandatory client update cycle. The AI adapts instantly because MCP communicates capabilities, not implementations.


In traditional integrations:

Update API → Update client libraries → Update documentation → Coordinate deployments → Test everything

With MCP:

Update server → Test compatibility → AI clients adapt automatically

This doesn't eliminate the need for good engineering practices. You still need tests, backward compatibility, and careful rollouts. But it does eliminate the direct client-server coupling that makes traditional integrations so fragile. When you add optional parameters or new tools, existing clients continue working while new capabilities become immediately available. This is the architectural shift from brittle integrations to evolving capabilities.
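Part of the "test compatibility" step can be automated. Below is a minimal sketch, assuming you keep the previous tool schema around (for example, as a JSON file in the repo). Under this rule, a change is backward compatible for existing callers if every old parameter still exists with the same type and no new parameter is required. The rule set is a simplification of our own, not something MCP defines, and real schemas for `Optional` fields are richer than the plain types used here.

```python
from typing import Any


def breaking_changes(old: dict[str, Any], new: dict[str, Any]) -> list[str]:
    """Compare two tool inputSchemas; return reasons old calls might fail."""
    problems = []
    old_props, new_props = old.get("properties", {}), new.get("properties", {})
    for name, schema in old_props.items():
        if name not in new_props:
            problems.append(f"removed parameter: {name}")
        elif new_props[name].get("type") != schema.get("type"):
            problems.append(f"type changed: {name}")
    # Old callers never send newly required parameters, so their calls would fail.
    newly_required = set(new.get("required", [])) - set(old.get("required", []))
    for name in sorted(newly_required):
        problems.append(f"new required parameter: {name}")
    return problems


v1 = {"type": "object", "required": ["title"],
      "properties": {"title": {"type": "string"}, "priority": {"type": "string"}}}
# v2 adds optional due_date and category, as in the enhanced create_todo.
v2 = {"type": "object", "required": ["title"],
      "properties": {**v1["properties"],
                     "due_date": {"type": "string"}, "category": {"type": "string"}}}
```

Running `breaking_changes(v1, v2)` in CI returns an empty list for the optional-parameter change, while making `due_date` required would be reported.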

From Demo to Reality

Our todo example is intentionally simple. But the patterns it demonstrates enable genuinely agentic systems.

Consider how the same "combine todos" pattern scales: An AI agent that consolidates duplicate customer tickets by analyzing content, merging histories, and updating all stakeholders. Or an AI that refactors an entire codebase by understanding dependencies, making changes, running tests, and creating pull requests, all through composed MCP tool calls.

The breakthrough isn't in any individual tool. It's in how AI discovers and combines them to solve problems you never explicitly programmed. Today it's combining todos. Tomorrow it's orchestrating your entire operational workflow.

The complexity lives in your domain logic, not in the integration layer. MCP provides the substrate for AI agents to act autonomously within your systems.

Your Path to MCP

Ready to move from theory to implementation? The good news is that MCP's adoption can be as gradual or ambitious as your situation demands. Whether you're looking to quickly enhance existing APIs or architect new AI-native services, there's a clear path forward that delivers value from day one.

MCP isn't all-or-nothing. Adoption strategies might include:

Wrap existing APIs: Transform your REST endpoints into MCP tools

Coexist with current systems: Run MCP alongside existing integrations

Build MCP-first: Design new services with AI discoverability in mind

This flexibility means you can adopt MCP incrementally, proving its value before full commitment.
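Wrapping an existing API usually means a thin function that calls the endpoint and returns a model-friendly result. Below is a minimal sketch with a hypothetical orders service: the base URL, the `/orders/{id}` path, and the response fields are assumptions. The HTTP call is injected through a factory so the wrapper can be tested without a network, and so the transport never appears as a tool parameter. In an MCP server, you would register the returned `get_order_status` function as a tool.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Callable

BASE_URL = "https://orders.example.internal/api"  # hypothetical endpoint


def http_get_json(url: str) -> dict[str, Any]:
    """Default transport: a plain GET that decodes a JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def make_get_order_status(fetch: Callable[[str], dict[str, Any]] = http_get_json):
    def get_order_status(order_id: str) -> dict[str, Any]:
        """Look up the current status of one order by id (read-only)."""
        url = f"{BASE_URL}/orders/{urllib.parse.quote(order_id, safe='')}"
        order = fetch(url)
        # Return only the fields an agent needs, not the raw payload.
        return {"order_id": order_id, "status": order["status"],
                "updated_at": order.get("updated_at")}
    return get_order_status
```

Starting with a read-only endpoint like this keeps the blast radius small while you learn how agents actually use the tool.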

This Week:

Clone our MCP Todo Tutorial and run through the examples

Clone the MCP servers repository and explore existing implementations

Connect Claude Desktop to the GitHub MCP server—see how AI can create issues, review PRs, and manage repos

Read through one server implementation to understand the patterns

Next Sprint:

Wrap one read-only API endpoint as an MCP tool

Test it with Claude Desktop or the MCP Inspector[3]

Add a second tool that composes with the first

This Quarter:

Design MCP interfaces for a critical workflow

Implement audit logging for AI actions

Share patterns with your team

The key is starting with low-risk, high-value integrations that prove the concept.
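For the audit-logging item, a decorator around each tool is a low-effort starting point. Below is a minimal sketch using the standard `logging` module. The logger name, the record fields, and the `delete_todo` tool are illustrative assumptions. A production audit trail would also capture which user or session initiated the call and ship records somewhere tamper-resistant.

```python
import functools
import json
import logging
import time
from typing import Any, Callable

audit_log = logging.getLogger("mcp.audit")  # hypothetical logger name


def audited(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Record every AI-initiated tool call, its arguments, and its outcome."""
    @functools.wraps(fn)  # keep the name and docstring the tool is described by
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        record = {"tool": fn.__name__, "args": args, "kwargs": kwargs, "ts": time.time()}
        try:
            result = fn(*args, **kwargs)
            record["outcome"] = "ok"
            return result
        except Exception as exc:
            record["outcome"] = f"error: {exc}"
            raise
        finally:
            # Log on success and failure alike; default=str handles odd argument types.
            audit_log.info(json.dumps(record, default=str))
    return wrapper


@audited
def delete_todo(todo_id: int) -> dict[str, Any]:
    """Delete a todo by id."""
    return {"deleted": todo_id}
```

If you combine this with a server's registration decorator, apply `@audited` closest to the function so the registered tool is the audited one.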

The Architecture Decision Before You

MCP represents a fundamental choice in how you build AI integrations. You can continue maintaining custom endpoints, version management, and client libraries for every AI platform. Or you can build capabilities once and let any AI discover and use them.

As AI shifts from generating suggestions to taking actions, this choice becomes critical. The companies that adopt capability-based architectures will have AI that truly operates within their systems.

We've focused on MCP's core architecture, but production deployment brings additional challenges: evaluating agent reliability, implementing security patterns[4], and optimizing performance at scale. Subscribe to explore these critical topics as we continue mapping the agentic landscape in future posts.

References

[1] Resources, Tools, and Prompts are where most people start with MCP, but the protocol defines additional mechanisms.

[2] Multiple enterprise AI platforms are adopting MCP as their standard for third-party integrations, though specific vendor announcements are still emerging as the protocol gains traction in enterprise environments.

[3] The MCP Inspector is an official development tool that provides a web-based interface for testing and debugging MCP servers, allowing developers to validate their implementations before integrating them with AI hosts.

[4] Security considerations for MCP implementations include designing appropriate authentication mechanisms, implementing tool-level authorization controls, maintaining audit trails for AI actions, and following the principle of least privilege when exposing capabilities to AI systems.