Weekly review: RAPTOR: Autonomous Security Research Framework, OpenCode, and much more

The week's curated set of relevant AI Tutorials, Tools, and News

Welcome back to Altered Craft’s weekly AI review for developers. Thanks for making this newsletter part of your week. This edition carries a strong Anthropic theme: they acquired Bun, published internal data on how their engineers use Claude, and a researcher extracted Claude’s “soul document” from model weights. Beyond the Anthropic news, you’ll find practical guides on AI coding workflows and OpenRouter’s analysis of 100 trillion tokens showing programming now dominates LLM usage.

TUTORIALS & CASE STUDIES

Product Evals in Three Simple Steps

Estimated read time: 12 min

Eugene Yan presents a practical framework for building reliable LLM evaluation systems using binary labeling, single-dimension evaluators, and automated harnesses. Learn why “God Evaluators” fail and why investing four weeks in evaluation infrastructure enabled hundreds of experiments that manual review made impossible.

The opportunity: Teams struggling with LLM quality can move from gut-feel assessments to systematic iteration—the infrastructure investment pays back quickly when you can run experiments at scale.
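To make the idea concrete, here is a minimal sketch of a harness built from binary, single-dimension evaluators. It is not Eugene Yan's code: the `Sample` type, the two toy evaluators, and `run_harness` are hypothetical names chosen for illustration, and a real setup would likely use human-labeled data and LLM-based judges rather than string checks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Sample:
    """One model response to evaluate (hypothetical structure)."""
    prompt: str
    output: str


# Each evaluator judges exactly one dimension and returns pass/fail.
Evaluator = Callable[[Sample], bool]


def is_concise(sample: Sample) -> bool:
    # Toy rule (an assumption): pass if the output is at most 50 words.
    return len(sample.output.split()) <= 50


def no_refusal(sample: Sample) -> bool:
    # Toy rule (an assumption): fail if the output contains a refusal phrase.
    return "i cannot" not in sample.output.lower()


def run_harness(samples: List[Sample],
                evaluators: Dict[str, Evaluator]) -> Dict[str, float]:
    """Return the pass rate per dimension across all samples."""
    rates: Dict[str, float] = {}
    for name, evaluate in evaluators.items():
        passed = sum(1 for s in samples if evaluate(s))
        rates[name] = passed / len(samples) if samples else 0.0
    return rates


if __name__ == "__main__":
    demo = [
        Sample("Summarize the release notes", "Short answer."),
        Sample("Summarize the release notes", "I cannot help with that."),
    ]
    print(run_harness(demo, {"concise": is_concise, "no_refusal": no_refusal}))
```

Keeping one evaluator per dimension means a drop in a single pass rate points at a single failure mode, which is the contrast with a "God Evaluator" that scores everything at once.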

The 75/25 Rule for AI-Assisted Development

Estimated read time: 5 min

Randal Olson, Ph.D., argues that planning should consume 75% of your AI coding workflow, with implementation just 25%. His three-phase approach shifts heavy thinking to high-reasoning models while fast, cheap models handle execution. When coding gets difficult, it signals planning needs strengthening.

Key point: This reframes AI coding struggles productively—difficulty during implementation becomes a signal to improve your planning, not a limitation of the tools.

Mastering Vibe Coding Without Sacrificing Code Quality

Estimated read time: 8 min

This guide teaches developers how to leverage AI code generation while maintaining production standards. Covers five critical gaps in AI-generated code—context awareness, integration, security, testing, and operability—plus a comprehensive review checklist for experienced developers.

What this enables: A concrete framework for reviewing AI-generated code systematically, helping you catch common issues before they reach production.

Cursor’s Official Guide for Non-Engineers

Estimated read time: 15 min

Cursor published an internal onboarding guide walking newcomers from installation through deployment. Originally created for GTM and non-engineering hires, it covers setup, project building, GitHub integration, and Vercel deployment in six steps.

Worth noting: This signals how far AI-assisted development has progressed. A resource you can share with non-technical teammates who want to build their own tools.

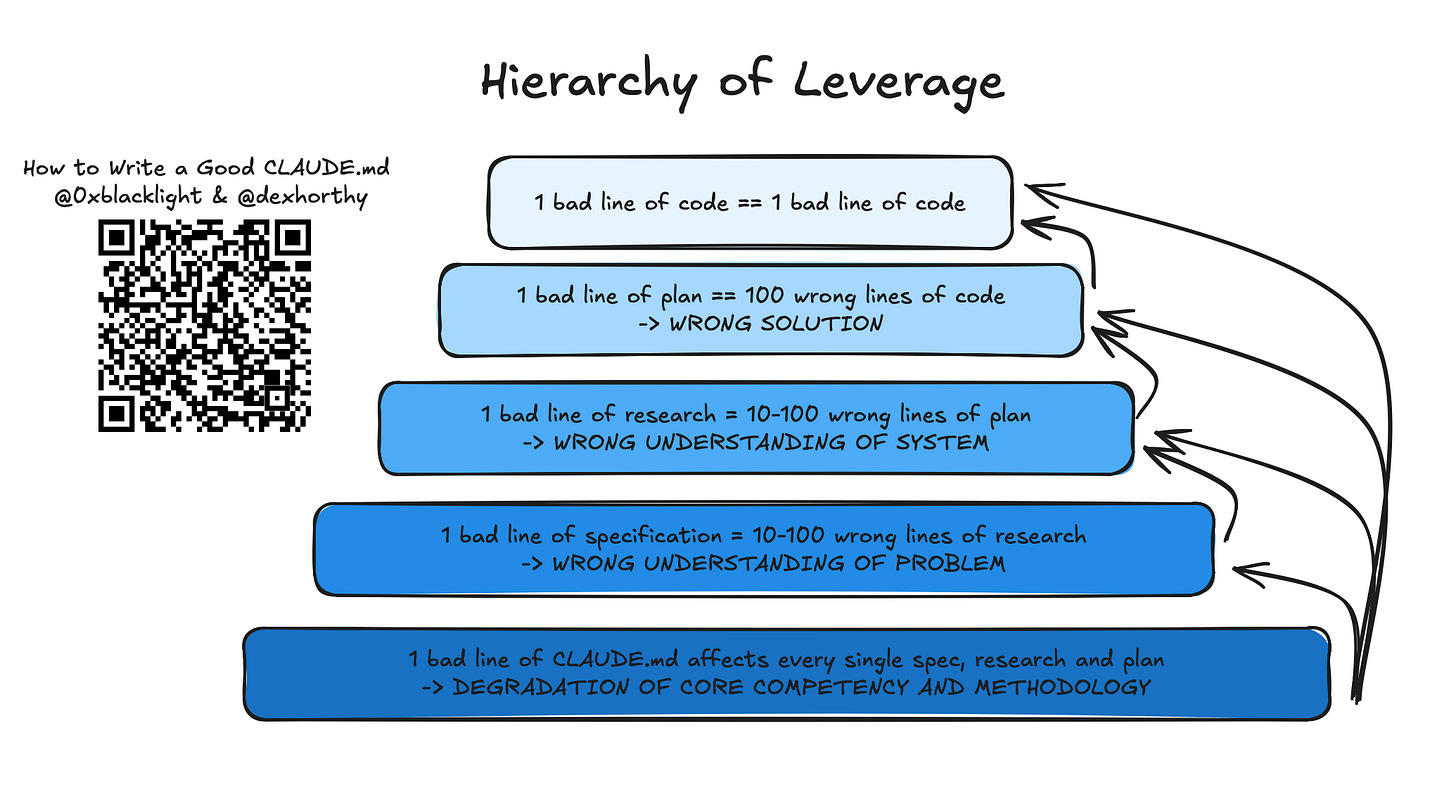

Writing Effective CLAUDE.md Files

Estimated read time: 6 min

Shifting from IDE tools to configuration: HumanLayer reports that Claude Code can reliably follow only about 150-200 instructions, and its system prompt already consumes ~50 of them. Their recommendation: keep CLAUDE.md under 300 lines, use progressive disclosure via separate files, and focus on universal instructions.

The takeaway: Less is genuinely more here. Bloated configuration files get ignored uniformly, so ruthless prioritization of your most important instructions improves results.
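One way to enforce that budget is a small check you run before committing. The sketch below is only an illustration: the function name `audit_instruction_file` is hypothetical, the 300-line default comes from the recommendation above, and counting lines is a rough proxy for counting instructions.

```python
def audit_instruction_file(text: str, max_lines: int = 300) -> dict:
    """Report the size of an instruction file against a line budget."""
    lines = text.splitlines()
    non_blank = [line for line in lines if line.strip()]
    return {
        "total_lines": len(lines),
        "non_blank_lines": len(non_blank),
        "within_budget": len(lines) <= max_lines,
    }


if __name__ == "__main__":
    from pathlib import Path

    path = Path("CLAUDE.md")  # assumed location: the current directory
    if path.exists():
        print(audit_instruction_file(path.read_text(encoding="utf-8")))
    else:
        print("No CLAUDE.md found in the current directory.")
```

If the file is over budget, the progressive-disclosure advice suggests moving task-specific guidance into separate files rather than trimming the universal instructions.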

Google’s Context Engineering for Multi-Agent Systems

Estimated read time: 14 min

Google’s Agent Development Kit introduces context engineering as a discipline for scaling AI agents beyond simple context window expansion. The framework separates working context, sessions, memory, and artifacts—treating context as “a compiled view over a richer stateful system.”

Why now: As agent systems grow more complex, understanding how to architect context becomes essential—this provides vocabulary and patterns for production-grade multi-agent design.

TOOLS

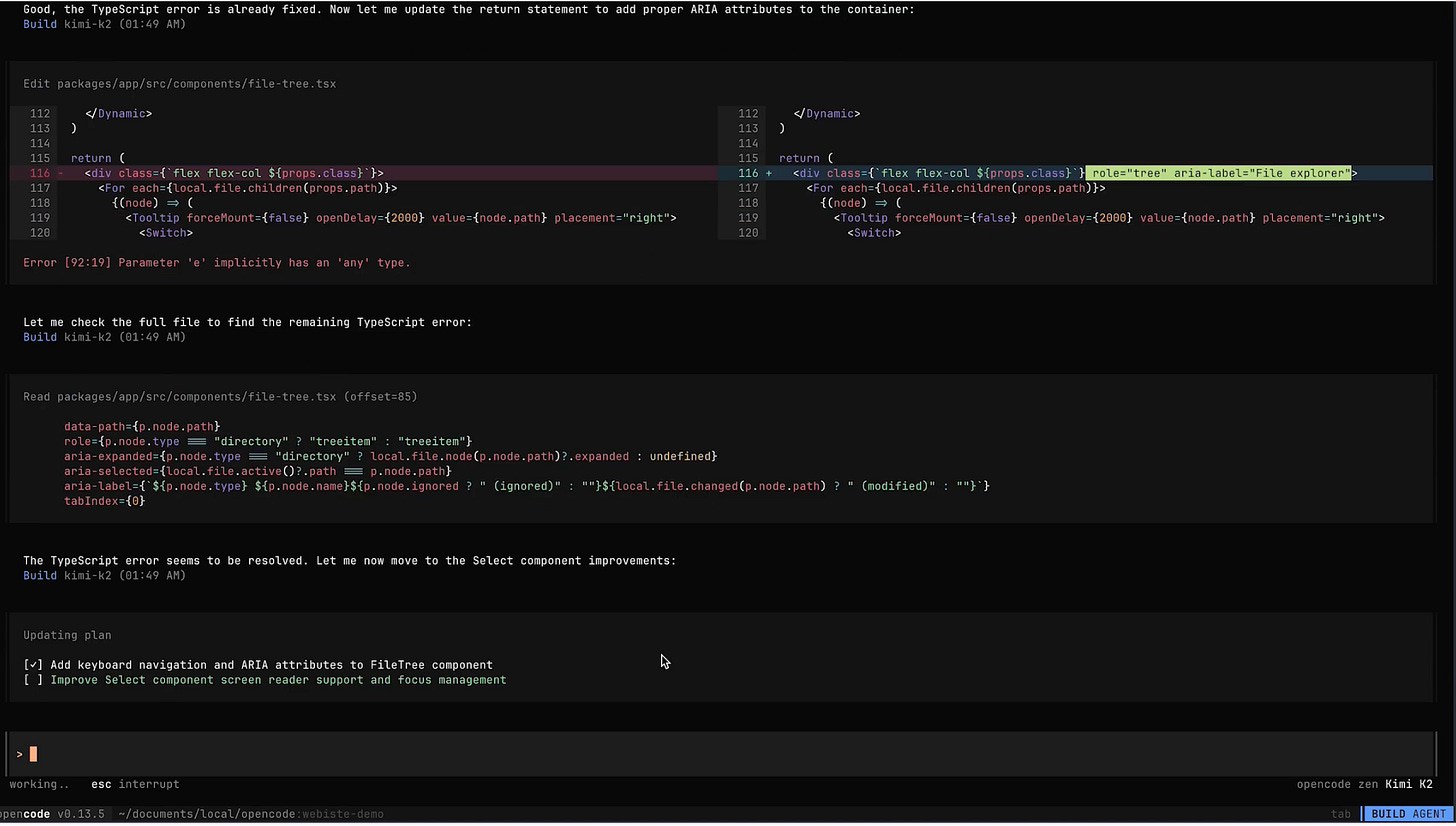

OpenCode: Open Source AI Coding Agent

Estimated read time: 3 min

OpenCode is a free, terminal-based AI coding agent supporting 75+ LLM providers including local models. Run multiple agents in parallel, share sessions, and maintain strict privacy—no account required. With 35,000 GitHub stars, it’s emerging as a compelling open alternative.

What’s interesting: A Claude Code-like experience with your choice of 75+ LLM providers.

Claude Code Agents Plugin

Estimated read time: 6 min

For Claude Code users specifically, a comprehensive plugin provides 85 specialized agents, 15 multi-agent orchestrators, 47 skills, and 44 tools across 63 plugins. Features hybrid Haiku/Sonnet orchestration and granular loading averaging just 3.4 components per plugin.

The context: Claude Code’s extensibility ecosystem is maturing rapidly. This marketplace lets you compose specialized capabilities for complex workflows like full-stack feature development.

UI UX Pro Max: Design Intelligence for Coding Assistants

Estimated read time: 4 min

This AI skill provides searchable design databases for Claude Code, Cursor, and Windsurf. Includes 57 UI styles, 95 color palettes by industry, 56 font pairings, and 98 UX guidelines. Supports React, Vue, SwiftUI, and Flutter with natural language queries.

Why this matters: Bridges the gap between “I know what I want it to look like” and actually getting stylistically coherent code—useful for developers who aren’t design-focused.

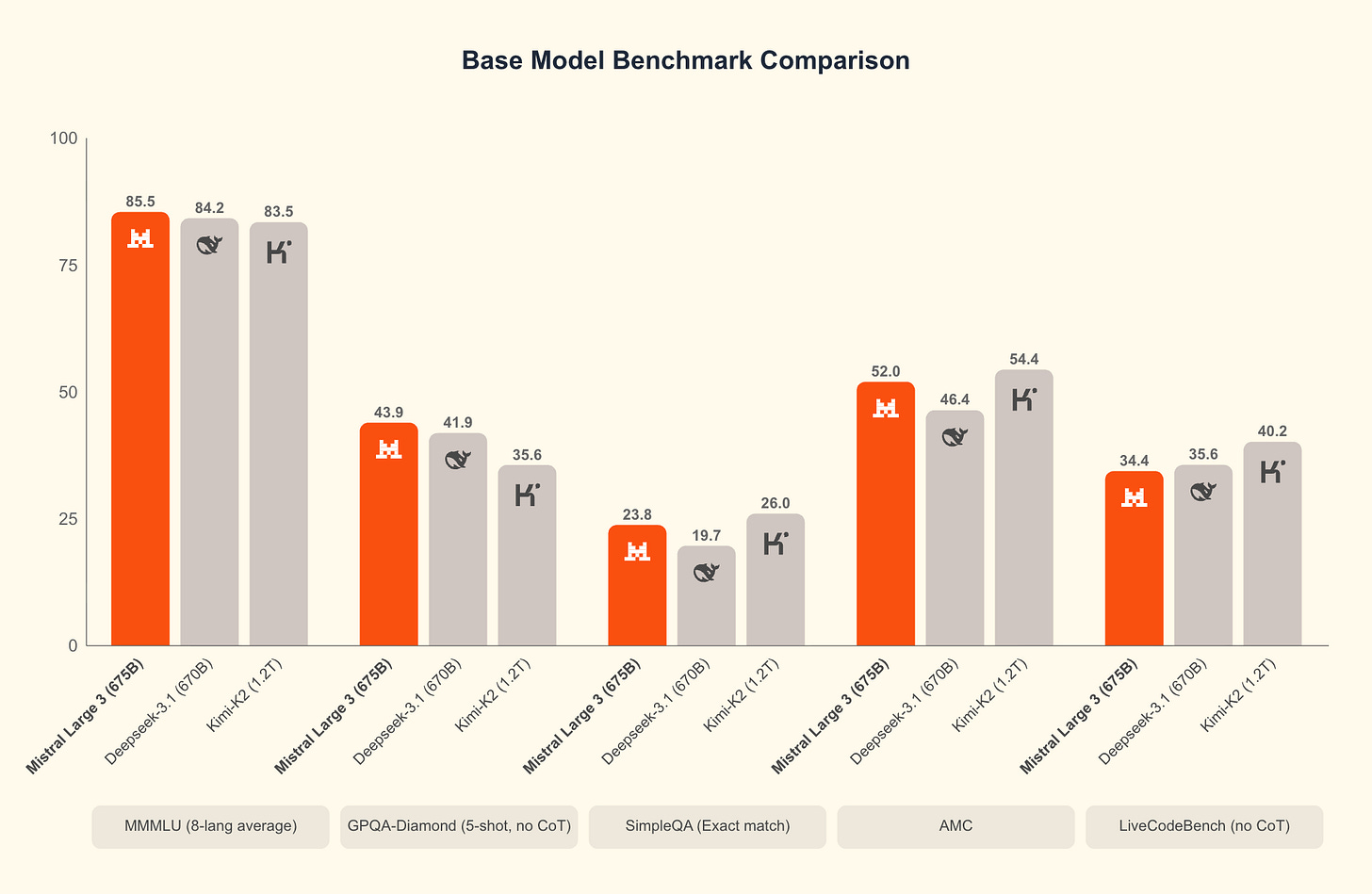

Mistral 3: Frontier Performance with Apache 2.0 Licensing

Estimated read time: 5 min

Mistral released a comprehensive model family including Mistral Large 3 (41B active parameters, 675B total), which ranks #2 among open-source non-reasoning models on LMArena. The release comes with Apache 2.0 licensing, image understanding, and support for 40+ languages.

The opportunity: Frontier-class performance under permissive licensing opens possibilities for production deployments where proprietary API dependencies are a concern.

RAPTOR: Autonomous Security Research Framework

Estimated read time: 7 min

Applying these tools to security workflows, RAPTOR combines Claude Code with security tools for autonomous vulnerability discovery, analysis, and remediation. Nine expert personas, integrated static analysis and fuzzing, plus conversational commands like /scan and /analyze for CI/CD integration.

What this enables: Security workflows that previously required deep expertise become more accessible—useful for teams without dedicated security researchers.

NEWS & EDITORIALS

OpenRouter’s 100 Trillion Token Analysis of Real AI Usage

Estimated read time: 18 min

OpenRouter’s analysis reveals programming now drives 50%+ of LLM queries, up from 11%, with prompts expanding 4x to 6K average tokens. Open-source models hold ~30% market share. Key finding: early adopters show exceptional retention via the “glass slipper” effect.

Why this matters: Real usage data from 100 trillion tokens reveals where the industry is actually heading. Programming-centric workflows are now the dominant use case, not a niche.

OpenAI Declares ‘Code Red’ as Google Closes Gap

Estimated read time: 3 min

Sam Altman issued an internal “code red,” delaying ads, shopping agents, and the Pulse assistant to focus on ChatGPT fundamentals: speed, reliability, and personalization. Google’s Gemini 3 surpassed competitors on benchmarks, a full-circle moment given that Google declared its own code red after ChatGPT launched.

The context: Intensifying competition at the frontier means faster improvements across all major platforms. The pace of capability advancement isn’t slowing down.

Bun Runtime Acquired by Anthropic

Estimated read time: 3 min

Meanwhile, Anthropic acquired Bun, betting on the fast JavaScript runtime as infrastructure powering Claude Code, Claude Agent SDK, and future AI coding products. The move signals a commitment to building comprehensive AI development tools rather than relying on external infrastructure.

Worth watching: Deeper Bun integration across Claude’s coding tools could meaningfully improve execution speed and developer experience for JavaScript/TypeScript workflows.

How AI Is Transforming Work at Anthropic

Estimated read time: 10 min

Anthropic surveyed 132 engineers and researchers, finding Claude usage jumped from 28% to 59% of work with a 50% productivity boost. Engineers report becoming “full-stack,” handling tasks outside their expertise—though mentorship patterns are shifting as Claude becomes “the first stop for questions.”

Key point: First-party data on how AI-native teams are evolving their workflows. Useful for understanding where team dynamics may shift as AI adoption deepens.

Claude 4.5 Opus Soul Document Extracted

Estimated read time: 52 min

A researcher extracted what appears to be Claude’s internal “soul document” from model weights using consensus-based completions. The ~13,000-word document covers Anthropic’s guidelines on safety, helpfulness, honesty, and Claude’s identity—confirmed as used in supervised learning.

What’s interesting: A rare window into how AI character training shapes behavior at a foundational level. Helps demystify why Claude responds the way it does.