Weekly Review: Key Editorials, News, Tools, and Tutorials for Starting 2026

The week's curated set of relevant AI Tutorials, Tools, and News

Welcome to Altered Craft’s first weekly AI review for developers in 2026. Thank you for starting the new year with me. This edition leans heavily into editorials and retrospectives, with voices like Simon Willison and Sebastian Raschka helping calibrate where we’ve been and where we’re heading. Alongside the reflection, you’ll find practical agent-building resources and a spirited debate about “vibe coding” versus intentional control. Here’s to building thoughtfully in 2026.

NEWS & EDITORIALS

Simon Willison’s 2025: The Year in LLMs

Estimated read time: 35 min

Simon Willison’s comprehensive retrospective covers 2025’s defining LLM developments. Reasoning models dominated. Agents became real. Claude Code’s February release proved most impactful. Chinese labs topped open-weight rankings. $200/month subscription tiers emerged. “Vibe coding” entered the lexicon.

The context: Willison’s annual review is essential for calibrating your mental model of the field. His practitioner perspective cuts through both hype and doomerism.

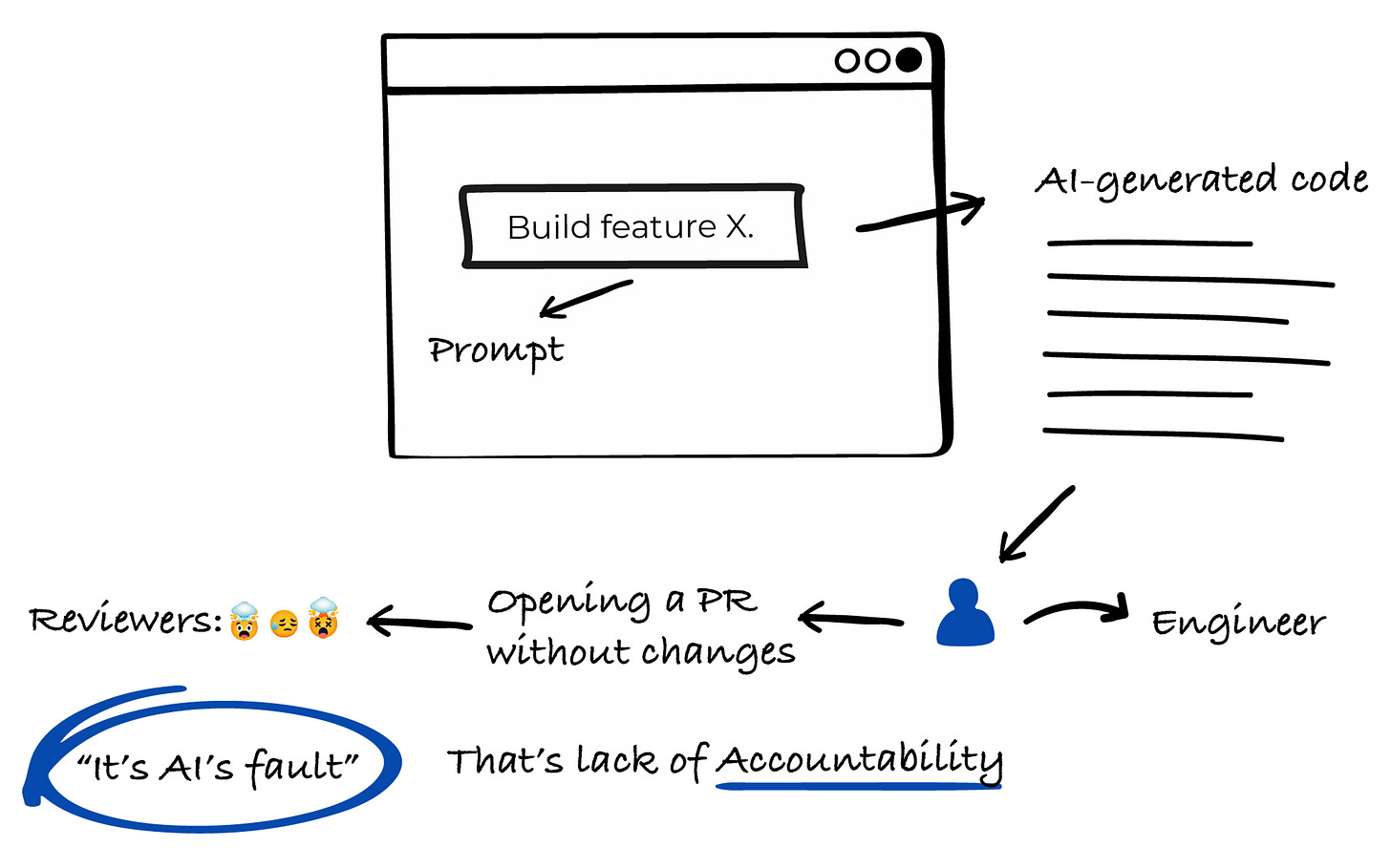

AI Coding Tools Are Not the Problem: Accountability Is

Estimated read time: 10 min

As the application layer matures, how developers use tools matters more than the tools themselves. This editorial confronts engineers who submit unreviewed AI-generated code and blame the tool. The author advocates treating AI as a thought partner requiring verification, not an autopilot.

Worth noting: A good framework for team conversations about AI tool adoption. The real debate isn’t “should we use AI” but “how do we maintain quality.”

Research: Professional Developers Control AI, They Don’t Vibe

Estimated read time: 15 min

Academic research supports this accountability perspective. This paper finds experienced developers prioritize intentional, methodical control over intuitive “vibing” approaches. Effective AI-assisted development requires structured oversight. Implications for tool designers: transparency and control serve practitioners better than convenience features.

What’s interesting: Empirical validation of what senior developers already know intuitively. Useful reference for advocating better tooling practices on your team.

How Vibe Coding Killed Cursor’s Utility

Estimated read time: 12 min

One developer applies a control-first philosophy to tool evaluation. Anton Morgunov argues that unrestricted “vibe coding” is economically unsustainable and has compromised Cursor. His solution: manually compile the relevant files into a single markdown document, providing full context upfront. Alternatives: Google AI Studio or the terminal-based OpenCode.

The takeaway: A practitioner’s critique with concrete alternatives. Worth reading if Cursor’s behavior has frustrated you, or to understand the context-management tradeoffs.
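Morgunov’s “compile the files yourself” step is easy to script. Here is a minimal sketch, assuming you pick the files by hand and want one markdown section per file; `load_sources` and `bundle_sources` are hypothetical helpers, not his actual tooling.

```python
from pathlib import Path

# Built indirectly so the generated fences never clash with the markdown around this snippet.
FENCE = "`" * 3


def load_sources(root: str, rel_paths: list[str]) -> dict[str, str]:
    """Read the hand-picked files, keyed by their path relative to root."""
    base = Path(root)
    return {p: (base / p).read_text(encoding="utf-8") for p in rel_paths}


def bundle_sources(sources: dict[str, str]) -> str:
    """Render {relative_path: file_text} as one markdown document, one fenced section per file."""
    sections = ["# Project context"]
    for rel_path, text in sources.items():
        lang = Path(rel_path).suffix.lstrip(".")  # "py" for main.py; empty when there is no extension
        sections.append(f"## {rel_path}\n\n{FENCE}{lang}\n{text.rstrip()}\n{FENCE}")
    return "\n\n".join(sections) + "\n"
```

Usage: `bundle_sources(load_sources(".", ["src/app.py", "README.md"]))` returns the whole document as one string to paste into a single prompt. Hand-picking is the point: you decide what the model sees instead of letting the tool retrieve context on its own.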

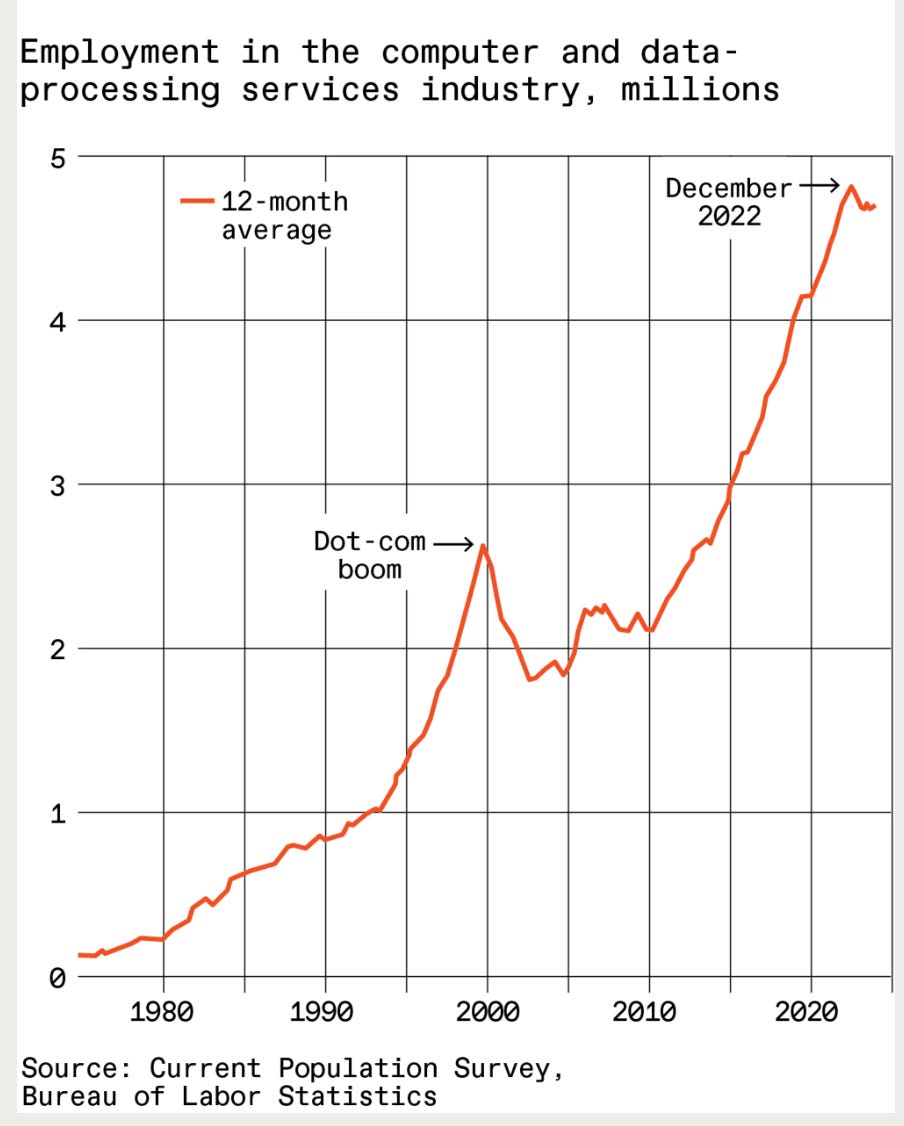

AI’s Effect on Entry-Level Jobs: Adaptation Required

Estimated read time: 8 min

These shifting expectations extend beyond individual developers to reshape the job market. IEEE Spectrum examines how AI is transforming entry-level job expectations. The premise: new workers must leverage AI tools strategically. Graduates who master AI collaboration gain competitive advantages.

Why this matters: If you’re mentoring junior developers or hiring, this frames the new baseline expectations. AI literacy is becoming table stakes.

Meta Acquires Manus AI Agent Platform After Rapid Growth

Estimated read time: 4 min

Following last week’s coverage of Nvidia’s $20B Groq purchase[1], acquisition momentum continues as the industry consolidates around proven agent infrastructure. Manus joins Meta after remarkable traction: 147 trillion tokens processed, 80 million virtual computers created, and $100M ARR in December. Subscriptions and Singapore operations continue unchanged.

The signal: Meta buying an agent platform that reached $100M ARR this quickly shows where Big Tech sees the next platform war. Agents are infrastructure now.

[1] Nvidia Acquires Groq for $20 Billion

Elena Verna on AI Growth: Find Product-Market Fit Every Three Months

Estimated read time: 12 min

For another view on growth, Lenny Rachitsky’s interview with Elena Verna lays out new playbooks for AI companies. Key insight: companies must find product-market fit every three months. At Lovable, 95% of growth comes from launching new features, not funnel optimization.

What this enables: Practical growth tactics if you’re building AI products. The “continuous PMF” framing is particularly useful for fast-moving markets.

TUTORIALS & CASE STUDIES

State of LLMs in 2025: Training, Reasoning, and Benchmarking

Estimated read time: 42 min

Sebastian Raschka delivers a comprehensive analysis of 2025’s LLM developments, covering DeepSeek’s $5M training revolution, RLVR reasoning techniques, and the “benchmaxxing” crisis. Key takeaway: post-training optimization and tool integration now matter more than raw scaling. He predicts inference-time compute will drive 2026 gains.

The context: This is essential reading for understanding where the field actually stands, cutting through marketing narratives with technical depth that informs better architectural decisions.
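RLVR (reinforcement learning with verifiable rewards) swaps a learned reward model for a programmatic check against a known answer. Below is a toy reward of that kind, assuming math-style problems whose final answer appears as `\boxed{...}` or as the last number in the completion; the extraction rules are illustrative choices, not Raschka’s code or any lab’s recipe.

```python
import re
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Take the last \\boxed{...} answer; otherwise fall back to the last number in the text."""
    boxed = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    if boxed:
        return boxed[-1].strip()
    numbers = re.findall(r"-?\d+(?:\.\d+)?", completion)
    return numbers[-1] if numbers else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 on an exact match with the reference answer, else 0.0."""
    answer = extract_final_answer(completion)
    return 1.0 if answer is not None and answer == reference.strip() else 0.0
```

Because the check is plain code, the reward is cheap and reproducible, but it is also literal: `\boxed{42.0}` scores 0.0 against a reference of `42`, so answer normalization is worth adding.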

Deep Dive: How Claude Code’s Architecture Actually Works

Estimated view time: 66 min

Jared Zoneraich dissects Claude Code’s internal architecture, revealing its counterintuitive simplicity: a single-threaded “Master Loop” rather than complex agentic frameworks. The talk examines internal tools, the Todo planning mechanism, and why sandboxing matters. Success comes from trusting capable models over brittle DAG orchestration.

What’s interesting: Understanding why Anthropic chose simplicity over complexity helps you avoid over-engineering your own agent systems. Sometimes less infrastructure is more.
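To make “single-threaded master loop” concrete, here is a minimal sketch, assuming a hypothetical model callable that returns either a tool request or final text. The message shapes, tool names, and turn limit are illustrative, not Anthropic’s code.

```python
from typing import Callable

# Hypothetical shapes: a model is any callable that reads the message list and returns either
# {"tool_call": {"name": ..., "input": ...}} or {"text": ...}. Not Claude Code's real internals.
Message = dict
Model = Callable[[list], dict]
Tool = Callable[[str], str]


def master_loop(model: Model, tools: dict[str, Tool], task: str, max_turns: int = 20) -> str:
    """One thread, one message list: call the model, run the tool it asks for, repeat until it answers."""
    messages: list[Message] = [{"role": "user", "content": task}]
    for _ in range(max_turns):
        reply = model(messages)
        messages.append({"role": "assistant", "content": reply})
        call = reply.get("tool_call")
        if call is None:  # no tool requested, so this is the final answer
            return reply["text"]
        name = call["name"]
        result = tools[name](call["input"]) if name in tools else f"error: unknown tool {name!r}"
        messages.append({"role": "tool", "name": name, "content": result})  # feed the result back in
    raise RuntimeError("turn limit reached without a final answer")


def scripted_model(messages: list) -> dict:
    """Stand-in for a real LLM: reads a file on the first turn, then answers from the tool result."""
    if messages[-1]["role"] == "user":
        return {"tool_call": {"name": "read_file", "input": "README.md"}}
    return {"text": "summary of " + messages[-1]["content"]}
```

With the stub, `master_loop(scripted_model, {"read_file": lambda p: f"<contents of {p}>"}, "summarize the README")` returns `"summary of <contents of README.md>"`. In this shape, a planning aid such as a todo list becomes just another entry in `tools` rather than a separate orchestration layer.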

Claude Code in Action: Official Anthropic Course

Estimated course time: 3 hours

For hands-on practice, Anthropic’s official course teaches developers to integrate Claude Code into professional workflows. Eight modules cover coding assistant architecture, multi-tool orchestration, context management, MCP server integration, and GitHub automation. Prerequisite: familiarity with CLI and Git basics.

The opportunity: Free, structured training directly from Anthropic. If you’re using Claude Code casually, this course surfaces capabilities you’re probably missing.

Building AI Agents: Will Larson’s Complete Series

Estimated read time: 45 min (full series)

Moving beyond Claude-specific tooling, Will Larson documents Imprint’s journey building internal agent workflows across nine articles. Topics span skill support, context compaction, evaluation frameworks, and subagent architecture. His recommendation: build your own framework as a learning exercise before adopting existing solutions.

Why this matters: Production-tested patterns from a respected engineering leader. These aren’t theoretical; they’re running in production at a real fintech company.

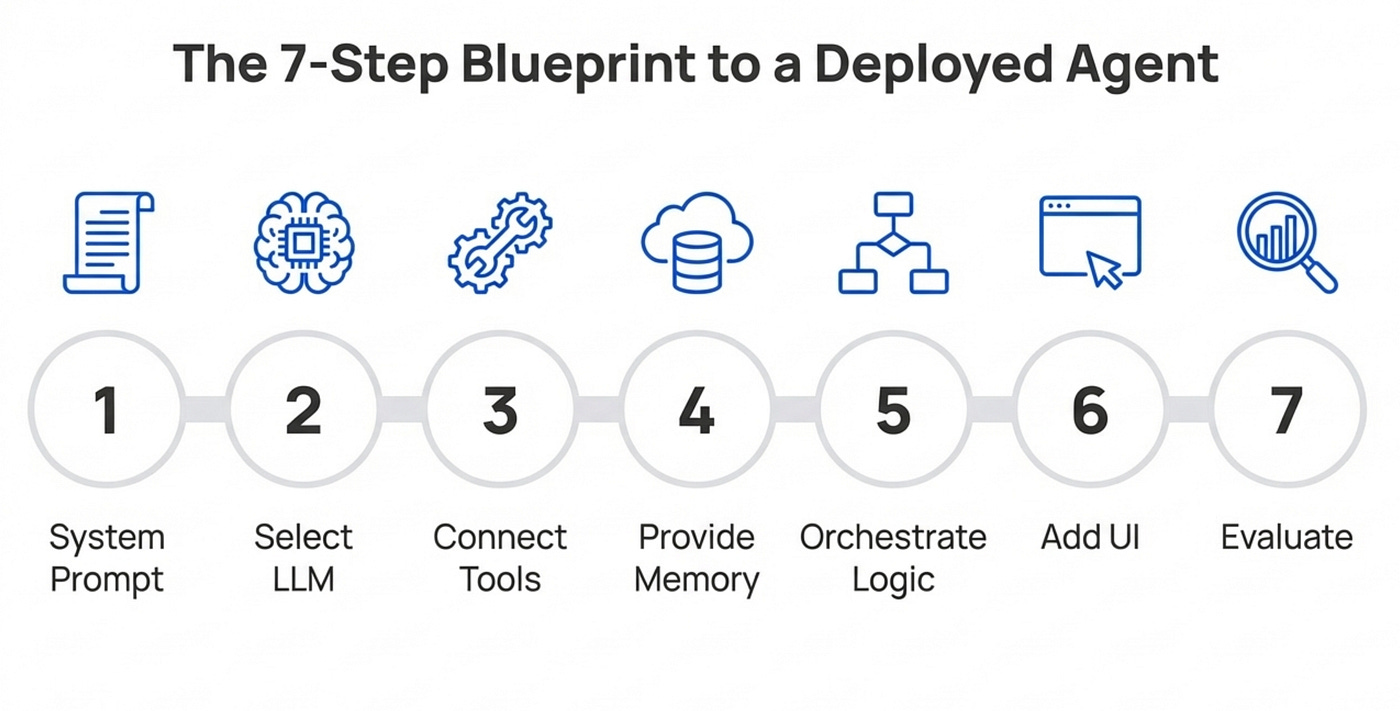

Build Your First AI Agent in Seven Practical Steps

Estimated read time: 10 min

For a condensed starting point, Paweł Huryn provides a practical framework for building AI agents from scratch. The seven steps cover system prompts, LLM selection, tools, memory, orchestration, UI, and evaluation. Key insight: start simple with one tool and no memory, then iterate.

Key point: If Larson’s series feels overwhelming, this is your on-ramp. A weekend project that builds real intuition for agent development.
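The seventh step, evaluation, can start very small. Here is a sketch assuming your agent is a plain function from prompt to answer and each test case carries its own pass/fail check; `evaluate` is a hypothetical helper, not something from Huryn’s article.

```python
from typing import Callable

Agent = Callable[[str], str]
Case = tuple[str, Callable[[str], bool]]  # (prompt, check applied to the agent's answer)


def evaluate(agent: Agent, cases: list[Case]) -> float:
    """Run every case and return the pass rate between 0.0 and 1.0."""
    if not cases:
        return 0.0
    passed = 0
    for prompt, check in cases:
        try:
            ok = bool(check(agent(prompt)))
        except Exception:
            ok = False  # an agent crash counts as a failed case, not a harness error
        passed += 1 if ok else 0
    return passed / len(cases)
```

Re-running the same cases after each change (adding memory, a second tool, a new system prompt) tells you whether the iteration actually helped.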

Context Window Compaction for Long-Running AI Agents

Estimated read time: 8 min

Once your agents are running, managing context becomes critical. Building on our coverage of Factory.ai’s context compression evaluation framework[1] from last week, Will Larson shares a strategy for managing context exhaustion in extended workflows: real-time token monitoring, virtual file systems for large outputs, and automatic compaction at 80% capacity. Enables agents to operate indefinitely without losing critical state.

What this enables: Long-running agents that don’t degrade over time. Essential reading if you’re building anything beyond single-turn interactions.

[1] Probe-Based Context Compression Evaluation
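The three pieces Larson describes fit in a small class. This is a minimal sketch under stated assumptions: tokens are approximated at about four characters each, `summarize` is a hypothetical callable (in practice an LLM call), and the “virtual file system” is an in-memory dict of path-like keys. It is not Larson’s implementation.

```python
from typing import Callable


def estimate_tokens(text: str) -> int:
    """Crude estimate (about 4 characters per token); use your model's tokenizer in practice."""
    return max(1, len(text) // 4)


class CompactingContext:
    """Hypothetical context manager: token monitoring, offloading large outputs, compaction at 80%."""

    def __init__(self, limit: int, summarize: Callable[[list[str]], str],
                 threshold: float = 0.8, keep_recent: int = 4, max_inline_chars: int = 2000):
        self.limit = limit                  # context window size in (estimated) tokens
        self.summarize = summarize          # assumed to be an LLM call that condenses old turns
        self.threshold = threshold          # compact once usage reaches this share of the window
        self.keep_recent = keep_recent      # newest messages that are always kept verbatim
        self.max_inline_chars = max_inline_chars
        self.messages: list[str] = []
        self.files: dict[str, str] = {}     # in-memory stand-in for a virtual file system

    def used(self) -> int:
        return sum(estimate_tokens(m) for m in self.messages)

    def add(self, text: str) -> None:
        if len(text) > self.max_inline_chars:
            path = f"/vfs/output_{len(self.files)}.txt"
            self.files[path] = text         # full output stays retrievable by path
            text = f"[large output stored at {path}, {len(text)} chars]"
        self.messages.append(text)
        if self.used() >= self.threshold * self.limit:
            self.compact()

    def compact(self) -> None:
        split = max(len(self.messages) - self.keep_recent, 0)
        old, recent = self.messages[:split], self.messages[split:]
        if old:
            self.messages = [self.summarize(old)] + recent
```

Keeping the newest messages verbatim and summarizing only the older ones is a design choice here; it protects whatever the agent is working on right now from being paraphrased away.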

TOOLS

LlamaParse v2 Simplifies Document Parsing with Tier System

Estimated read time: 5 min

LlamaIndex announces LlamaParse v2, with a streamlined four-tier approach that replaces complex configurations. Tiers range from Fast (1 credit/page) to Agentic Plus (45 credits/page after a 50% price cut); pick one based on document complexity. Version pinning provides production stability, while automatic routing eliminates manual configuration.

The takeaway: RAG pipelines just got simpler. If you’ve been manually tuning document parsing, this tier system reduces decision overhead significantly.

A2UI Enables AI Agents to Generate Secure User Interfaces

Estimated read time: 7 min

Google releases A2UI, an open standard for agents to generate interfaces via declarative JSON rather than executable code. Security-first design maintains approved component catalogs while enabling dynamic forms and dashboards. Framework-agnostic rendering works across Flutter, React, and Angular.

Why now: As agents gain more autonomy, UI generation becomes a security concern. A2UI offers a path forward that balances flexibility with safety.
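The security idea (agents emit data naming components from an approved catalog instead of emitting code) can be sketched as an allowlist check on the client. The JSON shape, component names, and catalog here are hypothetical and do not reproduce the A2UI specification.

```python
import json

# Hypothetical catalog (component type -> allowed properties). This is NOT the real A2UI schema.
CATALOG: dict[str, set[str]] = {
    "Column": {"children"},
    "Text": {"text"},
    "TextField": {"label", "name"},
    "Button": {"label", "action"},
}


def validate(node: object, path: str = "root") -> list[str]:
    """Return every violation found; an empty list means the tree uses only approved components."""
    if not isinstance(node, dict):
        return [f"{path}: expected an object"]
    kind = node.get("type")
    if not isinstance(kind, str) or kind not in CATALOG:
        return [f"{path}: component {kind!r} is not in the approved catalog"]
    errors = [f"{path}: property {key!r} not allowed on {kind}"
              for key in node if key != "type" and key not in CATALOG[kind]]
    children = node.get("children", [])
    if not isinstance(children, list):
        return errors + [f"{path}.children: expected a list"]
    for i, child in enumerate(children):
        errors.extend(validate(child, f"{path}.children[{i}]"))
    return errors


def parse_and_validate(raw: str) -> list[str]:
    """Agent output is parsed as data and checked; nothing in it is ever executed."""
    try:
        return validate(json.loads(raw))
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
```

In this sketch, a renderer would only map validated nodes onto its own Flutter, React, or Angular widgets; anything outside the catalog is rejected before it reaches the screen.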

Continuous Claude v2 Prevents Context Degradation

Estimated read time: 8 min

Also addressing agent infrastructure, Continuous Claude v2 tackles the loss of signal from repeated context compaction. It saves state to ledgers, clears the context, and resumes fresh. Features include agent orchestration with isolated windows, pre-compaction auto-handoffs, and a searchable Artifact Index.

Worth noting: If you’ve experienced agents “forgetting” important details mid-session, this addresses a potential root cause rather than working around it.
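The save, clear, and resume cycle can be sketched with a small ledger. The fields (goal, completed work, next steps, decisions) and the JSON serialization are assumptions for illustration, not Continuous Claude’s actual ledger format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Ledger:
    """Hypothetical session ledger: the durable state worth carrying across a context reset."""
    goal: str
    done: list[str] = field(default_factory=list)
    next_steps: list[str] = field(default_factory=list)
    decisions: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, raw: str) -> "Ledger":
        return cls(**json.loads(raw))


def handoff(context: list[str], ledger: Ledger) -> tuple[list[str], str]:
    """Persist the ledger, clear the old context, and return a fresh context seeded from the ledger."""
    saved = ledger.to_json()        # in practice this would be written to disk before clearing
    context.clear()                 # drop the accumulated conversation instead of compacting it again
    restored = Ledger.from_json(saved)
    fresh = [
        f"Goal: {restored.goal}",
        "Completed: " + "; ".join(restored.done),
        "Next: " + "; ".join(restored.next_steps),
        "Decisions: " + "; ".join(restored.decisions),
    ]
    return fresh, saved
```

The fresh context is rebuilt from structured state rather than from a summary of a summary, which is one way to sidestep the signal loss from repeated compaction.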

LEANN: Privacy-First Vector Database Using 97% Less Storage

Estimated read time: 8 min

Taking a different approach to memory, LEANN enables semantic search on personal devices without cloud dependency. It computes embeddings on demand via graph-based selective recomputation, and can index 60 million text chunks in 6GB instead of 201GB. It supports MCP integrations and works with OpenAI, Ollama, or any compatible API.

What’s interesting: Privacy-preserving local AI just became practical for large datasets. Useful for anyone building personal knowledge systems or on-device RAG.
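The headline figure checks out: 6GB / 201GB ≈ 3%, a reduction of about 97%. The saving comes from recomputing embeddings on demand instead of storing them, and the general idea can be sketched as a best-first search over a proximity graph that keeps only text and edges, embedding a node only when the search reaches it. This is a simplified illustration of selective recomputation, not LEANN’s algorithm or API; `embed` stands in for whatever embedding model you use.

```python
import heapq
import math
from typing import Callable

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def lazy_graph_search(query: Vector, graph: dict[int, list[int]], texts: dict[int, str],
                      embed: Callable[[str], Vector], entry: int, k: int = 3):
    """Best-first search over a proximity graph that stores text and edges but no embeddings.

    A node's embedding is recomputed from its text only when the search reaches it.
    Returns the k best node ids and how many embeddings had to be computed.
    """
    computed: dict[int, float] = {}  # node -> similarity, filled lazily

    def score(node: int) -> float:
        if node not in computed:
            computed[node] = cosine(query, embed(texts[node]))
        return computed[node]

    frontier = [(-score(entry), entry)]  # max-heap via negated similarity
    visited = {entry}
    best: list[tuple[float, int]] = []   # min-heap holding the current top k
    while frontier:
        neg_sim, node = heapq.heappop(frontier)
        if len(best) == k and -neg_sim < best[0][0]:
            break  # nearest unexplored candidate cannot improve the top k, so stop
        heapq.heappush(best, (-neg_sim, node))
        if len(best) > k:
            heapq.heappop(best)
        for nb in graph.get(node, []):
            if nb not in visited:
                visited.add(nb)
                heapq.heappush(frontier, (-score(nb), nb))
    return [n for _, n in sorted(best, reverse=True)], len(computed)
```

The trade is compute for storage: each query pays for some embedding calls, but only the nodes the search actually touches are ever embedded.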

GW: Git Worktree Management for Parallel Development

Estimated read time: 5 min

This Rust-built CLI simplifies managing multiple git worktrees for parallel development. Commands like gw add and gw del replace verbose operations. Features include status snapshots across worktrees, branch integration via merge/squash/rebase, and shell integration for direct navigation.

The opportunity: If you’re constantly stashing changes to switch contexts, worktrees are a better workflow. This tool removes the friction of adopting them.
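GW’s own commands aren’t reproduced here. As a sketch of the raw material behind a cross-worktree status snapshot, this parses the output of plain Git’s `git worktree list --porcelain`. `parse_worktrees` and `list_worktrees` are hypothetical helpers, not part of GW, and the sketch is in Python rather than GW’s Rust.

```python
import subprocess


def parse_worktrees(porcelain: str) -> list[dict[str, str]]:
    """Parse `git worktree list --porcelain` output into one dict per worktree."""
    worktrees: list[dict[str, str]] = []
    current: dict[str, str] = {}
    for line in porcelain.splitlines():
        if not line.strip():               # a blank line ends one worktree's record
            if current:
                worktrees.append(current)
                current = {}
            continue
        key, _, value = line.partition(" ")
        if key == "branch":
            value = value.removeprefix("refs/heads/")
        current[key] = value or "true"     # flag lines such as "detached" carry no value
    if current:
        worktrees.append(current)
    return worktrees


def list_worktrees(repo: str = ".") -> list[dict[str, str]]:
    """Run git in the given repository and parse its worktree listing."""
    out = subprocess.run(["git", "-C", repo, "worktree", "list", "--porcelain"],
                         capture_output=True, text=True, check=True).stdout
    return parse_worktrees(out)
```

Each dict carries the worktree path, its `HEAD` commit, and either a short branch name or a `detached` flag, which is enough to print a one-line status per worktree.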

Z80-μLM Fits a Language Model in 40KB of RAM

Estimated read time: 6 min

For a completely different optimization challenge, Z80-μLM runs conversational AI on a 1976 processor with 64KB RAM, packing inference, weights, and chat UI into 40KB. Technical innovations include 2-bit quantization, trigram hash encoding, and integer-only arithmetic.

Why this matters: A fascinating demonstration that challenges assumptions about minimum AI requirements. Sometimes constraints drive the most creative engineering.
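Two of the listed techniques, 2-bit weights with integer-only arithmetic and trigram hashing of the input, can be sketched in a few lines. The four levels {-2, -1, 0, 1}, the shared scale, the four-codes-per-byte packing, the 16-bit hash, and the bucket count are assumptions for illustration, not Z80-μLM’s actual encoding.

```python
def quantize_2bit(weights: list[float]) -> tuple[list[int], float]:
    """Round each weight to one of four integer levels {-2, -1, 0, 1} times a shared scale."""
    peak = max(abs(w) for w in weights)
    scale = peak / 2 if peak else 1.0  # the largest magnitudes land on the outer levels
    codes = [max(-2, min(1, round(w / scale))) for w in weights]
    return codes, scale


def pack(codes: list[int]) -> bytes:
    """Pack four 2-bit codes per byte, offset by +2 so each code fits in 0..3."""
    out = bytearray()
    for i in range(0, len(codes), 4):
        byte = 0
        for j, c in enumerate(codes[i:i + 4]):
            byte |= (c + 2) << (2 * j)
        out.append(byte)
    return bytes(out)


def unpack(data: bytes, n: int) -> list[int]:
    """Recover n codes using only shifts and masks."""
    return [((data[i // 4] >> (2 * (i % 4))) & 0b11) - 2 for i in range(n)]


def int_dot(codes: list[int], x: list[int]) -> int:
    """Integer-only multiply-accumulate; no floating point is involved."""
    return sum(c * v for c, v in zip(codes, x))


def trigram_features(text: str, buckets: int = 128) -> list[int]:
    """Hash each 3-character window into a fixed-size count vector using integer math."""
    counts = [0] * buckets
    padded = f"  {text.lower()} "
    for i in range(len(padded) - 2):
        h = 0
        for ch in padded[i:i + 3]:
            h = (h * 31 + ord(ch)) & 0xFFFF  # wrap at 16 bits, as a small CPU register would
        counts[h % buckets] += 1
    return counts
```

In this sketch the floating-point scale is only used during offline quantization; inference needs just `unpack`, `int_dot`, and the hash, all small-integer operations.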