Weekly review: Context Is King: Agent Specs, MCP Tools, and MIT's Long-Context Reality Check

The week's curated set of relevant AI Tutorials, Tools, and News

Welcome back to Altered Craft’s weekly AI review for developers. We appreciate you making this part of your week. A clear theme emerges this edition: context management has become the defining skill for effective agent work. You’ll find practical guides on structuring specs that don’t overwhelm AI agents, MCP tools that slash token consumption by 99%, and MIT research revealing why “long context” marketing claims fall short. Plus, the Redis creator makes a compelling case for embracing AI tools. Here’s what caught our attention.

TUTORIALS & CASE STUDIES

Writing Better Specs for AI Coding Agents

Estimated read time: 8 min

Addy Osmani explains why massive specifications fail with AI agents: context limitations cause performance to degrade as requirements pile up. The solution involves modular, smart specs structured like PRDs with six core sections, three-tier boundaries (always/ask/never), and self-verification checkpoints.

Why this matters: GitHub’s analysis of 2,500+ agent configs confirms these patterns. If you’re frustrated with inconsistent agent output, structured specs may be the missing ingredient.
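To make the three-tier idea concrete, here is a minimal Python sketch of how a spec's always/ask/never boundaries could be checked mechanically before an agent acts. The `Boundaries` class, the `verb:target` action strings, and the fallback to "ask" for unlisted actions are our own assumptions for illustration. They are not the format Osmani or GitHub prescribes.

```python
import fnmatch
from dataclasses import dataclass, field
from enum import Enum


class Tier(Enum):
    ALWAYS = "always"  # agent may proceed without asking
    ASK = "ask"        # agent must get approval first
    NEVER = "never"    # agent must refuse


@dataclass
class Boundaries:
    """Hypothetical boundary section of a spec: glob-style action patterns per tier."""
    always: list[str] = field(default_factory=list)
    ask: list[str] = field(default_factory=list)
    never: list[str] = field(default_factory=list)

    def classify(self, action: str) -> Tier:
        # Check the strictest tier first so NEVER overrides ASK and ALWAYS.
        for tier, patterns in (
            (Tier.NEVER, self.never),
            (Tier.ASK, self.ask),
            (Tier.ALWAYS, self.always),
        ):
            if any(fnmatch.fnmatch(action, p) for p in patterns):
                return tier
        # Anything the spec does not mention falls back to ASK.
        return Tier.ASK


bounds = Boundaries(
    always=["run:pytest*", "edit:src/*"],
    ask=["edit:pyproject.toml", "run:pip install*"],
    never=["edit:.env*", "run:git push*"],
)

print(bounds.classify("edit:src/app.py").value)      # always
print(bounds.classify("run:git push origin").value)  # never
print(bounds.classify("run:docker build .").value)   # ask
```

Defaulting unknown actions to "ask" is a design choice: it keeps the agent from silently guessing when the spec is incomplete.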

Cursor’s Guide to Effective Agent Collaboration

Estimated read time: 7 min

Cursor’s official best practices also emphasize planning before implementation, identifying it as the habit with the greatest impact. The guide recommends Plan Mode (Shift+Tab) to research relevant files and approve a plan before any code is written, a fresh context when switching tasks, and test-driven development to give the agent clear success targets.

The takeaway: High-performing developers write specific prompts, add rules only after observing repeated mistakes, and treat agents as capable collaborators rather than code generators.

The Ralph Wiggum Technique for Autonomous AI Development

Estimated read time: 10 min

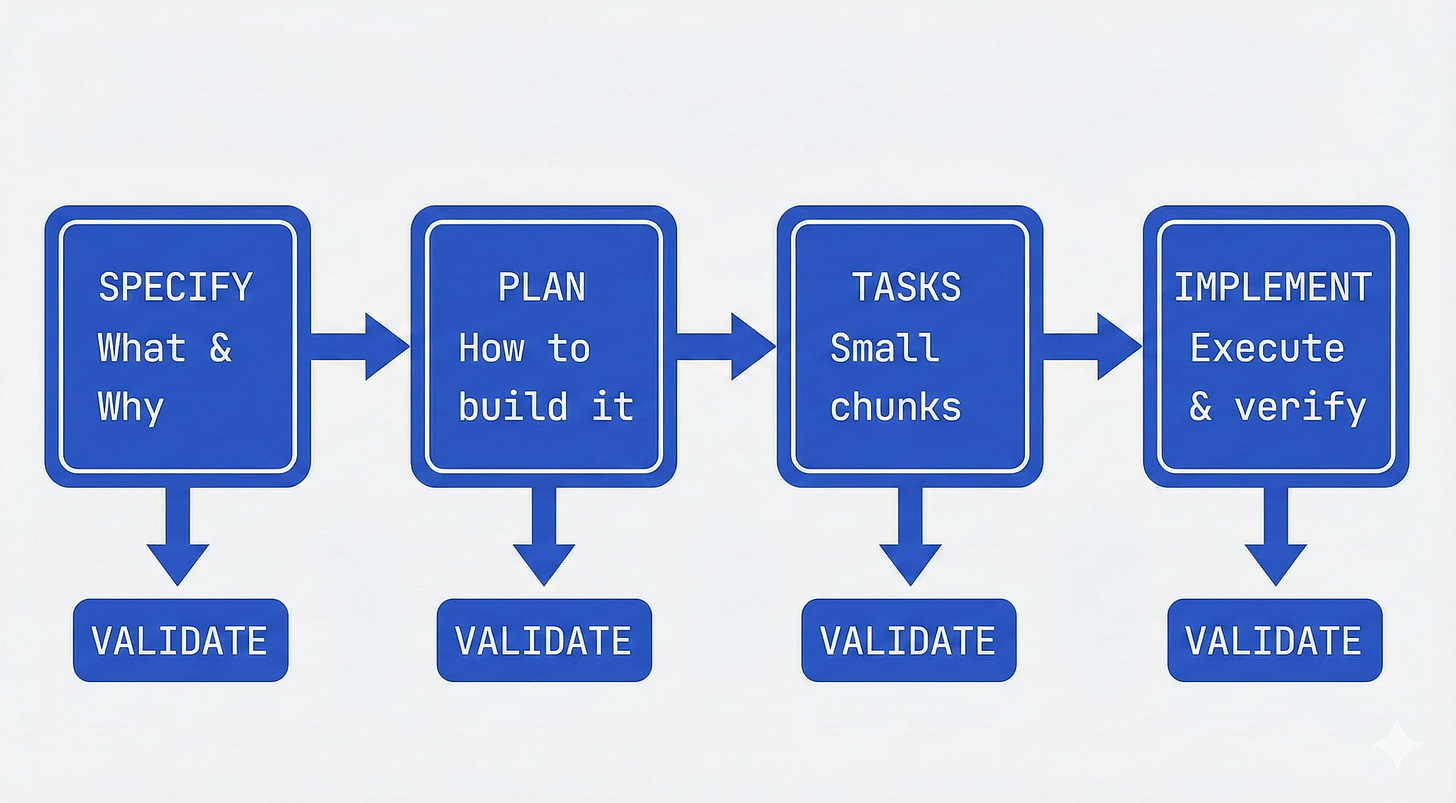

Taking planning further into autonomous territory, this methodology uses a three-phase funnel: define requirements through JTBD specifications, generate implementation plans via gap analysis, then execute tasks in a continuous loop with cleared context between iterations for peak efficiency.

What’s interesting: By running one task per loop and letting the AI self-correct through iteration, developers report achieving production-quality output at minimal supervision cost.
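The execution phase can be sketched as a plain loop. In this minimal Python illustration, `run_agent` and `verify` are hypothetical callables you would wire to your own agent CLI and test suite. The point is that each iteration rebuilds the context from the spec and a single task rather than carrying the previous transcript forward. The requirements and gap-analysis phases are not shown.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Task:
    description: str
    done: bool = False
    attempts: int = 0


def ralph_loop(
    spec: str,
    plan: list[Task],
    run_agent: Callable[[list[str]], str],
    verify: Callable[[Task, str], bool],
    max_attempts: int = 3,
) -> list[Task]:
    """Work through the plan one task per iteration with a fresh context each time."""
    while True:
        pending = [t for t in plan if not t.done and t.attempts < max_attempts]
        if not pending:
            return plan
        task = pending[0]
        # Fresh context: only the spec and the current task, no earlier transcript.
        context = [spec, f"Current task: {task.description}"]
        output = run_agent(context)
        task.attempts += 1
        # A failed check leaves the task pending, so a later iteration retries it.
        task.done = verify(task, output)


# Hypothetical stand-ins: the "agent" fails once on its second call, then recovers.
calls: list[int] = []


def fake_agent(context: list[str]) -> str:
    calls.append(len(context))
    return "broken" if len(calls) == 2 else "ok"


def fake_verify(task: Task, output: str) -> bool:
    return output == "ok"


plan = [Task("add CLI flag"), Task("write tests")]
for t in ralph_loop("Spec: ...", plan, fake_agent, fake_verify):
    print(t.description, t.done, t.attempts)
# add CLI flag True 1
# write tests True 2
```

Note that the context passed to the agent stays the same size on every call, which is what the cleared-context approach is meant to guarantee.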

Agent Design Patterns for Context-Efficient Systems

Estimated read time: 6 min

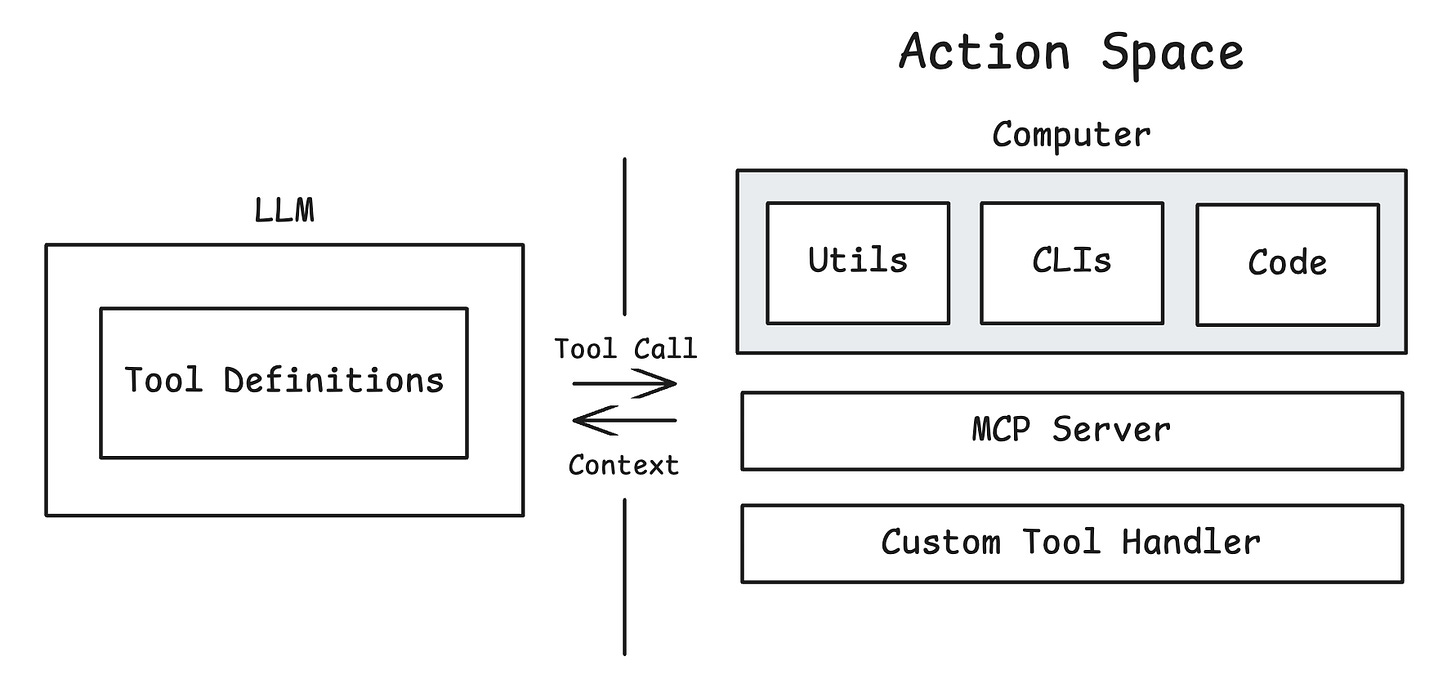

Extending our coverage of Cursor’s dynamic context discovery[1] from last week, this architectural guide treats context as a finite resource with diminishing returns. Key patterns include hierarchical action spaces with 10-20 atomic tools, progressive information disclosure, and filesystem offloading for intermediate results. Sub-agent isolation prevents any single context window from becoming prohibitive.

[1] Cursor’s Dynamic Context Discovery Reduces Agent Tokens by 47%

Key point: Prompt caching optimization can make higher-capacity models cheaper than alternatives, inverting traditional cost assumptions for complex agent workflows.
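Filesystem offloading and progressive disclosure can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions (a hypothetical 500-character inline limit, a 200-character preview, and the helper names `offload_if_large` and `read_slice`), not code from the guide. Large tool results go to disk, and the context receives only a pointer plus a preview that the agent can expand slice by slice.

```python
import hashlib
import tempfile
from pathlib import Path

MAX_INLINE_CHARS = 500  # hypothetical threshold for what stays in context
PREVIEW_CHARS = 200     # hypothetical preview size


def offload_if_large(tool_output: str, workdir: Path) -> str:
    """Return small outputs unchanged; write large ones to disk and return a pointer."""
    if len(tool_output) <= MAX_INLINE_CHARS:
        return tool_output
    digest = hashlib.sha256(tool_output.encode()).hexdigest()[:12]
    path = workdir / f"result-{digest}.txt"
    path.write_text(tool_output)
    header = f"[{len(tool_output)} chars saved to {path}; preview follows]"
    return f"{header}\n{tool_output[:PREVIEW_CHARS]}"


def read_slice(path: Path, start: int, length: int) -> str:
    """Progressive disclosure: let the agent pull only the part it needs."""
    return path.read_text()[start:start + length]


with tempfile.TemporaryDirectory() as tmp:
    workdir = Path(tmp)
    print(offload_if_large("42 rows matched", workdir))  # 42 rows matched
    pointer = offload_if_large("row\n" * 10_000, workdir)
    print(pointer.splitlines()[0])  # header with size and file path
    saved = next(workdir.glob("result-*.txt"))
    print(repr(read_slice(saved, 0, 8)))  # 'row\nrow\n'
```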

Building Agents with Gemini’s Interactions API

Estimated read time: 5 min

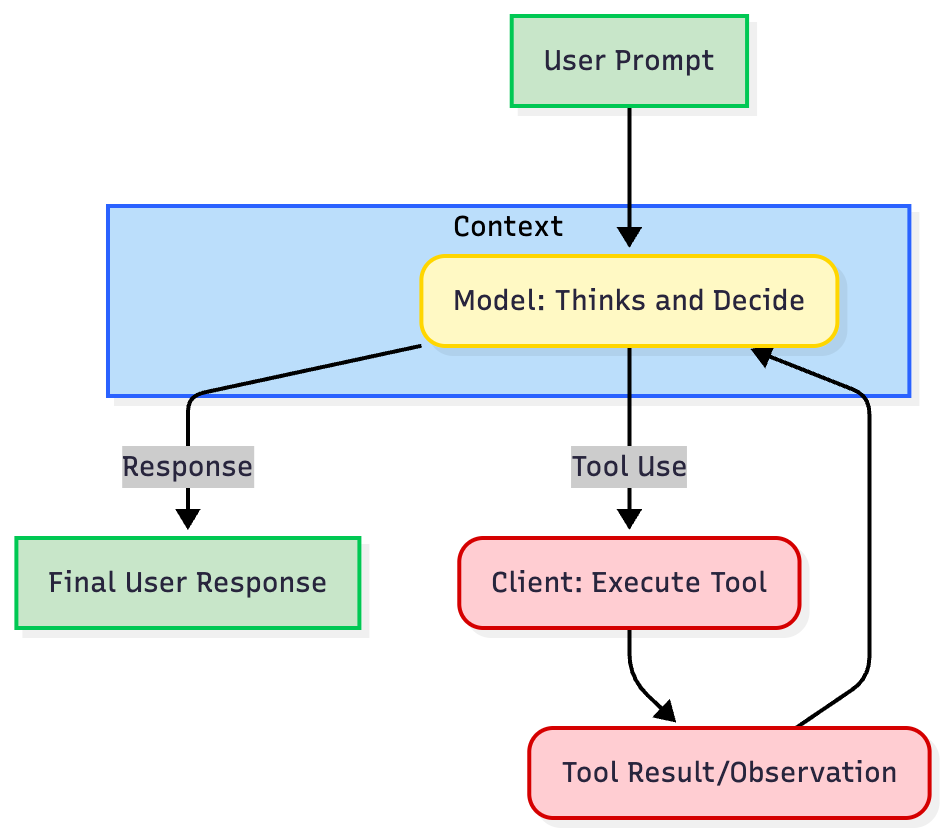

Putting these patterns into practice, this tutorial demonstrates that an AI agent is fundamentally “an LLM running in a loop, equipped with tools.” The Interactions API manages conversation state server-side using previous_interaction_id, and the guide walks through building functional agents in under 100 lines of Python.

The opportunity: Server-side state management eliminates manual history tracking, making this a clean starting point for developers new to agent architecture.
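To show why server-side state simplifies the loop, here is a self-contained Python sketch. `FakeInteractionsServer` is a hypothetical in-memory stand-in, not the Gemini SDK, and its toy "model" and `get_weather` tool are invented. Only the idea of passing `previous_interaction_id` instead of resending history comes from the tutorial; check the official Interactions API documentation for the real client calls.

```python
import itertools
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Interaction:
    id: str
    output: dict


@dataclass
class FakeInteractionsServer:
    """Hypothetical stand-in for a stateful API: the server keeps the history,
    so each request carries only the new turn plus previous_interaction_id."""
    histories: dict = field(default_factory=dict)
    ids: itertools.count = field(default_factory=itertools.count)

    def create(self, new_input: dict, previous_interaction_id: Optional[str] = None) -> Interaction:
        history = list(self.histories.get(previous_interaction_id, []))
        history.append(new_input)
        output = self._toy_model(history)
        history.append(output)
        new_id = f"int-{next(self.ids)}"
        self.histories[new_id] = history
        return Interaction(new_id, output)

    @staticmethod
    def _toy_model(history: list) -> dict:
        # Toy policy: request the tool for a user turn, answer after a tool result.
        last = history[-1]
        if last["role"] == "user":
            return {"type": "tool_call", "name": "get_weather", "args": {"city": last["text"]}}
        return {"type": "text", "text": f"Forecast: {last['result']}"}


TOOLS = {"get_weather": lambda city: f"sunny in {city}"}  # hypothetical tool


def run_agent(server: FakeInteractionsServer, question: str, max_steps: int = 5) -> str:
    """An LLM running in a loop, equipped with tools."""
    interaction = server.create({"role": "user", "text": question})
    for _ in range(max_steps):
        out = interaction.output
        if out["type"] == "text":
            return out["text"]
        result = TOOLS[out["name"]](**out["args"])
        # Only the tool result is sent; the server already holds earlier turns.
        interaction = server.create(
            {"role": "tool", "result": result},
            previous_interaction_id=interaction.id,
        )
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent(FakeInteractionsServer(), "Lisbon"))  # Forecast: sunny in Lisbon
```

The client never assembles a message list itself; each call is just "new turn plus a pointer to the last interaction."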

TOOLS

Anthropic’s Claude Cowork Brings Agents to Everyone

Estimated read time: 5 min

Simon Willison reviews Claude Cowork, Anthropic’s desktop agent extending Claude Code to non-technical users. Built on containerized sandboxing using Apple’s VZVirtualMachine framework, it lets users assign folders for AI-driven file analysis and web research. Available to Claude Max subscribers at $100-200/month.

Worth noting: The architecture demonstrates how agent tools are evolving beyond developer-only interfaces, while Willison’s prompt injection concerns remain relevant for anyone building similar systems.

Fly.io Launches Sprites for Sandboxed AI Agent Development

Estimated read time: 4 min

Also addressing agent sandboxing, Fly.io introduces Sprites.dev, stateful sandbox environments with ~300ms snapshots and scale-to-zero pricing. Because Sprites ship with Claude Code pre-installed and configurable network policies, developers can test untrusted agent-generated code without risking their main systems.

What this enables: Safe experimentation with autonomous coding agents. The checkpoint/restore capability is particularly useful for debugging agent failures without losing state.

MCP Apps Brings Interactive UI to AI Tools

Estimated read time: 4 min

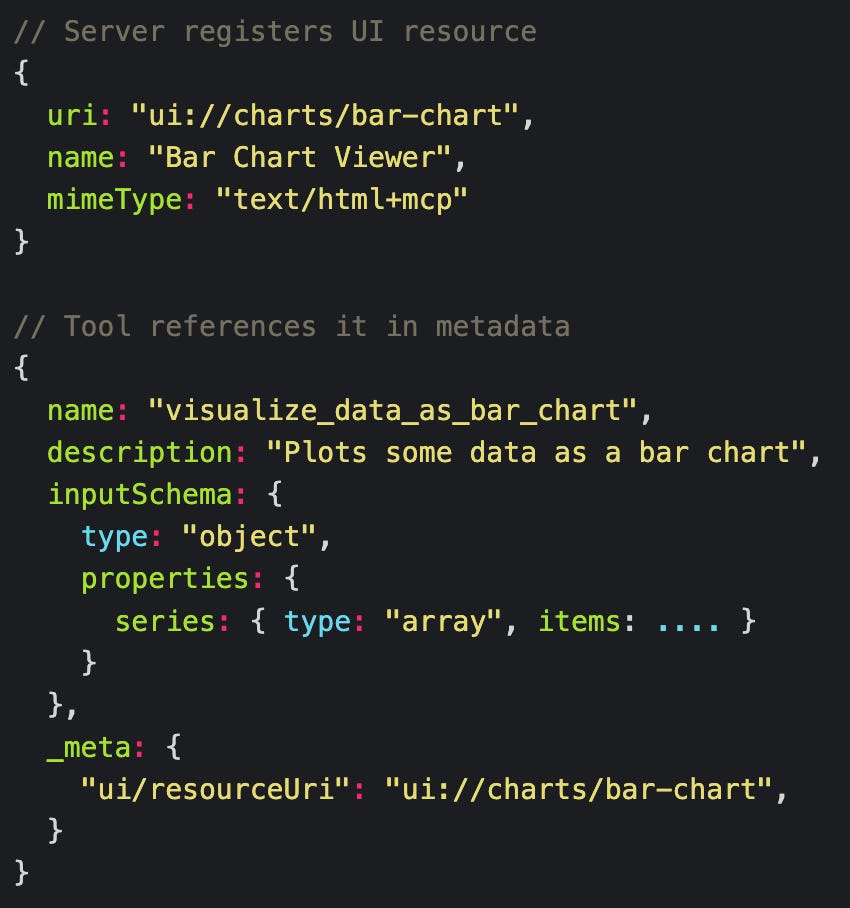

Turning to the Model Context Protocol ecosystem: Anthropic, OpenAI, and the MCP-UI community have introduced SEP-1865, which lets MCP servers display complex visualizations through sandboxed HTML components that communicate via JSON-RPC over postMessage, with defense-in-depth security and backward compatibility.

Why now: Previously limited to text and structured data, MCP tools can finally show charts, forms, and rich interfaces. This opens new categories of interactive agent applications.
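Since the sandboxed UI and its host talk JSON-RPC over `postMessage`, a real implementation would be JavaScript in the browser. This Python sketch only illustrates the JSON-RPC 2.0 envelope and an allowlist-style dispatcher on the host side. The `chart/select_point` method and the helper names are hypothetical and are not taken from SEP-1865; the error codes are the standard JSON-RPC 2.0 ones.

```python
import itertools
import json
from typing import Callable, Optional

_request_ids = itertools.count(1)


def make_request(method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request, as a UI component might post it to its host."""
    return json.dumps({"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params})


def _error(msg_id, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}})


def handle_message(raw: str, handlers: dict[str, Callable[[dict], object]]) -> Optional[str]:
    """Host-side dispatch: only methods present in `handlers` (an allowlist) can run."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError:
        return _error(None, -32700, "Parse error")
    if not isinstance(msg, dict) or msg.get("jsonrpc") != "2.0" or "method" not in msg:
        return _error(msg.get("id") if isinstance(msg, dict) else None, -32600, "Invalid Request")
    is_notification = "id" not in msg  # notifications never get a response
    handler = handlers.get(msg["method"])
    if handler is None:
        return None if is_notification else _error(msg["id"], -32601, "Method not found")
    result = handler(msg.get("params", {}))
    return None if is_notification else json.dumps({"jsonrpc": "2.0", "id": msg["id"], "result": result})


handlers = {"chart/select_point": lambda params: {"selected": params["x"]}}  # hypothetical method
print(handle_message(make_request("chart/select_point", {"x": 3}), handlers))
# {"jsonrpc": "2.0", "id": 1, "result": {"selected": 3}}
print(json.loads(handle_message(make_request("fs/delete", {}), handlers))["error"]["code"])  # -32601
```

Rejecting anything outside the allowlist is one layer of the defense-in-depth idea: even a compromised UI component can only ask the host for operations the host chose to expose.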

Anthropic Introduces Tool Search for Massive Tool Libraries

Estimated read time: 6 min

Also in the MCP space, Anthropic’s tool search enables Claude to work with thousands of tools through dynamic discovery. Instead of consuming 10-20K tokens loading 50 tool definitions upfront, Claude searches your catalog using regex or BM25 queries and loads only what it needs.

The context: As MCP ecosystems grow, context window management becomes critical. This feature supports deferred loading, custom embeddings, and scales to enterprise tool libraries.
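As a rough illustration of the BM25 half of that search, here is a small from-scratch Okapi BM25 ranker over tool descriptions in Python. It is not Anthropic's implementation or API: the catalog is hypothetical, and `k1=1.5`, `b=0.75` are conventional defaults. The idea is that only the top-ranked tools' full definitions would then be loaded into context.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25Index:
    """Okapi BM25 over tool descriptions (k1 and b are common defaults)."""

    def __init__(self, docs: dict[str, str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.names = list(docs)
        self.tfs = [Counter(tokenize(docs[name])) for name in self.names]
        self.lengths = [sum(tf.values()) for tf in self.tfs]
        self.avgdl = sum(self.lengths) / len(self.lengths)
        df = Counter(term for tf in self.tfs for term in tf)  # document frequency
        n = len(self.names)
        self.idf = {t: math.log((n - d + 0.5) / (d + 0.5) + 1) for t, d in df.items()}

    def search(self, query: str, top_k: int = 3) -> list[str]:
        terms = tokenize(query)
        scored = []
        for name, tf, dl in zip(self.names, self.tfs, self.lengths):
            score = 0.0
            for term in terms:
                f = tf.get(term, 0)
                if f:
                    # Length normalization: long descriptions get less credit per match.
                    norm = self.k1 * (1 - self.b + self.b * dl / self.avgdl)
                    score += self.idf[term] * f * (self.k1 + 1) / (f + norm)
            if score > 0:
                scored.append((score, name))
        scored.sort(reverse=True)
        return [name for _, name in scored[:top_k]]


# Hypothetical catalog: only names and short descriptions are indexed up front.
CATALOG = {
    "github_create_issue": "Create a new issue in a GitHub repository",
    "github_list_pull_requests": "List open pull requests for a GitHub repository",
    "slack_post_message": "Post a message to a Slack channel",
    "jira_create_ticket": "Create a Jira ticket in a project",
}

index = BM25Index(CATALOG)
print(index.search("github issue", top_k=2))
# ['github_create_issue', 'github_list_pull_requests']
```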

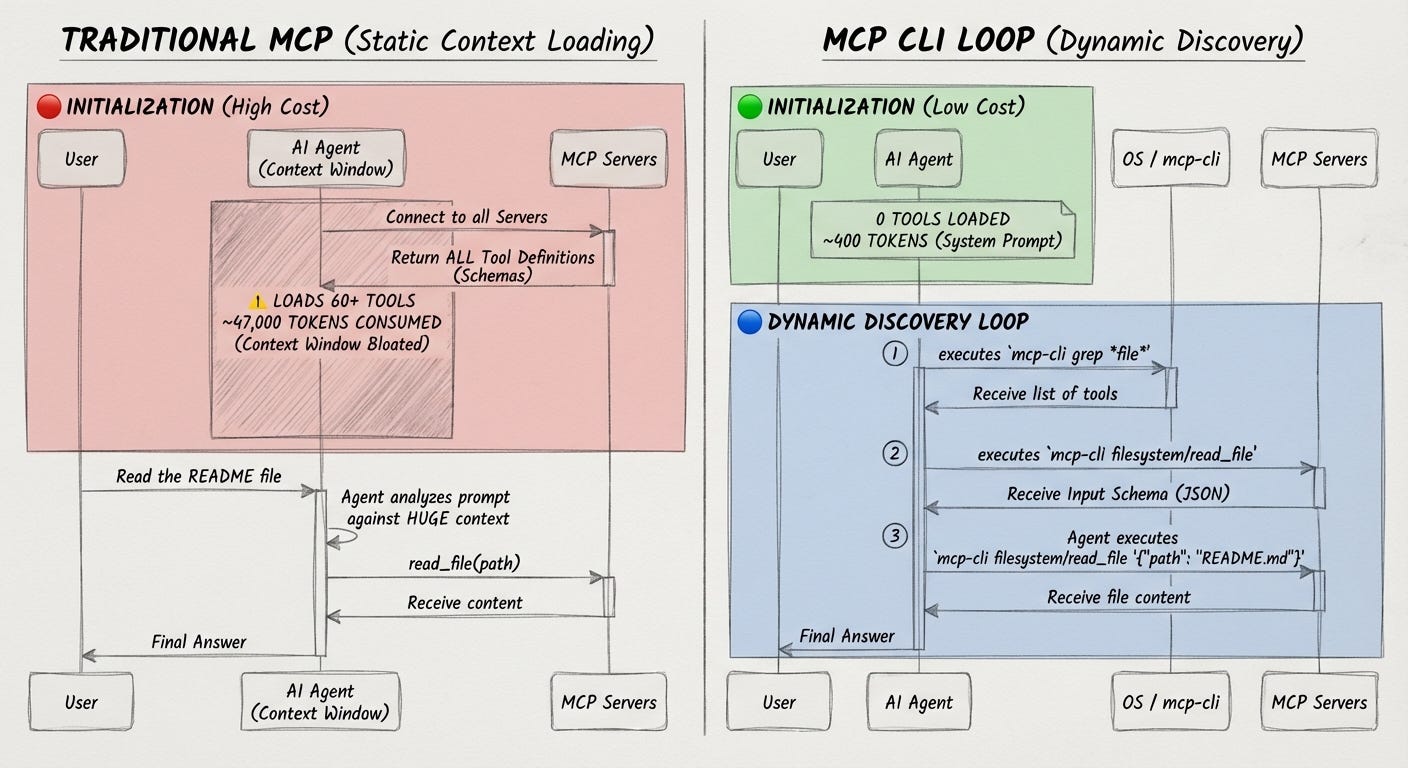

MCP CLI Slashes Context Window Consumption by 99%

Estimated read time: 4 min

Addressing the same context bloat problem, MCP CLI enables dynamic discovery of Model Context Protocol servers. Loading every schema upfront, as traditional integrations do, consumes ~47,000 tokens for 60 tools across 6 servers; with dynamic discovery, the same setup requires only ~400 tokens.

Key point: The single-binary tool supports both local and remote servers, making it practical for teams running multiple MCP integrations without overwhelming their context budgets.
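The arithmetic behind the headline number, plus the lazy-loading pattern that produces it, fits in a short Python sketch. `LazyToolRegistry`, the synthetic catalog, and the four-characters-per-token estimate are assumptions for illustration; they do not mirror MCP CLI's actual commands or output. Using the article's figures, 47,000 tokens across 60 tools is roughly 780 tokens per tool definition, and dropping to ~400 tokens is a reduction of about 99.1%.

```python
import json


def estimate_tokens(obj) -> int:
    """Rough heuristic (an assumption, not a real tokenizer): ~4 characters per token."""
    return max(1, len(json.dumps(obj)) // 4)


class LazyToolRegistry:
    """Hypothetical registry: cheap listings first, full schemas only on request."""

    def __init__(self, servers: dict[str, dict[str, dict]]):
        self._servers = servers
        self.tokens_spent = 0

    def list_servers(self) -> list[str]:
        names = sorted(self._servers)
        self.tokens_spent += estimate_tokens(names)
        return names

    def list_tools(self, server: str) -> list[str]:
        names = sorted(self._servers[server])
        self.tokens_spent += estimate_tokens(names)
        return names

    def get_schema(self, server: str, tool: str) -> dict:
        schema = self._servers[server][tool]
        self.tokens_spent += estimate_tokens(schema)
        return schema


def synthetic_catalog(n_servers: int = 6, tools_per_server: int = 10) -> dict:
    """Made-up schemas of plausible size; not real MCP servers."""
    return {
        f"server_{s}": {
            f"tool_{t}": {
                "name": f"tool_{t}",
                "description": "Does something useful with the given input. " * 10,
                "inputSchema": {"type": "object", "properties": {"query": {"type": "string"}}},
            }
            for t in range(tools_per_server)
        }
        for s in range(n_servers)
    }


catalog = synthetic_catalog()
upfront = estimate_tokens(catalog)  # every schema loaded before the first turn

registry = LazyToolRegistry(catalog)
server = registry.list_servers()[0]
tool = registry.list_tools(server)[0]
registry.get_schema(server, tool)  # only one full schema enters context
print(upfront, registry.tokens_spent)

# The article's own figures: ~47,000 tokens upfront vs ~400 with discovery.
print(f"{1 - 400 / 47_000:.1%}")  # 99.1%
```

The synthetic token counts will differ from the article's, but the shape is the same: listings cost tens of tokens, while the upfront approach pays for every schema whether or not it is used.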

Vercel Releases React Best Practices for AI Agents

Estimated read time: 5 min

Moving from infrastructure to application frameworks, Vercel’s react-best-practices encapsulates a decade of optimization knowledge into 40+ rules designed for AI agents. Each rule carries an impact rating from CRITICAL to LOW, and together the rules target async waterfalls, bundle bloat, and over-rendering.

What this enables: The framework compiles into AGENTS.md for consistent application across large codebases, letting AI-assisted refactoring tools apply institutional knowledge automatically.
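Vercel's rules are written for React and JavaScript, but the async waterfall they target is language-agnostic. To stay consistent with the other examples here, this Python `asyncio` sketch (our own illustration, not one of Vercel's rules) contrasts awaiting independent requests one after another with starting them together. `fetch` is a hypothetical stand-in for a network call.

```python
import asyncio
import time


async def fetch(name: str, delay: float) -> str:
    await asyncio.sleep(delay)  # stand-in for a network request
    return name


async def waterfall() -> list[str]:
    # Each await finishes before the next request starts, even though
    # the two requests do not depend on each other.
    user = await fetch("user", 0.1)
    posts = await fetch("posts", 0.1)
    return [user, posts]


async def parallel() -> list[str]:
    # Independent requests start together; total time is about the slowest one.
    user, posts = await asyncio.gather(fetch("user", 0.1), fetch("posts", 0.1))
    return [user, posts]


for fn in (waterfall, parallel):
    start = time.perf_counter()
    result = asyncio.run(fn())
    print(f"{fn.__name__}: {result} in {time.perf_counter() - start:.2f}s")
```

Expect roughly 0.2s for the waterfall and 0.1s for the parallel version; exact timings vary by machine.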

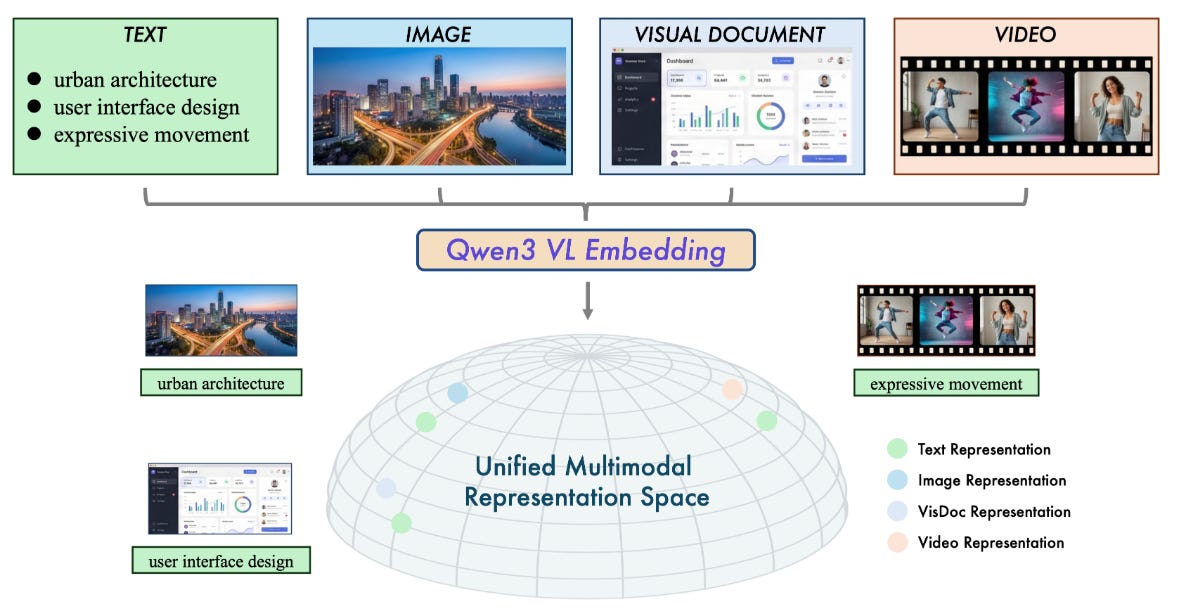

Qwen3-VL: Open-Source Multimodal Embedding and Reranking Models

Estimated read time: 7 min

For developers building retrieval systems, Qwen released Qwen3-VL-Embedding and Qwen3-VL-Reranker, unifying text, image, screenshot, and video retrieval in a single embedding space. Available in 2B and 8B variants with 30+ language support and state-of-the-art MMEB-v2 benchmark results.

The opportunity: Production-ready multimodal RAG without managing separate pipelines for different content types. Quantization and customizable dimensions make deployment practical.
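Because all modalities share one embedding space, retrieval reduces to ranking vectors by similarity regardless of what the items originally were. The Python sketch below assumes (we have not confirmed how Qwen exposes "customizable dimensions") that shorter embeddings are obtained Matryoshka-style by keeping a prefix of the vector and re-normalizing. The four-dimensional toy vectors are invented; real embeddings come from the model and have far more dimensions.

```python
import math


def truncate_and_normalize(vec: list[float], dim: int) -> list[float]:
    """Keep the first `dim` components and rescale to unit length."""
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        raise ValueError("vector is all zeros after truncation")
    return [x / norm for x in head]


def rank(query: list[float], corpus: dict[str, list[float]], dim: int) -> list[str]:
    """Rank items of any modality by cosine similarity in one shared space."""
    q = truncate_and_normalize(query, dim)
    scored = []
    for item_id, vec in corpus.items():
        v = truncate_and_normalize(vec, dim)
        scored.append((sum(a * b for a, b in zip(q, v)), item_id))  # cosine of unit vectors
    return [item_id for _, item_id in sorted(scored, reverse=True)]


# Invented toy vectors standing in for model outputs.
corpus = {
    "screenshot:login_page": [0.9, 0.1, 0.3, 0.0],
    "text:pricing_doc": [0.1, 0.8, 0.0, 0.4],
    "video:onboarding": [0.7, 0.2, 0.1, 0.6],
}
query = [1.0, 0.0, 0.2, 0.1]

print(rank(query, corpus, dim=4))  # full vectors
print(rank(query, corpus, dim=2))  # cheaper truncated vectors
```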

NEWS & EDITORIALS

Redis Creator Urges Developers to Embrace AI Tools

Estimated read time: 5 min

Antirez, creator of Redis, argues that AI has fundamentally changed programming. He demonstrates completing significant projects in hours rather than weeks, including UTF-8 support, Redis test fixes, and a 700-line C library. His key insight: stop asking “what to code” and focus on “what to do.”

Worth noting: Antirez emphasizes testing AI tools for weeks, not minutes, to understand their true potential. The learning curve is real, but the productivity gains compound.

Anthropic’s Economic Index Reveals AI Productivity Patterns

Estimated read time: 8 min

Supporting these productivity claims with data, Anthropic’s January 2026 report analyzes Claude usage across 3,000+ work tasks. Coding dominates at 34-46% of traffic. Key finding: success decreases with complexity, but complex tasks yield greater time savings (12x speedup for advanced work).

What’s interesting: US regional AI adoption is converging 10x faster than historical tech diffusion patterns, suggesting rapid normalization of AI-assisted development practices.

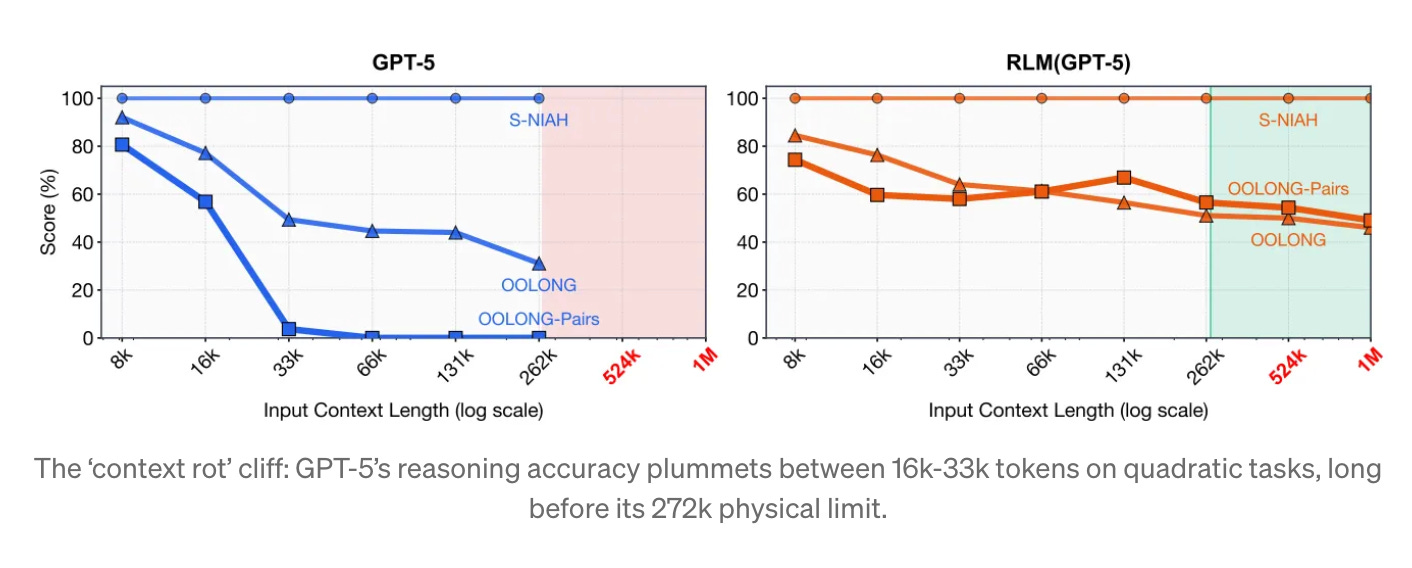

MIT Researchers Introduce Recursive Language Models

Estimated read time: 15 min

Addressing why complex tasks remain challenging, MIT researchers show that GPT-5’s accuracy collapses at 33k tokens on complex reasoning tasks, far below its advertised 272k-token limit. Their solution, Recursive Language Models, spawns sub-LLMs with pristine context windows for parallel processing.

Why this matters: RLM maintains strong performance past 1M tokens where traditional transformers fail. This architectural approach aligns with the agent design patterns covered earlier in this edition.
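The core idea, that no single call ever sees more than a small, clean slice of the input, can be illustrated with a recursive divide-and-conquer sketch in Python. This is a simplification under our own assumptions: as we understand the MIT approach, the model itself decides how to inspect and split the context, whereas here a fixed halving rule stands in for that. `sub_llm` is a hypothetical callable (a toy line counter in the demo) rather than a real model.

```python
from typing import Callable


def recursive_answer(
    context: str,
    question: str,
    sub_llm: Callable[[str, str], str],
    combine: Callable[[list[str]], str],
    budget_chars: int = 1_000,
) -> str:
    """Answer a question over a context larger than one call's budget."""
    if budget_chars < 1:
        raise ValueError("budget_chars must be positive")
    if len(context) <= budget_chars:
        # Base case: a sub-call whose context holds only this chunk.
        return sub_llm(context, question)
    mid = len(context) // 2
    # Split at a newline before the midpoint so no line is cut in half.
    cut = context.rfind("\n", 0, mid)
    if cut <= 0:
        cut = mid
    # The two halves are independent, so these calls could also run in parallel.
    answers = [
        recursive_answer(part, question, sub_llm, combine, budget_chars)
        for part in (context[:cut], context[cut:])
    ]
    return combine(answers)


# Toy sub-model: counts lines that mention ERROR in the chunk it is given.
seen_sizes: list[int] = []


def count_errors(chunk: str, question: str) -> str:
    seen_sizes.append(len(chunk))
    return str(sum(1 for line in chunk.splitlines() if "ERROR" in line))


log = "\n".join("ERROR disk full" if i % 10 == 0 else f"INFO ok {i}" for i in range(1_000))
total = recursive_answer(
    log, "How many ERROR lines?", count_errors, lambda xs: str(sum(int(x) for x in xs))
)
print(total)  # 100
print(max(seen_sizes) <= 1_000)  # True: no sub-call exceeded the budget
```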

Voice Computing Finally Ready for Mainstream Adoption

Estimated read time: 6 min

Shifting from how we code to how we interact, M.G. Siegler argues voice-controlled computing is finally viable after 15 years of false starts. The difference: LLMs provide contextual understanding far exceeding previous speech assistants. OpenAI, Amazon, and others are building voice-first form factors around this capability.

The context: Wearables, smart glasses, and pendants are launching with voice-first interfaces. For developers, this signals new interaction patterns worth exploring beyond traditional GUIs.

Mozilla Launches Open Source AI Strategy

Estimated read time: 7 min

On the question of who controls these AI systems, Mozilla positions open-source AI as a counterweight to closed platforms. The organization identifies a developer experience problem: closed APIs offer convenience while the open ecosystem remains fragmented. Mozilla’s 2026 initiatives include mozilla.ai’s any-suite modular framework.

The takeaway: Success requires making open tools genuinely superior, not just more principled. Mozilla’s approach focuses on developer experience gaps where openness can reset defaults.