The Ralph Wiggum Agent Loop Is Really About Engineering Discipline

A bash loop, a $297 API bill, and claims of a $50,000 contract delivered. The Ralph Wiggum technique went from meetup curiosity to Anthropic plugin in six months. Geoffrey Huntley’s approach captured developer attention through elegant simplicity: run the agent in a loop until the work is done.

But the viral spread has obscured what actually makes Ralph work. The bash loop gets the attention. The methodology behind it deserves it more.

Engineering Discipline, Applied to Agents

Ralph didn’t emerge from AI researchers or startup demos. It came from an experienced software engineer applying familiar discipline to a new tool. The principles aren’t novel: spec-driven development, automated testing as validation, incremental progress with clean handoffs. What’s interesting is seeing these engineering fundamentals resurface as the answer to agent reliability.

Anthropic’s own guidance on effective harnesses for long-running agents describes nearly identical patterns: fresh context per session, filesystem as memory, testing requirements for verification. Ralph is one implementation of a broader emerging consensus.

As AI Hero’s practical guide puts it: “Every tip works for human developers too. Feedback loops, small steps, explicit scope. These aren’t AI-specific techniques. They’re good engineering. Ralph makes them non-negotiable.” The philosophy: “Sit on the loop, not in it.”

What Ralph Actually Is

At its core, Ralph is a meta-harness. It wraps an existing agent harness like Claude Code and adds structure: iteration, persistence, and objective completion criteria. In effect, it is a minimal spec-driven development framework: specifications drive everything, and the loop handles execution.

The name comes from The Simpsons character Ralph Wiggum. Perpetually confused, always making mistakes, but never stopping. As Huntley describes it, the technique is “deterministically bad in an undeterministic world.”

The basic mechanism is deceptively simple:

```bash
# Feed the same prompt to a fresh, non-interactive Claude Code session, forever.
while :; do cat PROMPT.md | claude -p ; done
```

The excitement over the loop obscures the critical insight: what happens between iterations. Each iteration spawns a fresh context window. Memory persists only through the filesystem: git commits, markdown files, the codebase itself.

This distinction matters more than it appears. As The Real Ralph Wiggum Loop explains, this avoids what practitioners call “context rot.” When conversation history accumulates in a single session, the AI eventually compacts that history and loses information. Fresh context per iteration sidesteps this entirely. The how-to-ralph-wiggum repository captures the principle as: “Tight tasks + 1 task per loop = 100% smart zone context utilization.”

The Core Problem It Solves

AI coding agents have a fundamental behavioral pattern: they stop working when they think they’re done, not when the task is actually complete. This manifests as premature exits, declarations of success before meeting objective criteria, and fragility when complex tasks can’t complete in a single shot.

Ralph addresses this by making iteration the default rather than the exception. The agent runs until an objective signal confirms the work is complete. Tests pass. Build succeeds. An explicit completion marker appears.
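The same idea can be sketched in bash by giving the one-line loop a cap and an objective exit. This is a minimal sketch under assumptions: the function name `ralph_loop`, its argument order, and the use of a shell command such as `make test` as the done-check are illustrative, not taken from Huntley’s script or any plugin.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: re-run an agent as a new process (and therefore with a
# fresh context window) until an objective check passes or a cap is reached.
#
# Usage: ralph_loop PROMPT_FILE MAX_ITERATIONS DONE_CHECK AGENT_CMD [ARGS...]
#   DONE_CHECK - shell command whose exit status 0 means "done" (e.g. "make test")
#   AGENT_CMD  - agent CLI that reads the prompt on stdin (assumed, e.g. claude -p)
ralph_loop() {
  local prompt_file="$1" max_iterations="$2" done_check="$3"
  shift 3
  local i
  for (( i = 1; i <= max_iterations; i++ )); do
    echo "--- iteration $i ---" >&2
    # New process each time: nothing carries over except files on disk.
    "$@" < "$prompt_file" || echo "agent exited with status $?; continuing" >&2
    # The agent's own claim of success doesn't end the loop; the check does.
    if bash -c "$done_check"; then
      echo "done after $i iteration(s)" >&2
      return 0
    fi
  done
  echo "stopped: $max_iterations iterations without passing the check" >&2
  return 1
}
```

For example, `ralph_loop PROMPT.md 20 "make test" claude -p` would run at most 20 fresh Claude Code sessions and stop as soon as `make test` exits 0.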

This redefines the human role. Instead of approving each step, you design the validation criteria upfront. The agent handles the iteration. The tests and build process handle the judgment.

The Three-Phase Methodology

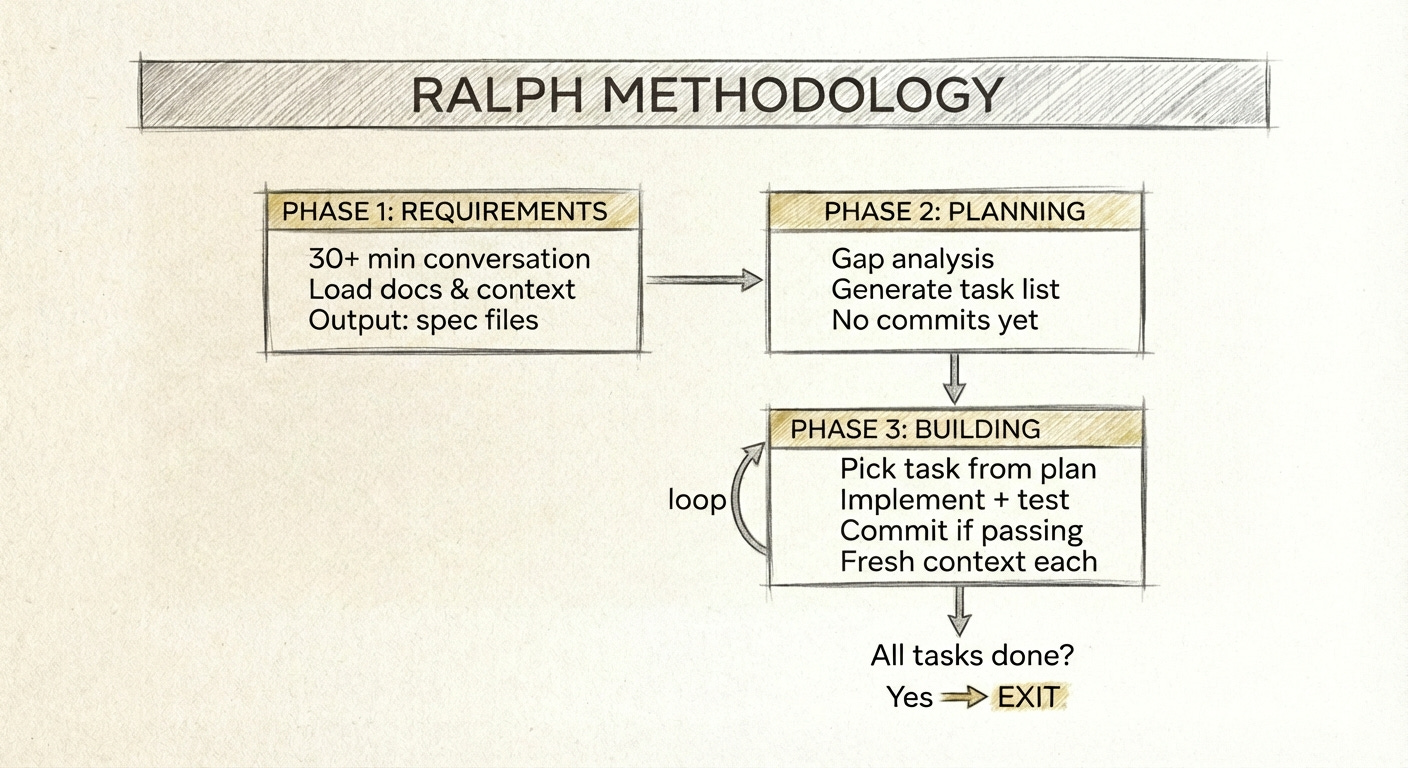

The loop is just the mechanism. According to the how-to-ralph-wiggum repository, Ralph is actually a structured methodology with three distinct phases.

Phase 1: Requirements Definition. This starts with extended conversation. Thirty minutes or more with the AI, exploring jobs-to-be-done, loading information from docs and URLs into context. The output is structured specification files. The Real Ralph Wiggum Loop emphasizes this phase: “Don’t start coding. Start talking. Spend 30+ minutes in conversation before writing a single spec.”

Phase 2: Planning Loop. The agent performs gap analysis between specs and existing code, generating a prioritized task list. No implementation happens here. No commits. The loop continues until the plan is complete and coherent.
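Because the planning loop commits nothing, one mechanical way to decide that it is “complete” is to watch the plan file itself. The sketch below assumes a separate planning prompt, an `IMPLEMENTATION_PLAN.md` the agent rewrites, and a hypothetical convergence heuristic: stop once a pass leaves the plan byte-for-byte unchanged. That heuristic detects a settled plan, not a coherent one; coherence still needs a human read.

```bash
#!/usr/bin/env bash
# Hypothetical planning-loop sketch: re-run the planning prompt until the plan
# file stops changing between passes (an assumed convergence heuristic).

# Checksum of the plan, or "missing" if the agent hasn't written it yet.
plan_fingerprint() {
  if [ -f "$1" ]; then cksum < "$1"; else echo missing; fi
}

# Usage: plan_loop PLAN_PROMPT PLAN_FILE MAX_ITERATIONS AGENT_CMD [ARGS...]
plan_loop() {
  local prompt_file="$1" plan_file="$2" max_iterations="$3"
  shift 3
  local before after i
  for (( i = 1; i <= max_iterations; i++ )); do
    before=$(plan_fingerprint "$plan_file")
    # Planning pass: per the methodology, no implementation and no commits.
    "$@" < "$prompt_file"
    after=$(plan_fingerprint "$plan_file")
    # Converged: a plan exists and this pass left it unchanged.
    if [ "$after" != missing ] && [ "$before" = "$after" ]; then
      echo "plan stable after $i pass(es)" >&2
      return 0
    fi
  done
  echo "plan still changing after $max_iterations passes" >&2
  return 1
}
```

For example, `plan_loop PLAN_PROMPT.md IMPLEMENTATION_PLAN.md 5 claude -p`, where `PLAN_PROMPT.md` is a hypothetical file name.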

Phase 3: Building Loop. With a plan in place, each iteration picks a task, implements it, runs tests, and commits. Fresh context each time, single task focus. The loop continues until all tasks are marked complete.
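If the plan is kept as a Markdown checklist, the “pick a task” and bookkeeping steps need only standard text tools. In this sketch the `- [ ]` / `- [x]` checklist format and the `(commit HASH)` suffix are assumptions, not the documented format of the skill or of any other Ralph implementation; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for a Markdown-checklist plan:
#   - [ ] open task
#   - [x] finished task (commit abc1234)

# Print the text of the first unchecked task; return 1 if none remain.
next_task() {
  local plan_file="$1" line
  line=$(grep -m 1 '^- \[ ] ' "$plan_file") || return 1
  printf '%s\n' "${line#"- [ ] "}"
}

# Tick the first unchecked task and append the commit that implemented it.
mark_task_done() {
  local plan_file="$1" commit="$2" tmp_file
  tmp_file=$(mktemp) || return 1
  awk -v c="$commit" '
    !marked && index($0, "- [ ] ") == 1 {
      $0 = "- [x] " substr($0, 7) " (commit " c ")"
      marked = 1
    }
    { print }
  ' "$plan_file" > "$tmp_file" && mv "$tmp_file" "$plan_file"
}
```

After a task’s tests pass and it is committed, `mark_task_done IMPLEMENTATION_PLAN.md "$(git rev-parse --short HEAD)"` records the hash on the finished line. Whether this bookkeeping lives in the shell script or in the prompt is a design choice; the sketch only shows the mechanics.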

The guardrail mechanism throughout is what practitioners call “backpressure.” Rather than human approval on every action, automated signals reject invalid work. Test suites that must pass. Type checkers that must succeed. Linters that enforce standards. Build processes that must complete. You design the criteria once. The iteration handles enforcement.
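Backpressure reduces to a gate: run every check and refuse to commit unless all of them pass. A minimal sketch with hypothetical function names; the check commands you pass in are whatever your project already uses.

```bash
#!/usr/bin/env bash
# Hypothetical backpressure gate: run each check command in order and stop at
# the first failure. Exit status 0 only if every check passes.
#
# Usage: backpressure_gate "CHECK_CMD" ["CHECK_CMD" ...]
backpressure_gate() {
  local check
  for check in "$@"; do
    if ! bash -c "$check"; then
      echo "backpressure: rejected by '$check'" >&2
      return 1
    fi
  done
  return 0
}

# Commit only work that got through the gate.
# Usage: gated_commit MESSAGE "CHECK_CMD" ["CHECK_CMD" ...]
gated_commit() {
  local message="$1"
  shift
  backpressure_gate "$@" || return 1
  git add -A && git commit -m "$message"
}
```

For a TypeScript project that might be `gated_commit "Add parser" "npm test" "npx tsc --noEmit" "npx eslint ." "npm run build"`, with each command standing in for your own test, type-check, lint, and build steps.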

Not Fire-and-Forget

Despite the “run it overnight” stories, Ralph done right is not a fire-and-forget mechanism. The human remains attentive to the agent’s activity, looking for opportunities to tune.

You spend significant time upfront defining requirements. You design the backpressure criteria that determine success. You watch for agents “going in circles” on impossible tasks. You adjust prompts when behavior degrades. You set iteration limits as circuit breakers. The Real Ralph Wiggum Loop describes an ongoing tuning process: when Ralph makes mistakes, you add “signs” to guide future behavior. The philosophy: “Never blame the model. Always be curious about what’s going on.”
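Two of those circuit breakers can be automated. The sketch below stops on an iteration cap and on a crude “going in circles” signal: the same fingerprint of the repository state showing up several iterations in a row. The function name, the global variables, and the default threshold of three repeats are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical circuit breaker. Call once per iteration with a fingerprint of
# the repository state. Return codes:
#   0 = keep going, 1 = iteration cap reached, 2 = no progress (circling)
BREAKER_LAST_FP=""
BREAKER_REPEATS=0

check_breakers() {
  local iteration="$1" max_iterations="$2" fingerprint="$3" max_repeats="${4:-3}"
  if [ "$fingerprint" = "$BREAKER_LAST_FP" ]; then
    BREAKER_REPEATS=$((BREAKER_REPEATS + 1))
  else
    BREAKER_LAST_FP="$fingerprint"
    BREAKER_REPEATS=1
  fi
  if [ "$BREAKER_REPEATS" -ge "$max_repeats" ]; then
    echo "breaker: same state $BREAKER_REPEATS iterations in a row" >&2
    return 2
  fi
  if [ "$iteration" -ge "$max_iterations" ]; then
    echo "breaker: reached iteration limit $max_iterations" >&2
    return 1
  fi
  return 0
}
```

Inside the loop, something like `fp=$( { git rev-parse HEAD; git diff; } | cksum )` followed by `check_breakers "$i" 20 "$fp" || break` would do. Including `HEAD` matters: an agent that commits every iteration leaves `git diff` empty, which would otherwise look like circling.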

Security is an explicit concern, not an afterthought. To run autonomously, Ralph requires bypassing normal approval prompts. The how-to-ralph-wiggum repository is direct about the risks: running without permissions safeguards in unsandboxed environments exposes credentials, SSH keys, and tokens. Sandboxing is mandatory, not optional. The guidance recommends isolating execution in containers with minimal access, restricted network connectivity, and no private data beyond what the task requires.
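A sketch of what that isolation might look like with Docker. Everything specific here is an assumption to adapt: the image name `ralph-sandbox:latest` (an image you would build with the agent CLI installed and a non-root default user), a pre-created Docker network named `ralph-egress` whose outbound traffic you restrict separately (plain Docker networking does not allowlist domains), and passing through only the API key. `--dangerously-skip-permissions` is the Claude Code flag that skips approval prompts, which is exactly why it belongs inside the container. The point is what is absent: no home directory, no `~/.ssh`, no cloud credentials.

```bash
#!/usr/bin/env bash
# Hypothetical sandbox wrapper: print `docker run` arguments, one per line,
# for running one agent iteration inside a locked-down container.
#   - only the repo is mounted, nothing from $HOME
#   - all Linux capabilities dropped, privilege escalation disabled
#   - a dedicated network, assumed to exist and be egress-restricted elsewhere
#   - only ANTHROPIC_API_KEY is passed through from the host environment
sandbox_docker_args() {
  local repo_dir="$1"
  local image="${RALPH_IMAGE:-ralph-sandbox:latest}"
  local network="${RALPH_NETWORK:-ralph-egress}"
  printf '%s\n' \
    run --rm -i \
    --network "$network" \
    --cap-drop ALL \
    --security-opt no-new-privileges \
    -e ANTHROPIC_API_KEY \
    -v "$repo_dir:/workspace" \
    -w /workspace \
    "$image" \
    claude -p --dangerously-skip-permissions
}
```

To use it, load the arguments with `mapfile -t args < <(sandbox_docker_args "$PWD")` and run `docker "${args[@]}" < PROMPT.md` as the body of each iteration.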

There’s also a failure mode worth understanding. When agents encounter impossible tasks or ambiguous requirements, they may iterate destructively. Supervising Ralph calls this “overbaking.” The iteration limit is a financial circuit breaker, but damage can occur in iteration two. For production use, additional governance may be warranted: tool call interception, behavioral monitoring, distinct agent identity for attribution.

Where It Fits (and Doesn’t)

Ralph excels at greenfield projects with room for iteration. Well-defined tasks with machine-verifiable success criteria. Batch operations like large refactors. Work that benefits from tireless persistence over speed.

It doesn’t work for everything. Exploratory work and design iteration are a poor fit, because the method assumes clear specifications upfront. Tasks requiring human judgment or creative decisions need a different approach. If you can’t define “done” in terms a test suite can verify, Ralph has no objective signal to stop on. Production debugging involves too much context-dependent reasoning. And critically, bad specifications cascade into bad outcomes. The methodology assumes you can write precise requirements.

Success varies by operator. Effective use requires skill in writing specifications, understanding how to design backpressure signals, recognizing when prompts need tuning, and judging when to regenerate plans versus push through.

A Note on the Official Claude Code Plugin

Anthropic released an official Ralph plugin for Claude Code in December 2025. Community reception has been mixed. As A Brief History of Ralph notes, the plugin “misses the key point of Ralph which is not ‘run forever’ but in ‘carve off small bits of work into independent context windows.’” The official plugin runs everything in a single context window rather than spawning fresh context per iteration. For practitioners who value the fresh-context principle, this is a meaningful gap. The methodology described above reflects the original technique, not the simplified plugin implementation.

The Methodology Matters More Than the Loop

What’s striking about Ralph isn’t the technique itself. It’s that the solution to “AI agents are unreliable” turned out to be “apply the engineering discipline we already know.” Specifications before code. Tests as validation gates. Small incremental commits. Fresh context to prevent accumulated errors. These patterns predate generative AI by decades.

The implication is worth sitting with: as AI tools become more capable, the skills that matter most may be the ones we’ve always had. Requirements decomposition. Test design. Knowing when to trust automation and when to intervene. Ralph works because it treats AI as a tool that benefits from the same constraints we’d apply to any other automated system.

Whether Ralph fits your workflow comes down to one question: can you define “done” in machine-verifiable terms? If yes, this methodology offers a framework for getting there. If your work is exploratory, ambiguous, or judgment-heavy, look elsewhere. The technique assumes you can write precise specifications, and that assumption is load-bearing.

Try It: Ralph Method Skill

I’ve built a Ralph Method Skill for Claude Code, based on Matt Pocock’s approach to Claude Code skills. When invoked, the skill places a ralph.sh and a BUILD_PROMPT.md in your repo if they don’t already exist. It then walks you through Phases 1 and 2: the requirements interview and implementation planning. At that point, you run ralph.sh, which works through the generated spec to implement the goal.

Some features of this Ralph Skill:

Branch safety: Offers to create a feature branch if you’re on main

Namespaced specs: specs/[task-name]/ structure supports multiple concurrent tasks

Living AGENTS.md: Updated during building as the agent learns, not just read

Loop signals: COMPLETE and STUCK markers auto-stop the loop

Commit traceability: Commit hashes recorded in IMPLEMENTATION_PLAN.md

One task enforcement: Hard constraint at the top of BUILD_PROMPT.md

Tests-first backpressure: Green tests required before any commit
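The loop-signal idea can be sketched as a scan of each iteration’s output. The skill’s exact marker format isn’t shown here, so this assumes the agent prints `COMPLETE` or `STUCK` alone on a line; the function name and return codes are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical loop-signal check on one iteration's captured output.
# Prints the signal and returns: 0 = continue, 10 = COMPLETE, 11 = STUCK.
# STUCK wins if both appear, so a confused run never reports success.
loop_signal() {
  local output="$1"
  if grep -qx 'STUCK' <<<"$output"; then
    echo STUCK
    return 11
  elif grep -qx 'COMPLETE' <<<"$output"; then
    echo COMPLETE
    return 10
  fi
  echo CONTINUE
  return 0
}
```

In a loop, capture each run with `out=$(claude -p < BUILD_PROMPT.md)`, then call `loop_signal "$out"` and `break` on a nonzero status. Matching whole lines (`grep -x`) keeps a word like “INCOMPLETE” from stopping the loop.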

Installation (recommended): Install via the AlteredCraft plugin marketplace:

```
/plugin marketplace add AlteredCraft/claude-code-plugins
```

This gives you the Ralph Method skill plus other dev tools, with easy updates as the plugins evolve.

Alternative: If you prefer a standalone install, copy the skill contents to ~/.claude/skills/ralph-method/.

Once installed, use it naturally: “Use your Ralph method skill to implement GitHub issue 9.” The agent will take you through Phases 1 and 2 of the Ralph methodology. When Phase 2 completes, you run ./ralph.sh to start the building loop.

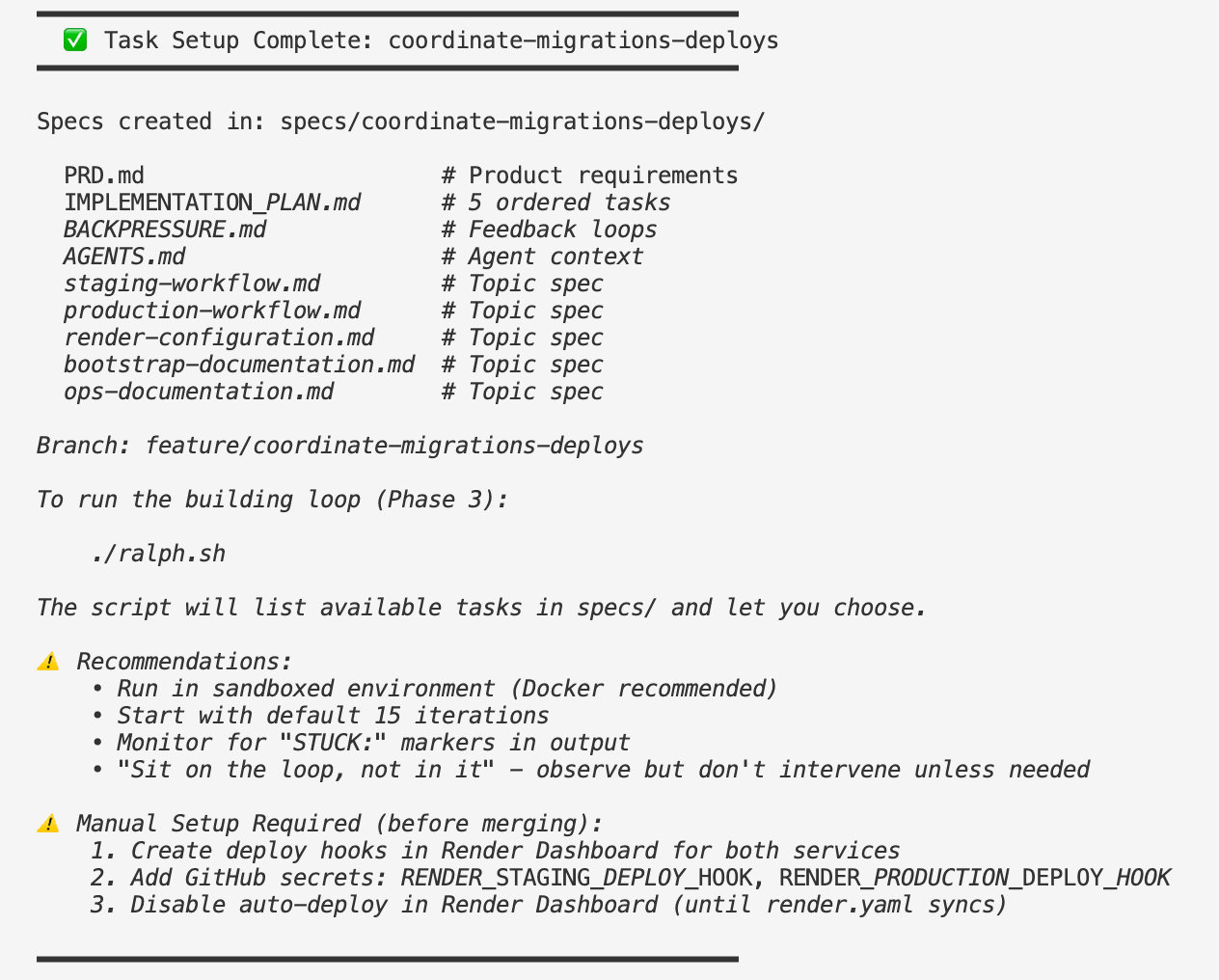

Here’s the skill after completing Phases 1 and 2, ready for the building loop:

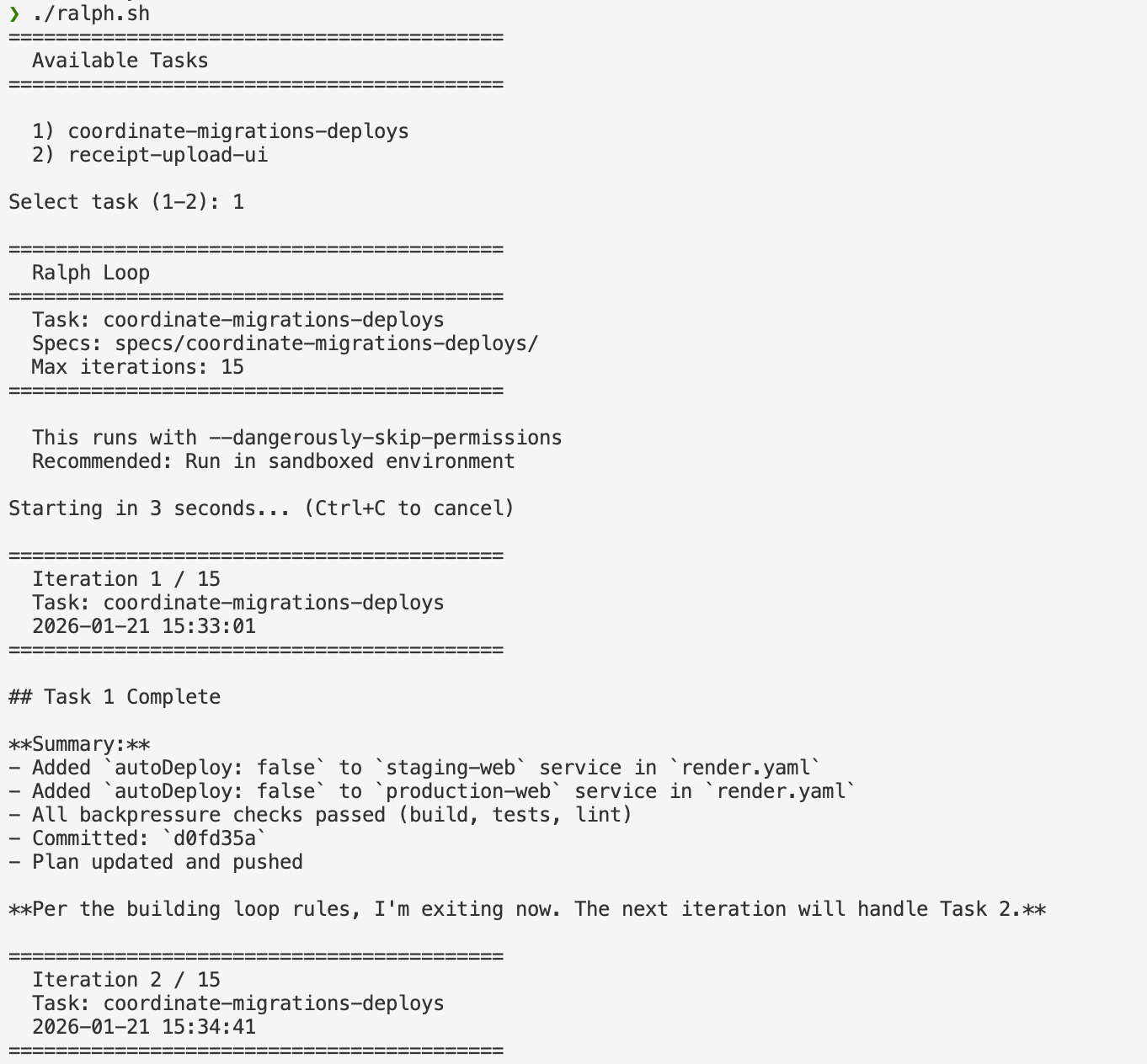

And the loop in action, working through IMPLEMENTATION_PLAN.md one task at a time:

Note that I’m currently in that “tuning phase” for this skill. Use with caution. The current version runs as an Away From Keyboard (AFK) loop. I plan to build a complementary ralph-one.sh that processes one task at a time with output piped to STDOUT for real-time monitoring.
