MCP Tools Should Handle Complete Workflows, Not Mirror APIs

PLUS - OpenAI Launches GPT-5-Codex for Advanced Coding

Welcome back to AlteredCraft's Delta Notes! Thank you for joining us as we explore the latest developments reshaping AI and software engineering. This edition dives into OpenAI's GPT-5-Codex launch for advanced coding, Meta's compact reasoning models that outperform giants, and crucial insights on why MCP tools should handle complete workflows rather than mirror APIs. Let's discover what's next.

TUTORIALS & CASE STUDIES

Optimize Claude Code for Domain-Specific Libraries

Estimated read time: 13 min

LangChain engineers found that pairing a concise Claude.md guide with documentation tools gave the best results when customizing Claude Code for domain-specific libraries. In their experiments, high-quality, condensed information combined with tools outperformed raw documentation access alone. The team shares their evaluation framework, test results across LangGraph tasks, and practical takeaways for developers building coding agents for custom libraries.

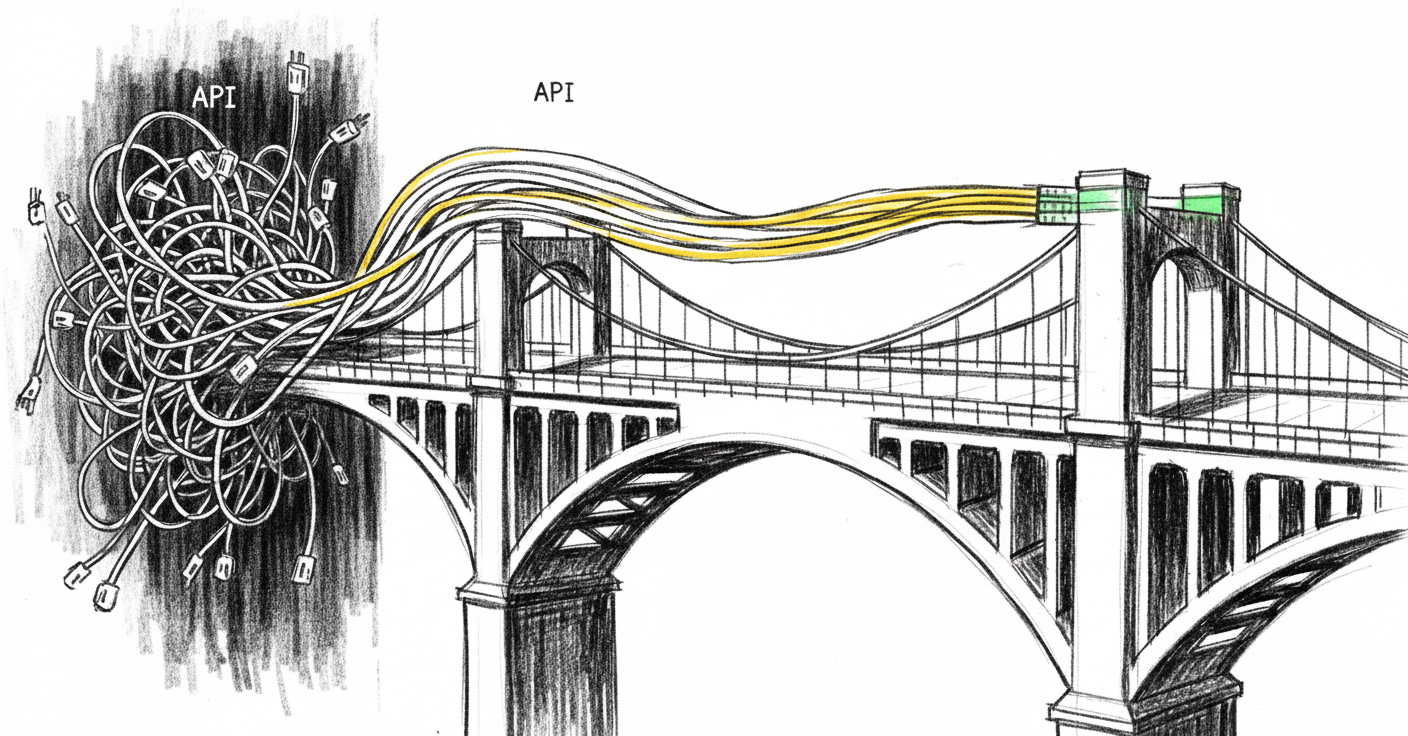

MCP Tools Should Handle Complete Workflows, Not Mirror APIs

Estimated read time: 4 min

Vercel explains why wrapping existing APIs for Model Context Protocol (MCP) fails: LLMs lack persistent state between conversations. Instead of exposing multiple low-level operations, developers should create single workflow-based tools that handle complete user intentions. This approach dramatically improves reliability and reduces orchestration complexity for AI agents.
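To make the contrast concrete, here is a minimal Python sketch. `FakePlatformAPI` and its methods are hypothetical stand-ins for a real REST API, not Vercel's code. An API-mirroring MCP server would expose each method as its own tool and leave the model to chain them. The equally hypothetical `deploy_project` instead wraps the whole user intent in a single call.

```python
from dataclasses import dataclass, field


@dataclass
class FakePlatformAPI:
    """Hypothetical in-memory stand-in for an API with low-level endpoints."""
    projects: dict[str, dict] = field(default_factory=dict)
    next_deploy_id: int = 1

    def create_project(self, name: str) -> str:
        self.projects[name] = {"env": {}, "deployments": []}
        return name

    def set_env_var(self, project: str, key: str, value: str) -> None:
        self.projects[project]["env"][key] = value

    def trigger_deployment(self, project: str) -> str:
        deploy_id = f"dpl_{self.next_deploy_id}"
        self.next_deploy_id += 1
        self.projects[project]["deployments"].append(deploy_id)
        return deploy_id


def deploy_project(api: FakePlatformAPI, name: str, env: dict[str, str]) -> str:
    """Workflow-level tool: one call covers the whole user intent.

    The model never has to carry IDs or the correct call order between
    steps; the tool owns that orchestration and returns a summary the
    model can relay directly to the user.
    """
    if name not in api.projects:
        api.create_project(name)
    for key, value in env.items():
        api.set_env_var(name, key, value)
    deploy_id = api.trigger_deployment(name)
    return f"Deployed '{name}' as {deploy_id} with {len(env)} env var(s) set."


api = FakePlatformAPI()
summary = deploy_project(api, "blog", {"DATABASE_URL": "postgres://example"})
print(summary)
```

In a real server, a function like this would be registered as a single tool (for example via the `@mcp.tool()` decorator on a `FastMCP` instance in the official MCP Python SDK). That wiring is omitted so the sketch runs with only the standard library.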

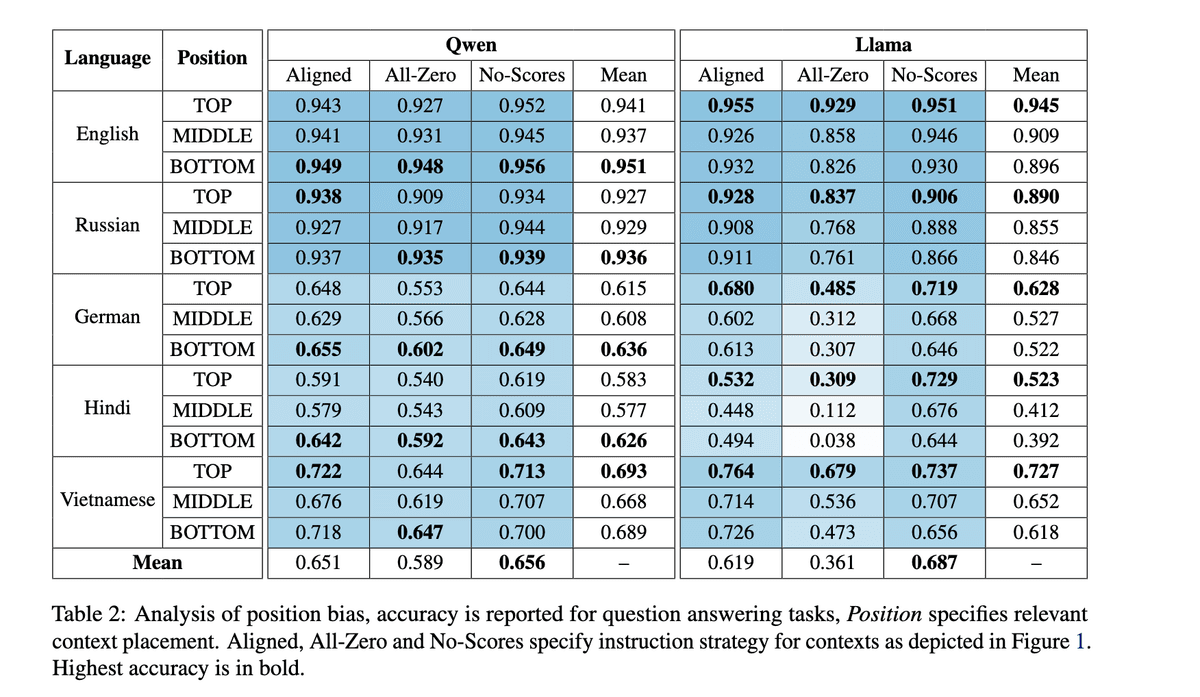

Rewrite Prompts When Switching AI Models

Estimated read time: 5 min

Max Leiter explains why developers should rewrite their prompts when switching between LLMs like GPT-4 and Claude. He identifies three key factors: prompt format preferences (markdown vs. XML), position bias that affects where in the prompt a model pays attention, and intrinsic biases the model picks up in training. His advice to deliberately overfit prompts to each model challenges the common practice of reusing the same prompt across different AI systems.
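The format factor is the easiest one to act on in code. This Python sketch keeps prompt content separate from its presentation, so the same sections can be rendered as markdown headings or XML tags. The `STYLE_BY_MODEL` mapping and the `render_prompt` helper are hypothetical illustrations, not taken from Leiter's post; treat the per-model preferences as assumptions to check against your own evals.

```python
from typing import Literal

PromptStyle = Literal["markdown", "xml"]

# Assumed per-model preferences, for illustration only.
STYLE_BY_MODEL: dict[str, PromptStyle] = {
    "gpt-4": "markdown",
    "claude": "xml",
}


def render_prompt(sections: dict[str, str], style: PromptStyle) -> str:
    """Render the same prompt content in the format a given model prefers."""
    parts = []
    for name, body in sections.items():
        if style == "markdown":
            # "my_task" becomes a "## My Task" heading.
            parts.append(f"## {name.replace('_', ' ').title()}\n{body}")
        else:
            # "my_task" becomes <my_task>...</my_task> tags.
            parts.append(f"<{name}>\n{body}\n</{name}>")
    return "\n\n".join(parts)


sections = {
    "instructions": "Summarize the document in three bullet points.",
    "document": "MCP tools should handle complete workflows, not mirror APIs.",
}
for model, style in STYLE_BY_MODEL.items():
    print(f"--- {model} ---")
    print(render_prompt(sections, style))
```

Reformatting only covers the first of the three factors. Position bias and intrinsic model biases still call for re-testing section order and wording for each model.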

How Language Models Pack Millions of Concepts

Estimated read time: 15 min

Discover how GPT-3's 12,288-dimensional embedding space can represent millions of concepts through high-dimensional geometry and the Johnson-Lindenstrauss lemma. This deep dive explores vector packing optimization and shows that language models can store 10^73 or more quasi-orthogonal vectors, far more than would be needed to represent human knowledge. Essential reading for developers building RAG systems or working with embeddings.
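A quick way to see this geometry at work is to sample a few random unit vectors in 12,288 dimensions and measure how close to orthogonal they are. The stdlib-only Python sketch below does that and also evaluates the Dasgupta-Gupta form of the Johnson-Lindenstrauss bound, k ≥ 4 ln n / (ε²/2 − ε³/3). It is an illustration of the underlying ideas, not the article's own code.

```python
import math
import random


def jl_min_dim(n_points: int, eps: float) -> int:
    """Dimension sufficient (Dasgupta-Gupta form of the JL lemma) to embed
    n points while keeping pairwise squared distances within 1 +/- eps."""
    return math.ceil(4 * math.log(n_points) / (eps**2 / 2 - eps**3 / 3))


def random_unit_vector(dim: int, rng: random.Random) -> list[float]:
    """Gaussian coordinates, normalized, give a uniformly random direction."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def max_abs_cosine(vectors: list[list[float]]) -> float:
    """Largest |cos(angle)| over all pairs of unit vectors."""
    worst = 0.0
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            dot = sum(a * b for a, b in zip(vectors[i], vectors[j]))
            worst = max(worst, abs(dot))
    return worst


rng = random.Random(0)
dim = 12_288  # GPT-3's embedding width
vecs = [random_unit_vector(dim, rng) for _ in range(20)]
print(f"max |cos| among 20 random vectors: {max_abs_cosine(vecs):.3f}")
print("JL dimension for 1e6 points at eps=0.1:", jl_min_dim(10**6, 0.1))
```

Typical pairwise |cos| values land near 1/√12288 ≈ 0.009, so random directions sit within a degree or two of 90°. The JL bound for a million points at 10% distortion works out to 11,842 dimensions, just under GPT-3's width.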

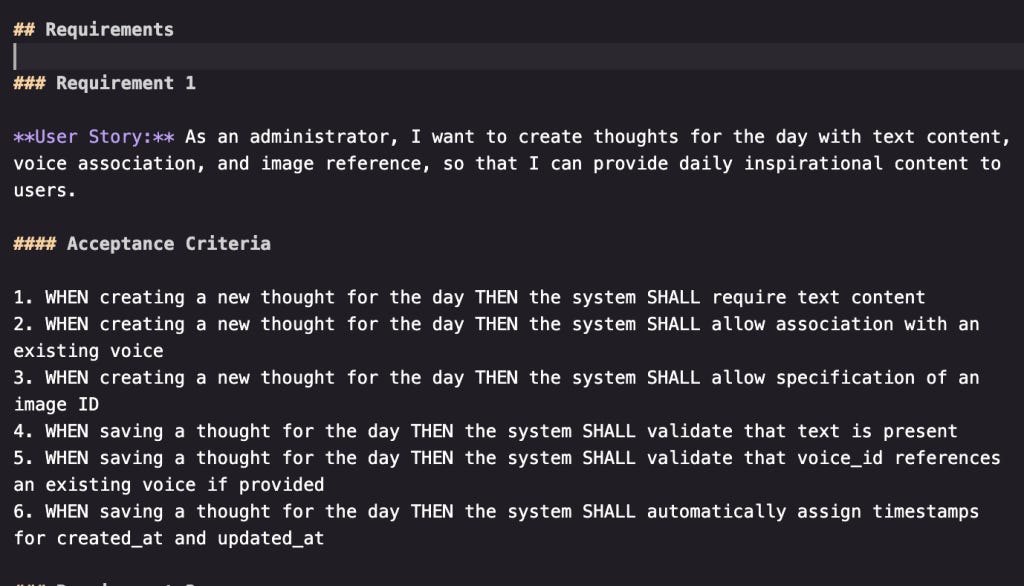

AWS Kiro Tests Spec-Driven AI IDE Development

Estimated read time: 8 min

AWS Kiro takes a different approach from "vibe coding" IDEs like Cursor and Windsurf by focusing on spec-driven development with formal requirements. The tool breaks development into three phases: generating user stories with acceptance criteria, creating technical designs, and implementing trackable tasks. This structured workflow produces shareable markdown artifacts suitable for team collaboration, though the UI needs refinement for broader adoption.
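To picture what such artifacts can look like, here is a small Python sketch that renders a user story, design notes, and a task checklist into one markdown document. The data classes, field names, and single-file layout are hypothetical and do not reproduce Kiro's own templates. The WHEN/THE SYSTEM SHALL phrasing of the acceptance criteria is just one common requirements style.

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    role: str
    goal: str
    benefit: str
    acceptance_criteria: list[str] = field(default_factory=list)


@dataclass
class Task:
    description: str
    done: bool = False


def render_spec(story: UserStory, design_notes: list[str], tasks: list[Task]) -> str:
    """Render the three phases (requirements, design, tasks) as markdown."""
    lines = [
        "# Requirements",
        f"As a {story.role}, I want {story.goal}, so that {story.benefit}.",
        "",
        "## Acceptance criteria",
        *(f"- {criterion}" for criterion in story.acceptance_criteria),
        "",
        "# Design",
        *(f"- {note}" for note in design_notes),
        "",
        "# Tasks",
        # Checkbox items keep each task trackable in any markdown viewer.
        *(f"- [{'x' if task.done else ' '}] {task.description}" for task in tasks),
    ]
    return "\n".join(lines)


story = UserStory(
    role="registered user",
    goal="to reset my password by email",
    benefit="I can regain access without contacting support",
    acceptance_criteria=[
        "WHEN a user requests a reset THE SYSTEM SHALL email a single-use link",
        "WHEN the link is older than 30 minutes THE SYSTEM SHALL reject it",
    ],
)
spec = render_spec(
    story,
    design_notes=["Store hashed reset tokens with an expiry timestamp"],
    tasks=[Task("Add reset-token table", done=True), Task("Send reset email")],
)
print(spec)
```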

TOOLS

Moondream 3: Frontier Visual AI in 2B Parameters

Estimated read time: 8 min

Moondream 3 Preview introduces a 9B-parameter mixture-of-experts (MoE) architecture with only 2B active parameters, achieving frontier-level visual reasoning while keeping inference fast. This hybrid reasoning model excels at object detection, pointing, OCR, and structured output generation, with a 32K-token context length. Perfect for developers building real-world vision AI applications that need fast, trainable, and cost-effective inference.

GitHub Launches MCP Registry for AI Agent Discovery

Estimated read time: 6 min

GitHub introduces the MCP Registry, a centralized hub for discovering Model Context Protocol (MCP) servers that enable AI agents and tools to communicate seamlessly. The registry features one-click VS Code installation, community-driven curation, and partnerships with Figma, Postman, HashiCorp, and Dynatrace. This initiative addresses the fragmented ecosystem of MCP servers scattered across repositories, making it easier for developers to build agentic workflows with GitHub Copilot and other AI tools.

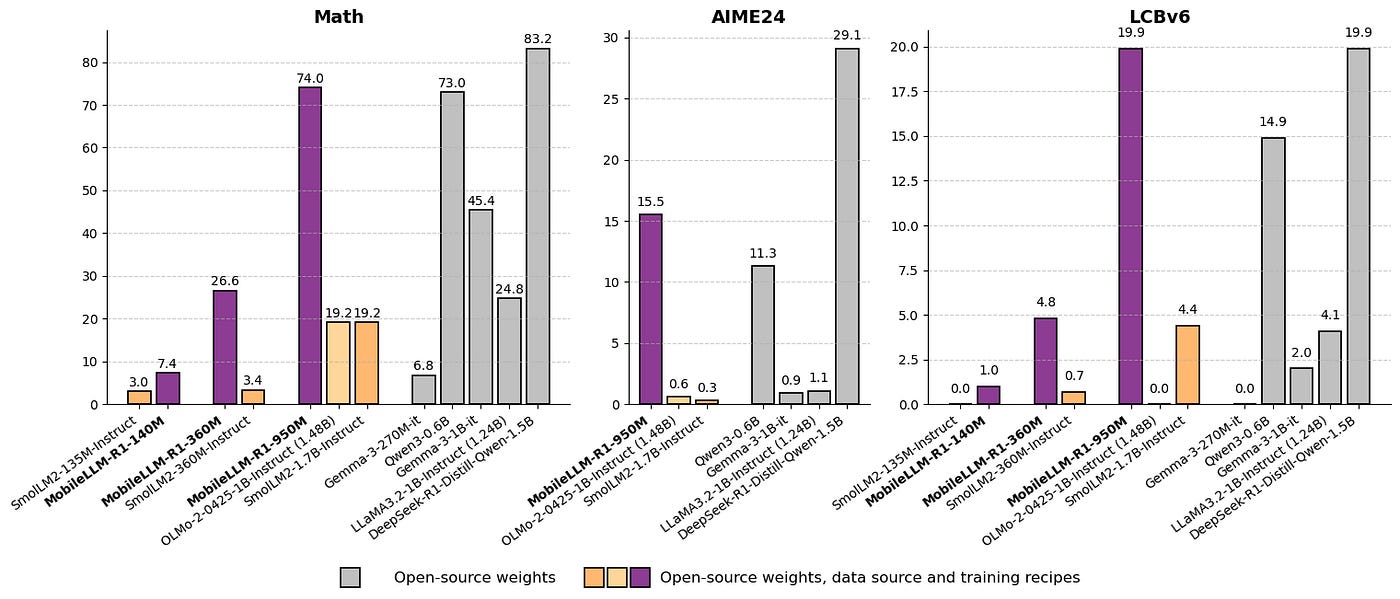

Meta's MobileLLM-R1 Outperforms Larger Reasoning Models

Estimated read time: 6 min

Meta released MobileLLM-R1, a family of sub-1B-parameter reasoning models that outperform much larger competitors. The 950M model beats Qwen3 0.6B despite training on 5T tokens versus Qwen3's 36T, and it achieves 5× higher accuracy than Olmo 1.24B on math tasks. These lightweight models excel at coding and mathematical reasoning, offering developers efficient AI capabilities for resource-constrained applications.

Hugging Face Enables Open-Source LLMs in GitHub Copilot

Estimated read time: 3 min

Hugging Face's new VS Code integration lets developers use open-source LLMs like Kimi K2, DeepSeek V3.1, and GLM 4.5 directly within GitHub Copilot Chat. This reduces vendor lock-in by providing instant access to hundreds of specialized models through Hugging Face's Inference Providers API, so developers can use task-specific AI models without switching platforms.

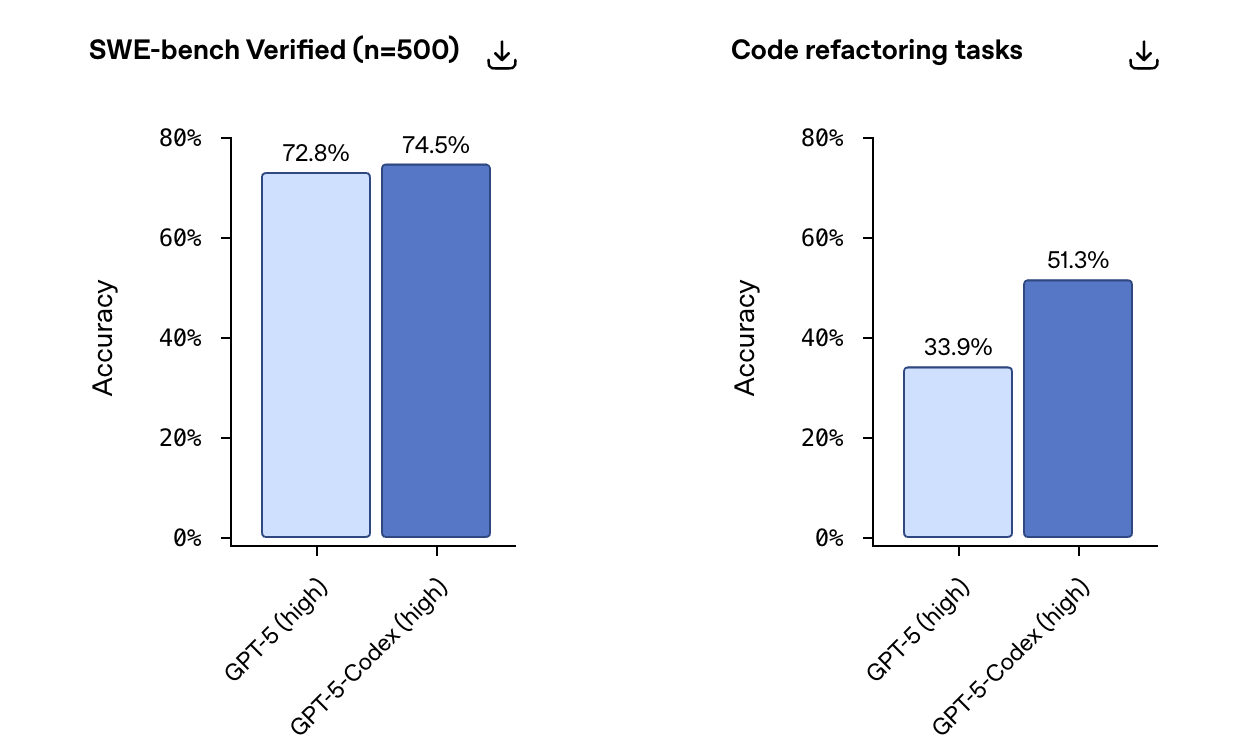

OpenAI Launches GPT-5-Codex for Advanced Coding

Estimated read time: 8 min

OpenAI introduces GPT-5-Codex, an AI coding agent optimized for real-world software engineering. It can work independently on complex tasks for more than 7 hours, performs code reviews to catch critical bugs, and integrates with IDEs, terminals, and GitHub. Available with ChatGPT Plus and Pro subscriptions, it features enhanced security sandboxing and adjusts how long it reasons based on task complexity.

NEWS & EDITORIALS

AI Coding Agents Transform Software Development Workflows

Estimated read time: 13 min

Coding has become the epicenter of AI progress, with CLI agents revolutionizing development workflows. Tools like Claude Code and OpenAI's Codex enable developers to build complex projects in hours instead of weeks. The article explores how these agents are merging over a million PRs, democratizing software creation, and shifting coding from manual implementation to asynchronous, AI-driven development.

AI Rollups Transform Traditional Industries Through Ownership

Estimated read time: 38 min

Venture firms are backing AI-powered rollups that acquire traditional businesses rather than just selling software to them. Companies like Metropolis (parking), Crete (accounting), and Crescendo (call centers) demonstrate how owning the service layer enables deeper AI integration and margin expansion. This shift from selling tools to operating businesses directly addresses the 42% failure rate of enterprise AI initiatives.

MIT Symposium Reveals Future of Generative AI Beyond LLMs

Estimated read time: 8 min

MIT's inaugural Generative AI Impact Consortium Symposium brought together researchers and industry leaders to explore AI's future. Meta's Yann LeCun argued that world models that learn the way infants do will surpass current LLMs, while Amazon's Tye Brady showcased generative AI's impact on robotics and warehouse automation. The event highlighted practical applications for developers building RAG systems and AI agents.

AI Agents Finally Get Clear Definition for Developers

Estimated read time: 8 min

Simon Willison proposes a practical definition of AI agents that developers can actually use: "An LLM agent runs tools in a loop to achieve a goal." This clarity helps developers building RAG frameworks and multi-LLM systems communicate effectively about agent architectures. He contrasts this technical definition with misleading "human replacement" framings, and notes that OpenAI uses the term in several conflicting ways.
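Willison's definition maps almost directly onto code. This Python sketch is a hypothetical illustration, not taken from his post: `fake_model` stands in for an LLM call, `increment` stands in for a real tool, and the loop runs until the model returns a final answer or a step budget runs out.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    """What the (fake) model decided: call a tool, or finish with an answer."""
    tool: Optional[str] = None
    argument: str = ""
    final_answer: Optional[str] = None


def fake_model(goal: int, observations: list[str]) -> Action:
    """Hypothetical stand-in for an LLM: keeps calling the increment tool
    until the latest observation reaches the goal."""
    current = int(observations[-1]) if observations else 0
    if current >= goal:
        return Action(final_answer=f"Reached {current}")
    return Action(tool="increment", argument=str(current))


# Hypothetical tool registry: plain functions the model may request by name.
TOOLS: dict[str, Callable[[str], str]] = {
    "increment": lambda arg: str(int(arg) + 1),
}


def run_agent(goal: int, max_steps: int = 10) -> tuple[str, list[str]]:
    """Tools in a loop to achieve a goal, with a step budget as a safety stop."""
    observations: list[str] = []
    for _ in range(max_steps):
        action = fake_model(goal, observations)
        if action.final_answer is not None:
            return action.final_answer, observations
        # Run the requested tool and feed its result back on the next turn.
        observations.append(TOOLS[action.tool](action.argument))
    return "Stopped: step budget exhausted", observations


answer, trace = run_agent(goal=3)
print(answer, trace)
```

Swapping `fake_model` for a real model call and `TOOLS` for real functions leaves the loop unchanged. The step budget matters in practice because a real model may never decide it is done.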

Anthropic's Infrastructure Bugs Degraded Claude's Performance

Estimated read time: 10 min

Anthropic reveals how three overlapping infrastructure bugs degraded Claude's responses between August and early September 2025. The issues included routing errors that sent requests to servers configured for a different context window, output corruption that produced unexpected characters, and XLA:TPU compiler bugs affecting token selection. This detailed postmortem offers valuable insights for developers building AI systems about infrastructure complexity, debugging challenges, and quality assurance in production LLM deployments.