Mapping AI Agent Adoption Across 217,000 GitHub Repositories

Which AI agents are developers actually using?

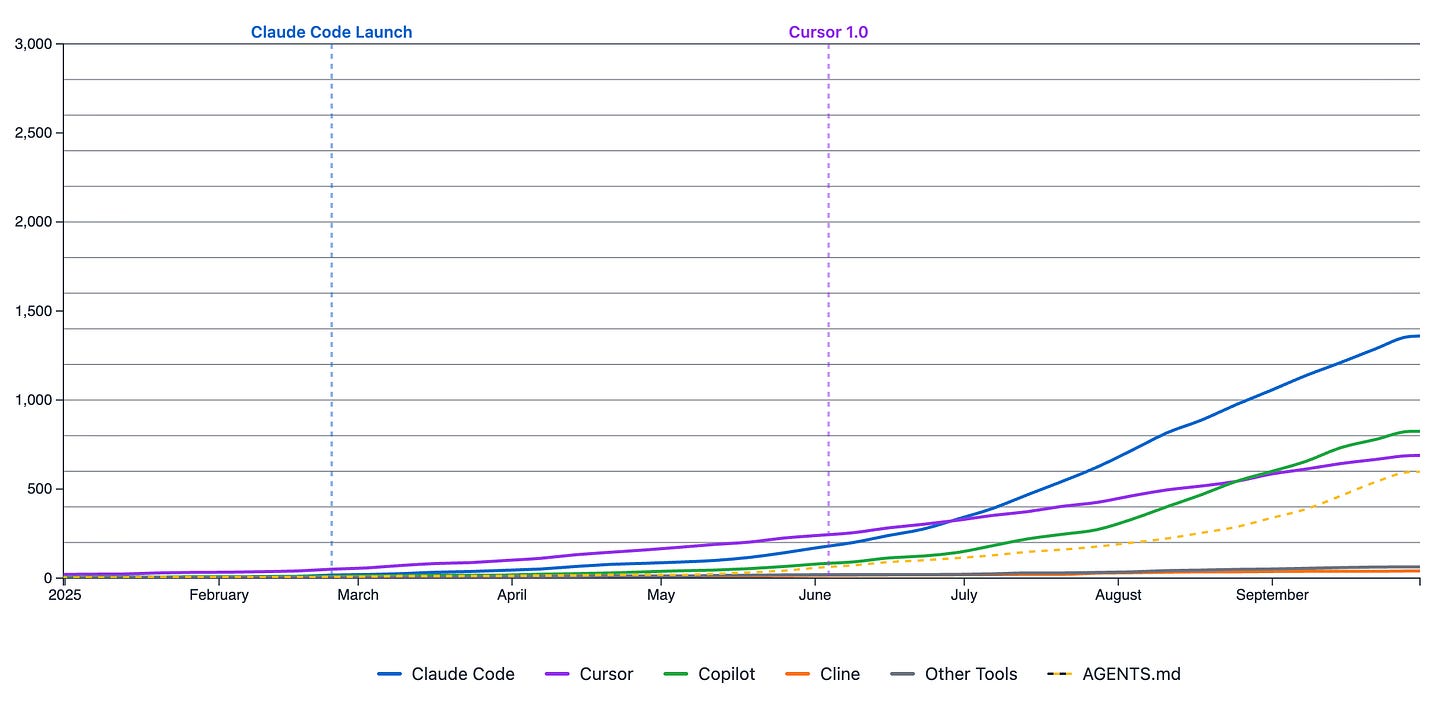

I scanned 217,000+ active public GitHub repositories in early October 2025 to map how developers are adopting AI coding agents. The overall adoption rate is 4.9%. The data reveals three patterns: Claude Code is leading the adoption race, new repositories adopt agents at triple the rate of established projects, and 13.4% of adopters run multiple tools side-by-side.

These patterns matter because they show how developers actually use agents in production rather than how they talk about using them. Configuration files committed to repositories represent deliberate choices about tools and workflows in real projects.

The Scanning Methodology

AI coding agents started gaining considerable traction in early 2025, triggered in part by the release of “reasoning” LLMs. Cursor was first to capture significant market share. Claude Code followed in February 2025. GitHub evolved Copilot toward agent-like capabilities. Smaller tools and open standards emerged alongside these major platforms.

Developers who adopt agents often add configuration files to their repositories. A .cursorrules file specifies how Cursor should behave on a project; a CLAUDE.md file configures Claude Code. When a repository adds these configurations, the commit history offers a good signal as to when adoption happened and which tool the team chose.
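A minimal sketch of how this kind of detection could work, given a list of repo-relative file paths. The marker table is an assumption for illustration: only .cursorrules and CLAUDE.md are named in this article, and the other entries, the tool keys, and the root-only matching are hypothetical choices, not the scanner's actual rules.

```python
from typing import Dict, Iterable, Set, Tuple

# Hypothetical marker table. Only ".cursorrules" and "CLAUDE.md" come from the
# article; the remaining entries are assumptions added for illustration.
AGENT_MARKERS: Dict[str, Tuple[str, ...]] = {
    "cursor": (".cursorrules", ".cursor/rules/"),
    "claude_code": ("CLAUDE.md",),
    "copilot": (".github/copilot-instructions.md",),
}


def detect_agents(paths: Iterable[str]) -> Set[str]:
    """Return the tools whose marker appears among repo-relative paths.

    Markers ending in "/" match any file under that directory; all other
    markers must match a root-level path exactly.
    """
    found: Set[str] = set()
    for path in paths:
        for tool, markers in AGENT_MARKERS.items():
            for marker in markers:
                is_directory_marker = marker.endswith("/")
                if is_directory_marker and path.startswith(marker):
                    found.add(tool)
                elif path == marker:
                    found.add(tool)
    return found


if __name__ == "__main__":
    example_tree = ["README.md", "CLAUDE.md", ".cursor/rules/style.mdc"]
    print(sorted(detect_agents(example_tree)))
```

Matching only at the repository root keeps the sketch simple; a real scan would also need to decide whether nested config files count as adoption.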

The scan covered all public repositories with between 200 and 430,000 stars. I excluded repositories without a commit in the last 90 days to ensure analysis of active projects, which left a data set of 217,000+ repositories. For each repository, I checked for agent configuration files, recorded which tools were present, and logged commit dates to track adoption timing. Because star counts on GitHub follow a power law, the number of repositories grows steeply as the star threshold drops, which made scanning below 200 stars impractical.
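The scope rules above can be sketched as a simple filter. This is an illustration under assumptions, not the scanner's code: the `RepoRecord` type and its field names are hypothetical, and treating the star bounds and the 90-day window as inclusive is a guess.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Thresholds taken from the article; inclusive bounds are an assumption.
MIN_STARS = 200
MAX_STARS = 430_000
ACTIVE_WINDOW = timedelta(days=90)


@dataclass
class RepoRecord:
    """Hypothetical record for one repository; field names are illustrative."""
    full_name: str
    stars: int
    last_commit: datetime


def is_in_scope(repo: RepoRecord, as_of: datetime) -> bool:
    """True if the repo is within the star range and has a recent commit."""
    in_star_range = MIN_STARS <= repo.stars <= MAX_STARS
    recently_active = (as_of - repo.last_commit) <= ACTIVE_WINDOW
    return in_star_range and recently_active


if __name__ == "__main__":
    scan_date = datetime(2025, 10, 1, tzinfo=timezone.utc)
    repo = RepoRecord("example/project", 512, datetime(2025, 9, 1, tzinfo=timezone.utc))
    print(is_in_scope(repo, scan_date))
```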

You can explore the data interactively online, where you can zoom in on the adoption curves and view trends by individual tool or as combined adoption rates.

What This Data Shows (and Doesn’t Show)

The most important limitation: this dataset covers only public GitHub repositories, a large but limited slice of all source code. Private repositories, where most commercial development happens, don’t appear in the data. The agents enterprise teams use, adoption rates in commercial settings, and tool preferences of developers working on closed-source projects are not visible in this analysis.

Agent configuration files are also optional. Many developers use agents without committing config files, keep settings in global preferences rather than per-repository files, or use agents through IDE features that don’t require separate configuration. The data shows committed configurations only, so actual agent usage is likely higher than these numbers indicate.

The 200-star threshold is a practical choice given GitHub’s power law distribution, but it excludes the long tail of personal projects, early experiments, and niche tools. I may investigate sampling strategies in the future to explore this lower-starred set.

What the dataset reveals is relative trends and adoption velocity among developers who work publicly and choose to document their agent setup. These boundaries contextualize rather than invalidate the findings.

The Results

Configuration files show when teams adopted agents and which tools they chose, but not why they made those decisions or what factors drove specific choices.

To understand the reasoning behind these patterns, I’m interviewing maintainers of repositories in the dataset. These conversations explore what drove adoption decisions, what challenges emerged during integration, and how teams view agents as development infrastructure versus productivity experiments.

The three patterns below show what’s happening in the data. The interviews will reveal why.

Claude Code Overtakes First-Mover Cursor

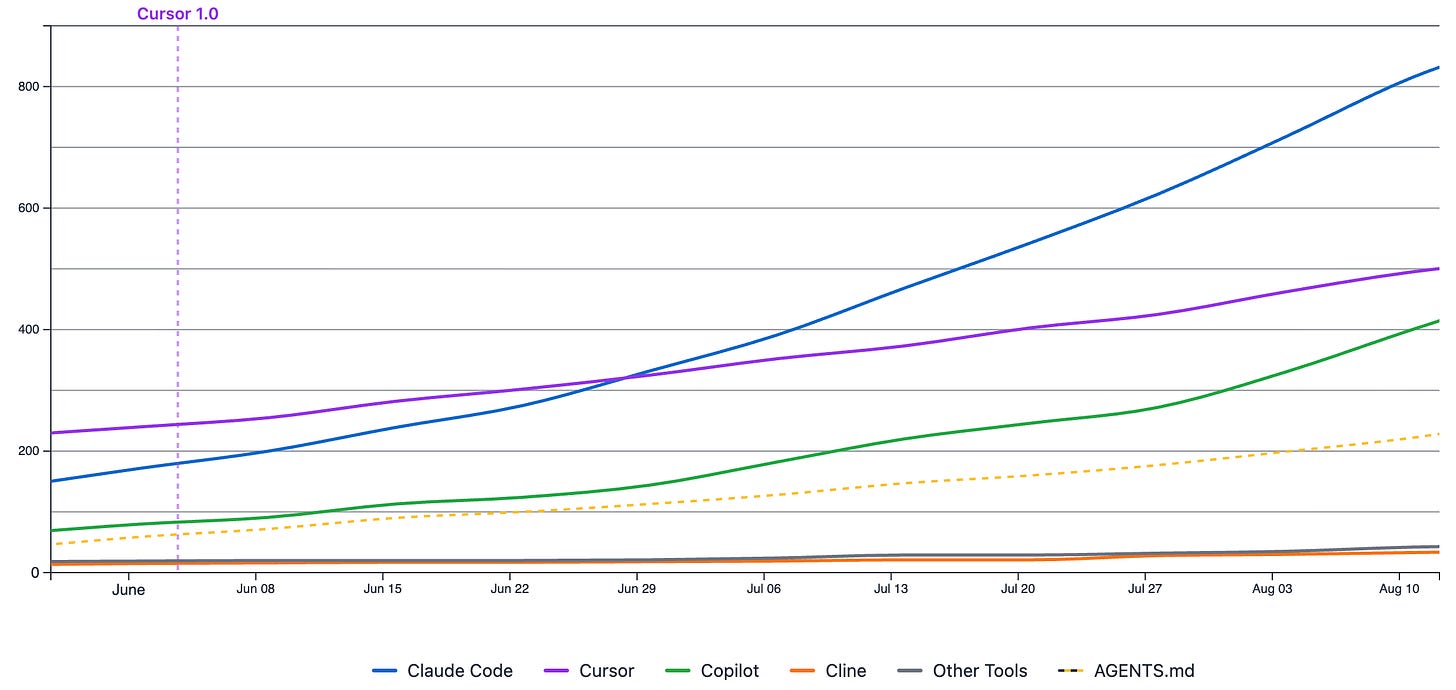

First-mover advantage often dominates developer tool adoption. Cursor had press, community momentum, and network effects when Claude Code launched in February 2025. By late June, among the repositories in this dataset, Claude Code had overtaken Cursor in adoption rates, with its lead increasing at an accelerating rate through October.

Claude Code introduced a different approach to coding agents. As a CLI-based tool, its growth could signal that command-line interfaces are a strong fit for how developers work. The adoption pattern suggests Claude Code offered value beyond what Cursor provided, enough to overcome the switching costs of learning new patterns and converting workflows.

This theory is supported by a fairly common reaction I get when introducing developers to Claude Code:

This reaction captures something the numbers can’t: the moment when a tool clicks with how developers actually want to work. What drove the shift? The interviews mentioned above will help answer that question.

GitHub Copilot showed an adoption uptick starting in August 2025. By late August, Copilot overtook Cursor in the data, reflecting GitHub’s evolution from autocomplete tool to full coding agent with CLI capabilities.

Copilot’s distribution advantages (GitHub interface integration, existing user base, Microsoft’s resources) make it structurally different from standalone agents. If Copilot adoption continues accelerating, it could reshape the competitive landscape significantly.

New Projects Adopt Agents at 3x the Rate

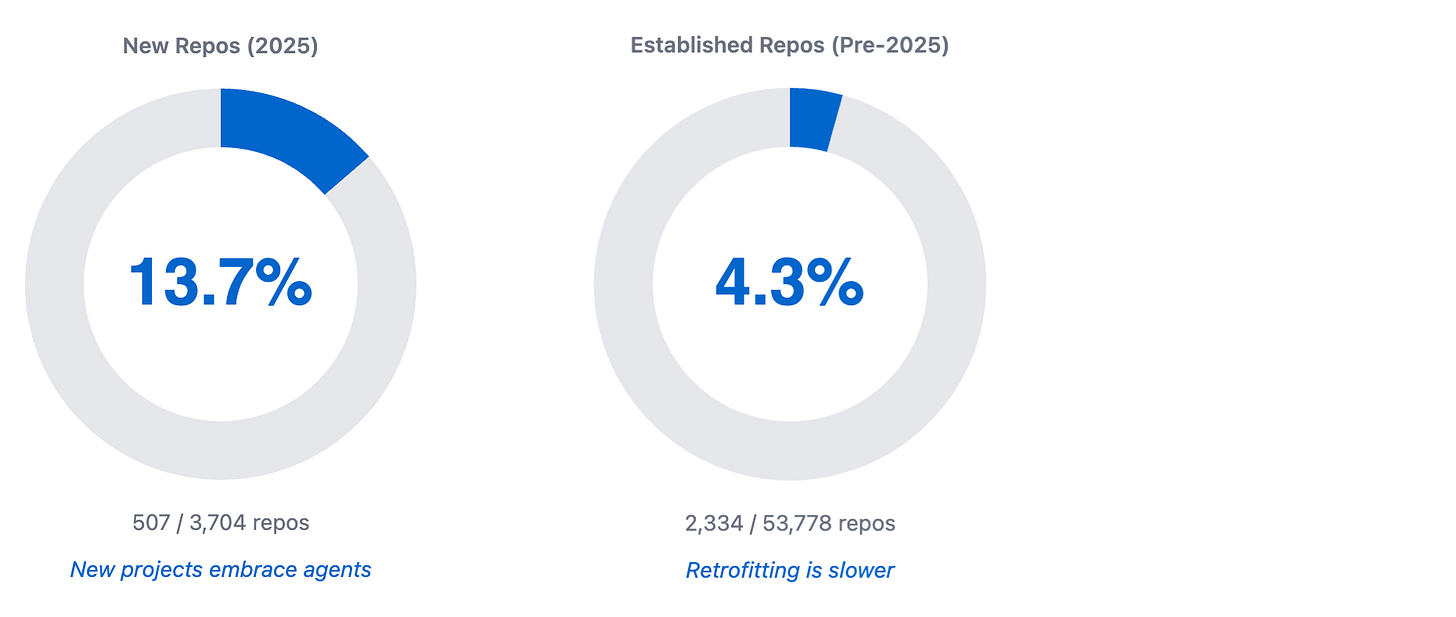

Repositories created in 2025 show 13.7% agent adoption. Projects that predate 2025 show 4.3% adoption, a gap of roughly 3.2x. This pattern isn’t new. Adopting new paradigms has always been easier in greenfield development than in established codebases.
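For readers who want to check the arithmetic, here is a small worked calculation using only the percentages reported in this article. The second step is an inference, not a measured result: it assumes the 4.9% overall rate, the 13.7% rate, and the 4.3% rate are all computed over the same set of repositories, which the article does not state explicitly.

```python
# Rates reported in the article.
NEW_REPO_RATE = 0.137   # repositories created in 2025
OLD_REPO_RATE = 0.043   # repositories that predate 2025
OVERALL_RATE = 0.049    # all scanned repositories


def adoption_ratio(new_rate: float, old_rate: float) -> float:
    """How many times higher the new-repo adoption rate is."""
    return new_rate / old_rate


def implied_new_share(overall: float, new_rate: float, old_rate: float) -> float:
    """Share of new repos implied by a weighted average of the two rates.

    Solves overall = p * new_rate + (1 - p) * old_rate for p.
    Only meaningful if all three rates share one denominator (an assumption).
    """
    return (overall - old_rate) / (new_rate - old_rate)


if __name__ == "__main__":
    print(f"ratio: {adoption_ratio(NEW_REPO_RATE, OLD_REPO_RATE):.2f}x")
    share = implied_new_share(OVERALL_RATE, NEW_REPO_RATE, OLD_REPO_RATE)
    print(f"implied share of 2025 repos: {share:.1%}")
```

Under that assumption, the overall rate sits close to the older-project rate because repositories created in 2025 would make up only a small fraction of the scanned set.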

What’s notable is what the gap might signal: the normalization of AI coding agents. Is the industry trending toward a default where starting a new project means using AI? The data suggests this direction but doesn’t confirm it.

New projects can build around agent capabilities from day one. Established projects carry accumulated patterns, team habits, and workflow conventions that make integration more complex. This doesn’t make established projects wrong. Many teams rightly prioritize stability and proven workflows over adopting pre-stable tools.

The selection effect is real. Projects started in 2025 skew toward developers comfortable with emerging tools and willing to experiment with pre-stable technology.

Multi-Tool Configurations at 13.4%

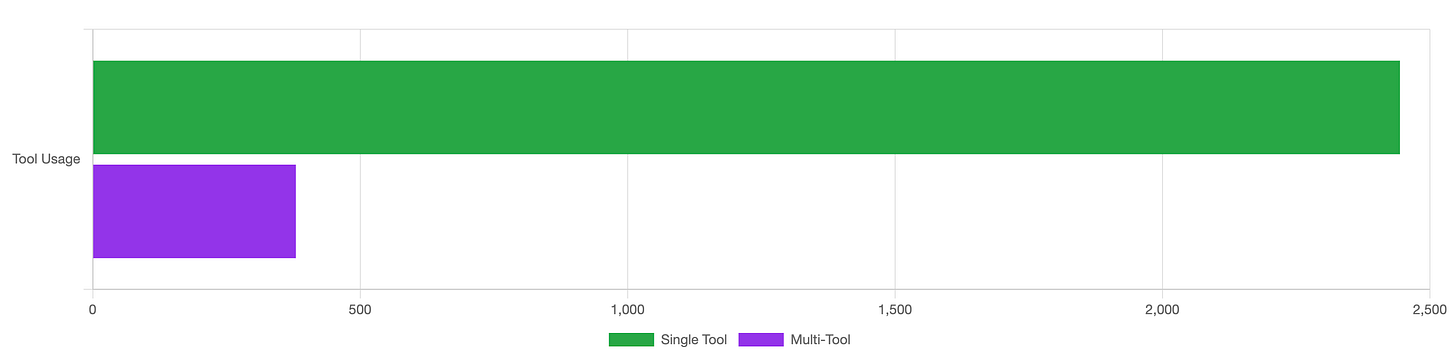

Most developers stick with one agent: 86.6% of repositories with agent configurations use a single tool. The remaining 13.4% run multiple configurations side by side. That 13.4% likely reflects a combination of early experimentation, individual developer preferences on teams, and repositories in transition between tools.
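A minimal sketch of how a multi-tool share like this could be computed from per-repository detection results. The input shape (a mapping from repository name to the set of detected tools) and the function name are hypothetical; the denominator is repositories with at least one agent configuration, matching how the figure is described above.

```python
from typing import Dict, Set


def multi_tool_share(tools_by_repo: Dict[str, Set[str]]) -> float:
    """Fraction of adopting repos that have more than one agent configured.

    Repos with no detected tools are excluded from the denominator.
    Returns 0.0 when there are no adopters at all.
    """
    adopters = [tools for tools in tools_by_repo.values() if tools]
    if not adopters:
        return 0.0
    multi = sum(1 for tools in adopters if len(tools) > 1)
    return multi / len(adopters)


if __name__ == "__main__":
    sample = {
        "org/alpha": {"claude_code"},
        "org/beta": {"cursor", "claude_code"},
        "org/gamma": {"copilot"},
        "org/delta": set(),  # no agent config, not counted as an adopter
    }
    print(f"{multi_tool_share(sample):.1%}")
```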

These are early days for AI coding agents. The multi-tool rate is worth tracking over time. Growth would suggest developers find value in using different agents for different tasks. Decline would indicate convergence on single solutions.

What the Data Can’t Tell Us Yet

Why did Claude Code gain momentum? What makes teams choose multi-tool setups? How do established projects approach agent integration compared to new projects building agent-first? What friction points emerge during adoption?

Repository scanning can’t answer these questions. The interviews I’m conducting with repository maintainers will help answer them.

The scan will continue monthly to track how patterns evolve.

The patterns forming now will shape whether these tools become fundamental development infrastructure or remain task-specific productivity aids.

If you’d like to understand adoption patterns in your own repositories, I’m developing an open source version of the scanning tool you can run locally on your public and private repos. Understanding your team’s position in the broader landscape can inform better tooling decisions. DM me or comment below if you’re interested.