Claude Code's Autonomous Sessions, Anthropic's Agent Evals Guide, Cursor's 47% Token Reduction & much more

The week's curated set of relevant AI Tutorials, Tools, and News

Thanks for joining Altered Craft’s weekly AI review for developers. No splashy model announcements this week, and that’s actually interesting. Instead, we’re seeing practitioners put December’s releases to work: hour-long autonomous coding sessions with Claude Code, practical agent-building guides, and real adoption stories from engineering leaders. You’ll also find new agent frameworks, MCP integration patterns, and a thoughtful look at where different software markets are actually heading. Let’s dig in.

TUTORIALS & CASE STUDIES

How One Engineer Incorporated AI Into Daily Workflows

Estimated read time: 10 min

Skydio engineering director Elliot Graebert shares how he moved past initial AI excitement into sustained daily use. His approach: start by replacing Google searches where ChatGPT answers your exact question. Progress to pair programming with context, then brainstorming unknown unknowns.

The takeaway: A refreshingly honest progression from someone who initially dismissed AI as “cool but not for me.” His framework helps you find where AI actually fits your workflow.

Database Development with AI: Who Adopts First

Estimated read time: 6 min

Also examining AI adoption barriers, this piece predicts that report writers and data engineers will adopt AI tooling before mission-critical database developers. SQL’s stability should help AI in theory, but chaotic legacy databases with cryptic naming create real barriers. The bottleneck: developers don’t document their databases.

Worth noting: If you work with legacy databases, this explains why AI tools feel less helpful than promised. Better documentation becomes a force multiplier.

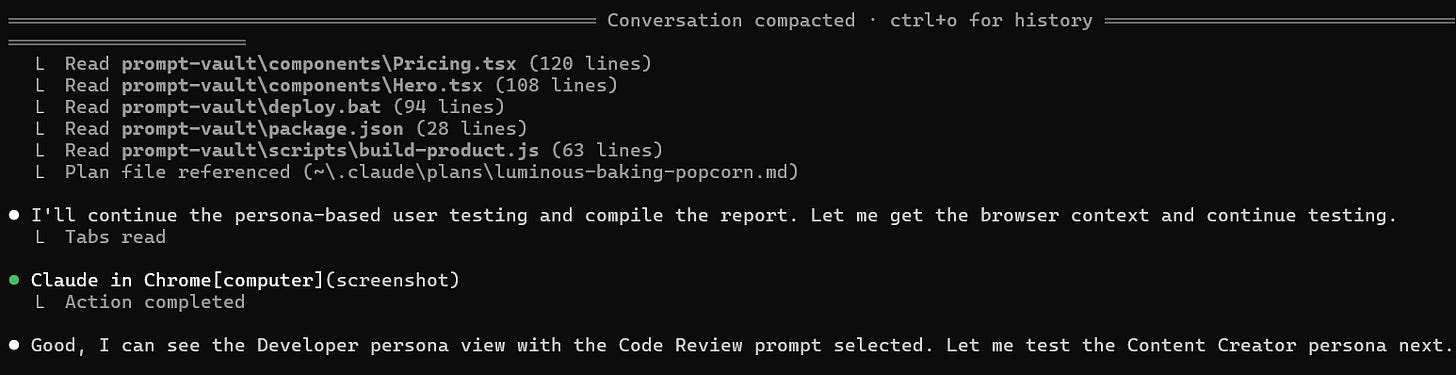

Claude Code Demonstrates Autonomous Hour-Long Coding Sessions

Estimated read time: 7 min

Ethan Mollick describes Claude Code completing an hour-long autonomous session, creating hundreds of files and deploying a functional website. The power comes from context compacting, loadable skills, subagents, and MCP integration. Andrej Karpathy noted he’s never felt this behind as a programmer.

Why this matters: This demonstrates what’s possible when you combine current capabilities thoughtfully. The techniques here, like context compacting and subagents, are patterns you can adopt today.

Complete Guide to Building Agents with Claude SDK

Estimated read time: 9 min

For those ready to build their own agents, the Claude Agent SDK provides eight built-in tools for file operations, terminal commands, and web interaction. This guide walks through building a code review agent that analyzes codebases for bugs with structured JSON feedback and cost tracking.

What this enables: A practical starting point if you’ve been curious about agents but unsure where to begin. The code review example is immediately useful and teaches transferable patterns.

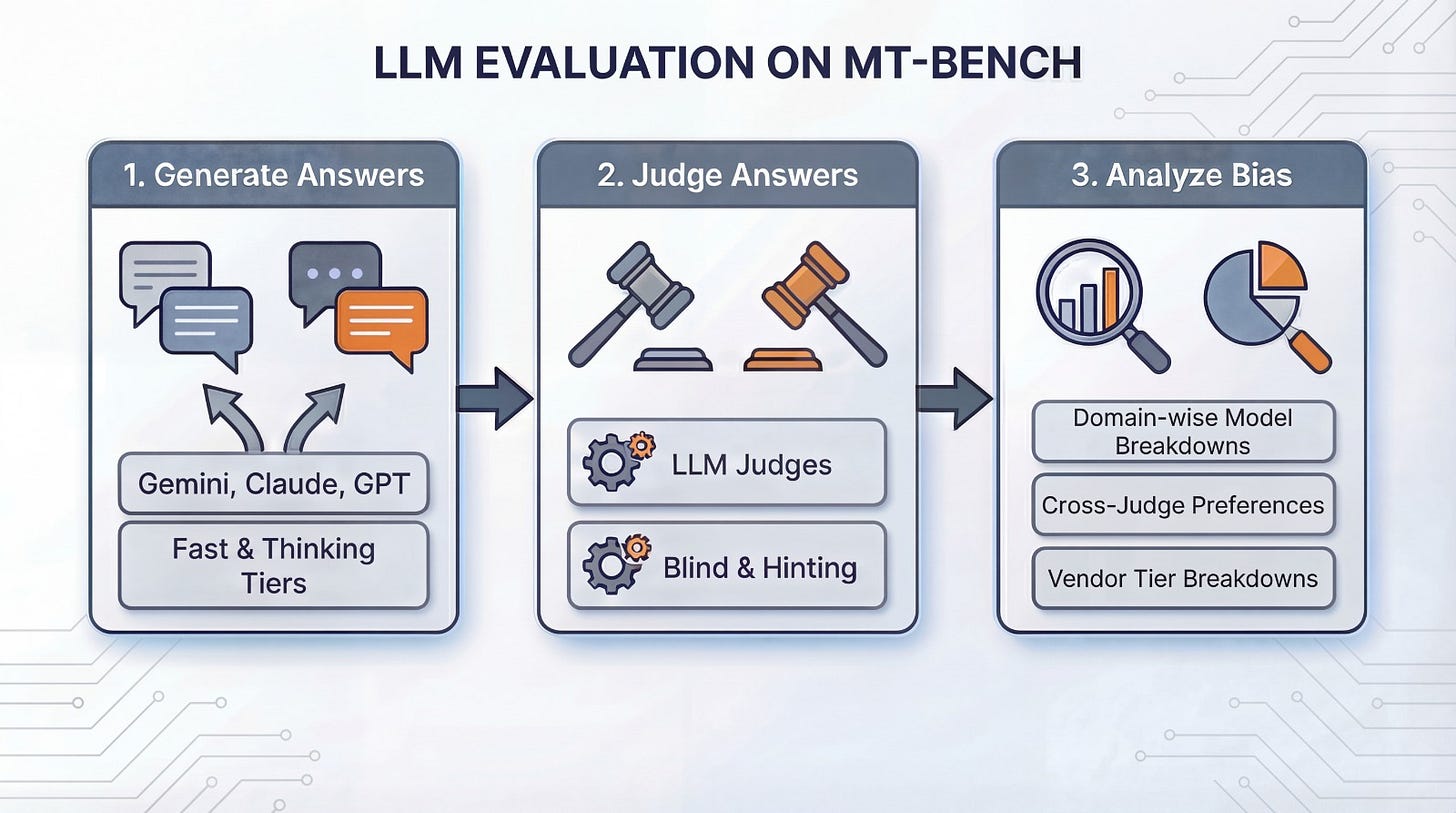

LLM Judges Show Significant Self-Preference Bias

Estimated read time: 8 min

When using LLMs to evaluate other models, self-preference bias emerges. GPT judges favor GPT answers by roughly 47 percentage points above baseline. This research provides a reproducible evaluation pipeline testing three vendors across writing, math, coding, and reasoning domains.

Key point: If you’re using LLM-as-judge for evals, use multiple judge models and blind your evaluations. Single-vendor judging can significantly skew your results.
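
To make the advice concrete, here is a minimal sketch of blinded, multi-judge pairwise voting. It is not the pipeline from the research: the judge functions are hypothetical stand-ins for calls to different vendors' models, and the names `blind_pairwise_vote`, `vendor_x`, and `vendor_y` are illustrative assumptions.

```python
import random
from collections import Counter
from typing import Callable, Dict, List

# A judge takes (question, answer_a, answer_b) and returns "A" or "B".
# In practice each judge would wrap a different vendor's model; these are
# hypothetical stand-ins so the sketch runs without network access.
Judge = Callable[[str, str, str], str]


def longer_answer_judge(question: str, a: str, b: str) -> str:
    return "A" if len(a) >= len(b) else "B"


def shorter_answer_judge(question: str, a: str, b: str) -> str:
    return "A" if len(a) <= len(b) else "B"


def contains_four_judge(question: str, a: str, b: str) -> str:
    return "A" if "4" in a else "B"


def blind_pairwise_vote(
    question: str,
    answers: Dict[str, str],
    judges: List[Judge],
    rng: random.Random,
) -> str:
    """Return the source label whose answer wins the most blinded votes."""
    labels = list(answers)
    if len(labels) != 2:
        raise ValueError("this sketch compares exactly two answers")
    votes: Counter = Counter()
    for judge in judges:
        # Shuffle per judge: the judge sees only "A" and "B", never the
        # vendor name, and position is randomized to avoid order effects.
        order = labels[:]
        rng.shuffle(order)
        verdict = judge(question, answers[order[0]], answers[order[1]])
        winner = order[0] if verdict == "A" else order[1]
        votes[winner] += 1
    # Use an odd number of judges so a two-way vote cannot tie.
    return votes.most_common(1)[0][0]


answers = {"vendor_x": "4", "vendor_y": "The answer is probably five."}
judges = [longer_answer_judge, contains_four_judge, shorter_answer_judge]
print(blind_pairwise_vote("What is 2 + 2?", answers, judges, random.Random(0)))
```

The design choice worth copying is that the vendor label is mapped back only after the verdict, so no judge can recognize and favor its own family by name.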

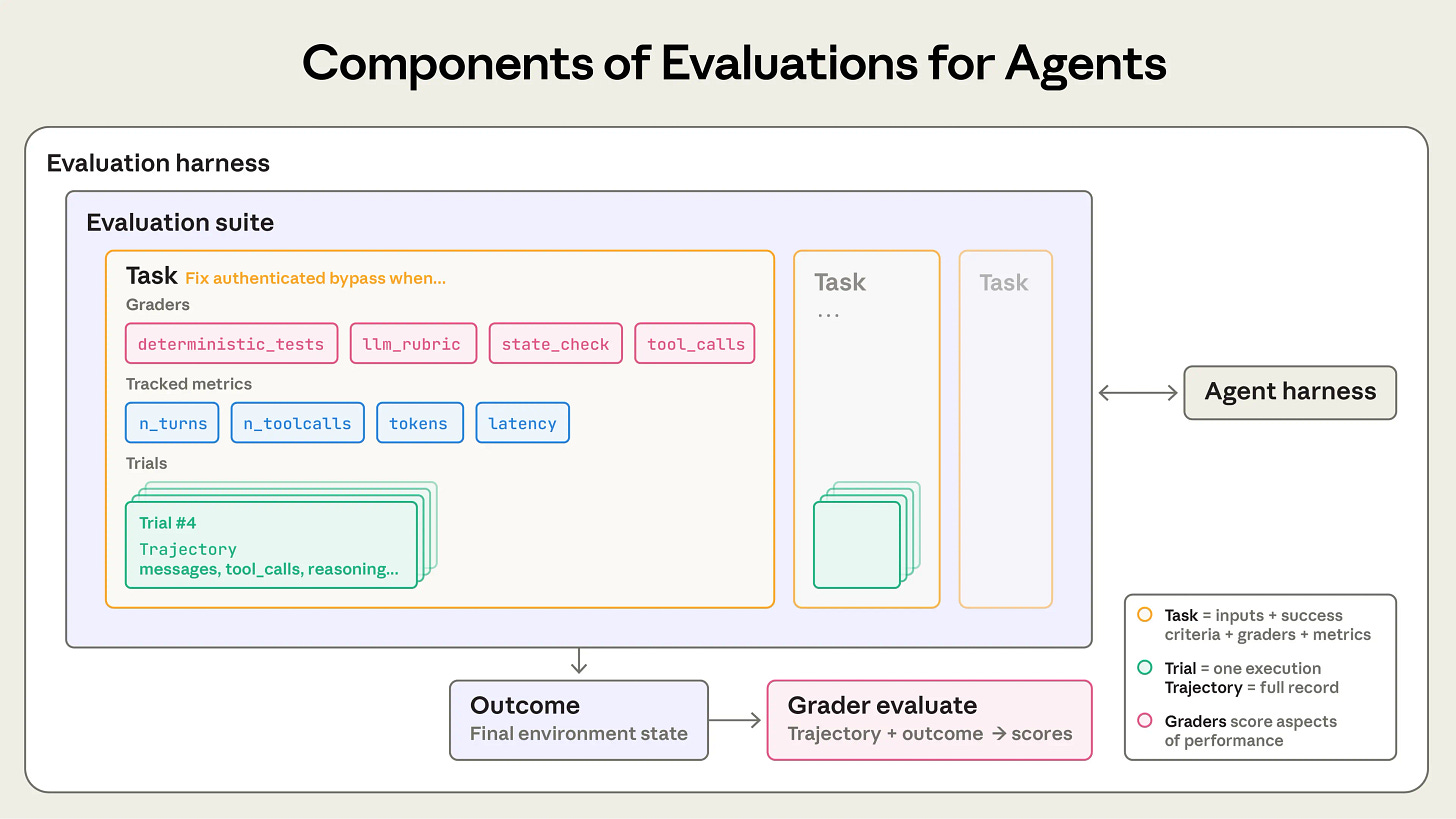

Anthropic’s Engineering Guide to AI Agent Evaluations

Estimated read time: 10 min

Also in the evaluation space, Anthropic shares their methodology combining code-based, model-based, and human graders. Start with 20-50 simple tasks from actual failures. Design graders that evaluate outcomes rather than specific execution paths, since agents may find valid solutions your tests don’t anticipate.

The opportunity: Some teams skip rigorous agent evaluation because it seems complex. This guide makes it accessible with specific approaches for coding, conversational, and research agents.
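
The difference between grading outcomes and grading execution paths can be sketched in a few lines. This is an illustration under assumptions, not code from Anthropic's guide: the `AgentRun` record, the tool names, and the `timeout_seconds` task are all hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AgentRun:
    """Hypothetical record of one agent attempt at a task."""
    tool_calls: List[str]        # the path the agent took
    final_files: Dict[str, str]  # the workspace state it left behind


def path_grader(run: AgentRun) -> bool:
    """Brittle: passes only if the agent used the exact steps we expected."""
    return run.tool_calls == ["read_file", "edit_file"]


def outcome_grader(run: AgentRun) -> bool:
    """Checks only the end state: is the timeout set to 30 in config.json?"""
    try:
        config = json.loads(run.final_files["config.json"])
    except (KeyError, json.JSONDecodeError):
        return False
    return isinstance(config, dict) and config.get("timeout_seconds") == 30


# Two valid solutions reached by different routes, plus one wrong result
# that happens to follow the expected route.
via_editor = AgentRun(["read_file", "edit_file"], {"config.json": '{"timeout_seconds": 30}'})
via_shell = AgentRun(["run_shell"], {"config.json": '{"timeout_seconds": 30, "retries": 2}'})
wrong_value = AgentRun(["read_file", "edit_file"], {"config.json": '{"timeout_seconds": 10}'})

for run in (via_editor, via_shell, wrong_value):
    print(run.tool_calls, path_grader(run), outcome_grader(run))
```

The path grader rejects the shell-based solution and accepts the wrong value, while the outcome grader gets both right.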

TOOLS

agent.cpp: Build Local AI Agents in C++

Estimated read time: 5 min

Mozilla AI releases a C++ library for building autonomous agents that run language models locally using llama.cpp. The framework provides an agent loop with lifecycle callbacks for message manipulation and error handling. Supports GGUF-format quantized models with CUDA and OpenBLAS acceleration.

What’s interesting: For teams needing agents without cloud dependencies, or embedded scenarios where Python isn’t viable, this fills a real gap in the ecosystem.

Youtu-Agent: Tencent’s Modular Framework for Autonomous Agents

Estimated read time: 4 min

Also in the agent framework space, Tencent releases Youtu-Agent supporting SimpleAgent (ReAct-style) and OrchestraAgent (multi-agent coordination). YAML-based configuration with pre-built toolkits for web search, file manipulation, and code execution. Includes standardized benchmarking for automated evaluation.

Worth noting: The combination of YAML configuration and built-in benchmarking makes this approachable for teams wanting to experiment with agents without deep framework investment.

MCPs Unlock Cross-Tool Workflows, Not CLI Replacement

Estimated read time: 6 min

Developers often dismiss MCPs when comparing them to CLI tools, but that comparison misses the value proposition. The real power emerges in cross-tool integration scenarios, like discussing bugs in Slack, analyzing code, and opening PRs without switching applications.

The context: If you’ve wondered why MCPs matter when CLIs work fine, this reframes the question. It’s about workflow integration across tools, not replacing any single one.

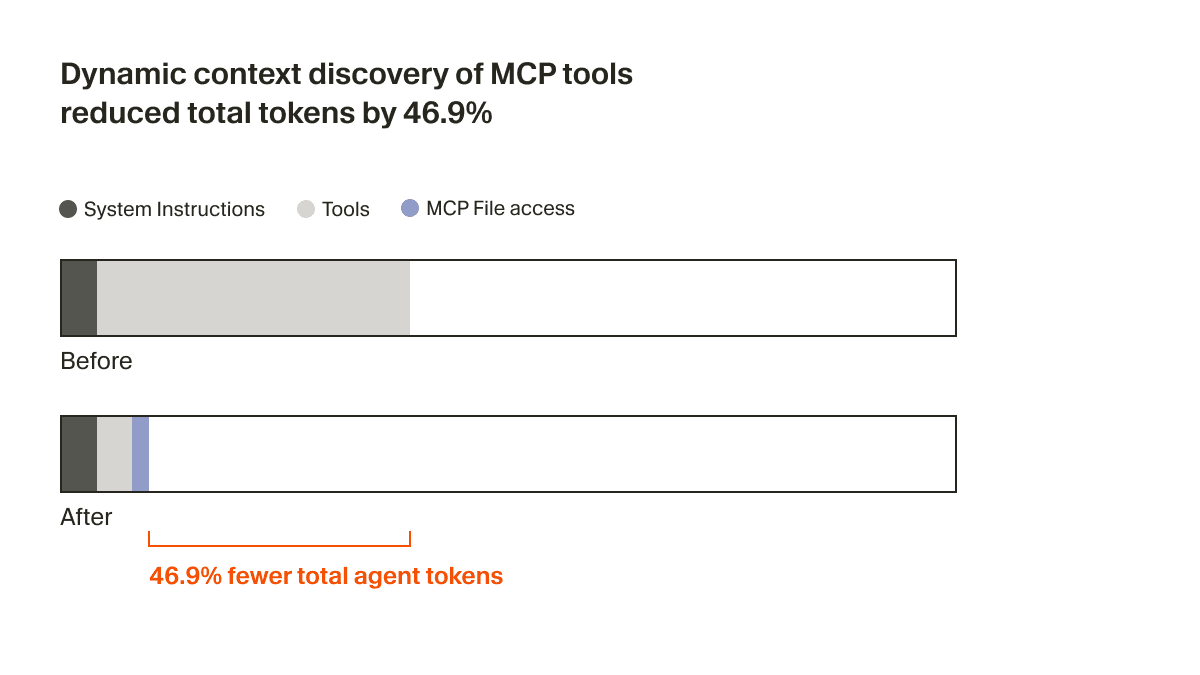

Cursor’s Dynamic Context Discovery Reduces Agent Tokens by 47%

Estimated read time: 5 min

Addressing some concerns from our coverage of Anton Morgunov’s Cursor critique[1] last week, Cursor shifts from loading all context upfront to on-demand retrieval. Testing showed MCP tool optimization reduced total agent tokens by 46.9%. The approach includes writing long outputs to files for selective reading and storing skills as discoverable files.

Why now: As context windows grow, smart retrieval matters more than cramming everything in. This pattern applies whether you’re building agents or just using them effectively.
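
The "write long outputs to files" idea can be sketched as follows. This is a rough illustration of the pattern, not Cursor's implementation: the function names, the 200-character threshold, and the pointer message format are all assumptions.

```python
import tempfile
from pathlib import Path

PREVIEW_CHARS = 200  # hypothetical threshold for what stays in context


def compact_tool_output(
    output: str, spill_dir: Path, name: str, preview_chars: int = PREVIEW_CHARS
) -> str:
    """Return short outputs unchanged; spill long ones to disk and return
    a short preview plus a pointer the agent can follow on demand."""
    if len(output) <= preview_chars:
        return output
    path = spill_dir / f"{name}.txt"
    path.write_text(output, encoding="utf-8")
    return (
        f"{output[:preview_chars]}\n"
        f"[truncated: {len(output)} chars total, full output at {path}]"
    )


def read_slice(path: Path, start_line: int, end_line: int) -> str:
    """Selective read: return lines start_line..end_line (1-indexed, inclusive)."""
    lines = path.read_text(encoding="utf-8").splitlines()
    return "\n".join(lines[start_line - 1 : end_line])


with tempfile.TemporaryDirectory() as tmp:
    log = "\n".join(f"line {i}" for i in range(1, 501))
    message = compact_tool_output(log, Path(tmp), "build_log")
    print(message.splitlines()[-1])
    print(read_slice(Path(tmp) / "build_log.txt", 10, 12))
```

Only the preview and pointer enter the context; the agent pays for the rest only when it decides a specific slice is worth reading.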

[1] How Vibe Coding Killed Cursor’s Utility

Agentic Coding Flywheel: VPS to AI Dev Environment in 30 Minutes

Estimated read time: 6 min

Transform a fresh Ubuntu VPS into a fully-configured AI development environment with a single command. Pre-configures Claude Code, Codex CLI, Gemini CLI, 30+ developer tools, and agent coordination capabilities including multi-agent mail. Idempotent design means safe re-runs.

What this enables: Useful for spinning up disposable development environments or onboarding new team members with a consistent AI-ready setup. The idempotent design is thoughtful.
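
For readers unfamiliar with the term, an idempotent setup step checks the current state before changing it, so running it twice leaves the same result as running it once. The sketch below shows the idea with a hypothetical `ensure_line` helper; it is not taken from the project's installer.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Append `line` to `path` only if it is not already present.

    Returns True if the file was changed, False if it was already correct.
    """
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    if line in text.splitlines():
        return False
    # Avoid gluing the new line onto an unterminated last line.
    separator = "" if text == "" or text.endswith("\n") else "\n"
    path.write_text(text + separator + line + "\n", encoding="utf-8")
    return True


with tempfile.TemporaryDirectory() as tmp:
    rc_file = Path(tmp) / "shellrc"
    export = 'export PATH="$HOME/.local/bin:$PATH"'
    print(ensure_line(rc_file, export))  # first run changes the file
    print(ensure_line(rc_file, export))  # second run is a no-op
```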

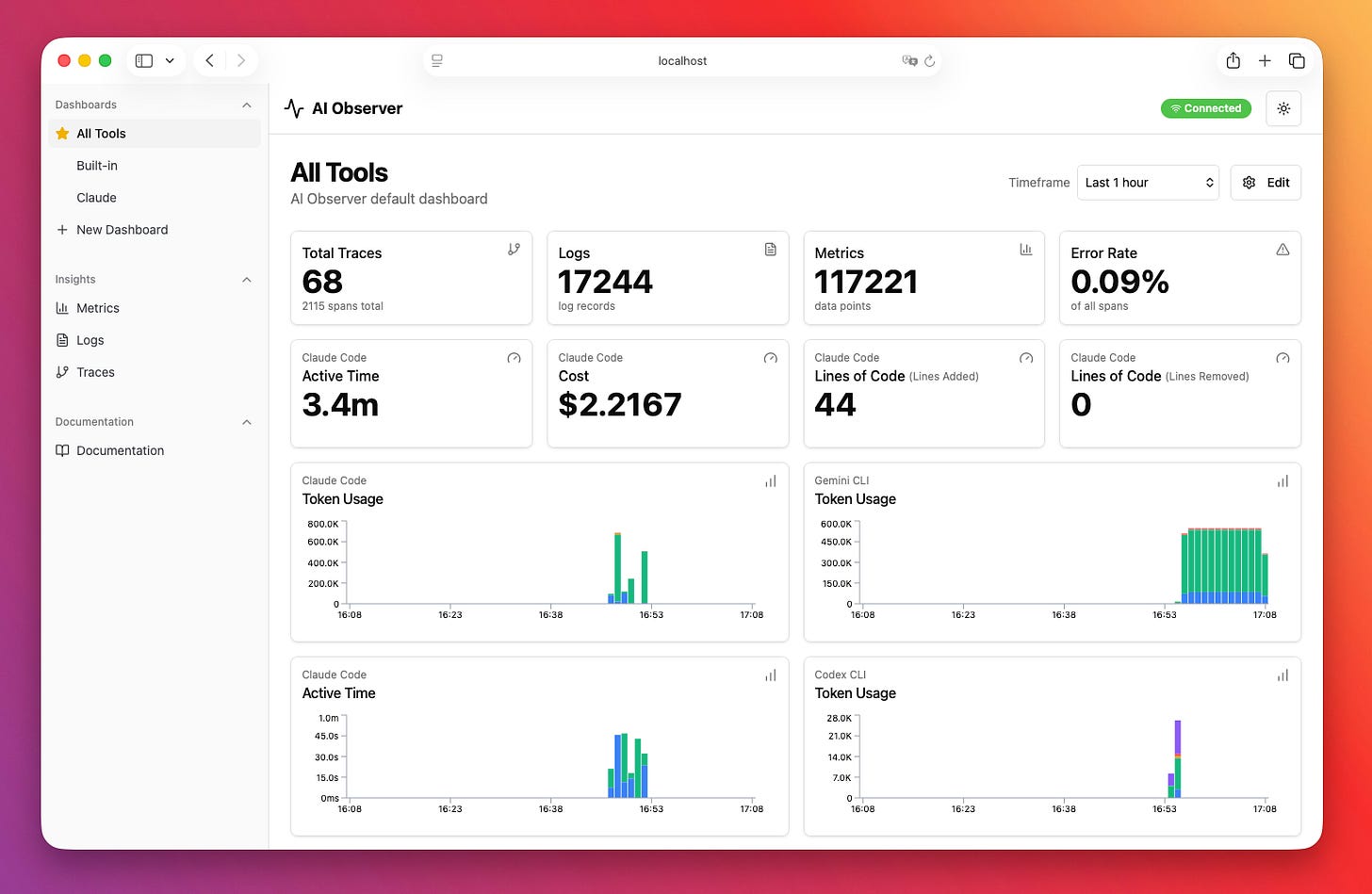

AI Observer: Self-Hosted Telemetry for AI Coding Tools

Estimated read time: 4 min

Monitor Claude Code, Gemini CLI, and Codex CLI usage with this self-hosted observability backend. The 54MB binary includes pricing for 67+ models, real-time WebSocket dashboard updates, and DuckDB storage. Keep telemetry local without third-party dependencies.

The opportunity: Finally track what AI tools actually cost and how you use them. Particularly valuable for teams trying to understand adoption patterns or justify AI tooling budgets.

Liquid AI Releases LFM2.5: Powerful 1.2B Parameter On-Device Model

Estimated read time: 5 min

Liquid AI’s LFM2.5-1.2B substantially outperforms competitors at its size, achieving 86.23 on IFEval versus Llama 3.2 1B’s 52.37. Specialized variants for Japanese, vision-language, and audio processing. Open-weight models support llama.cpp, MLX, vLLM, and ONNX inference.

Why this matters: On-device models keep improving faster than expected. This opens possibilities for offline applications, privacy-sensitive use cases, and reduced inference costs.

Claude Code Team Open Sources Code Simplifier Agent

Estimated read time: 2 min

Anthropic’s Claude Code team releases their internal code simplifier as an installable plugin. Clean up complex PRs or refactor after long coding sessions via claude plugin install code-simplifier. Designed to make code more maintainable by simplifying structure and logic.

Key point: This is what Anthropic’s own team uses internally. A useful addition to your Claude Code toolkit, especially after extended agentic sessions produce sprawling code.

NEWS & EDITORIALS

AI Has Moved Beyond “Just Predicting the Next Word”

Estimated read time: 6 min

Former OpenAI safety researcher Steven Adler challenges the dismissal that AI systems merely predict next words. Modern systems are “path-finders” using reinforcement learning and reasoning models that tackle problems through backtracking and verification. Both DeepMind and OpenAI achieved gold-medal Math Olympiad performance.

Worth reading: A useful article for conversations with skeptics who dismiss AI capabilities. Adler provides concrete examples that move past the “stochastic parrot” framing.

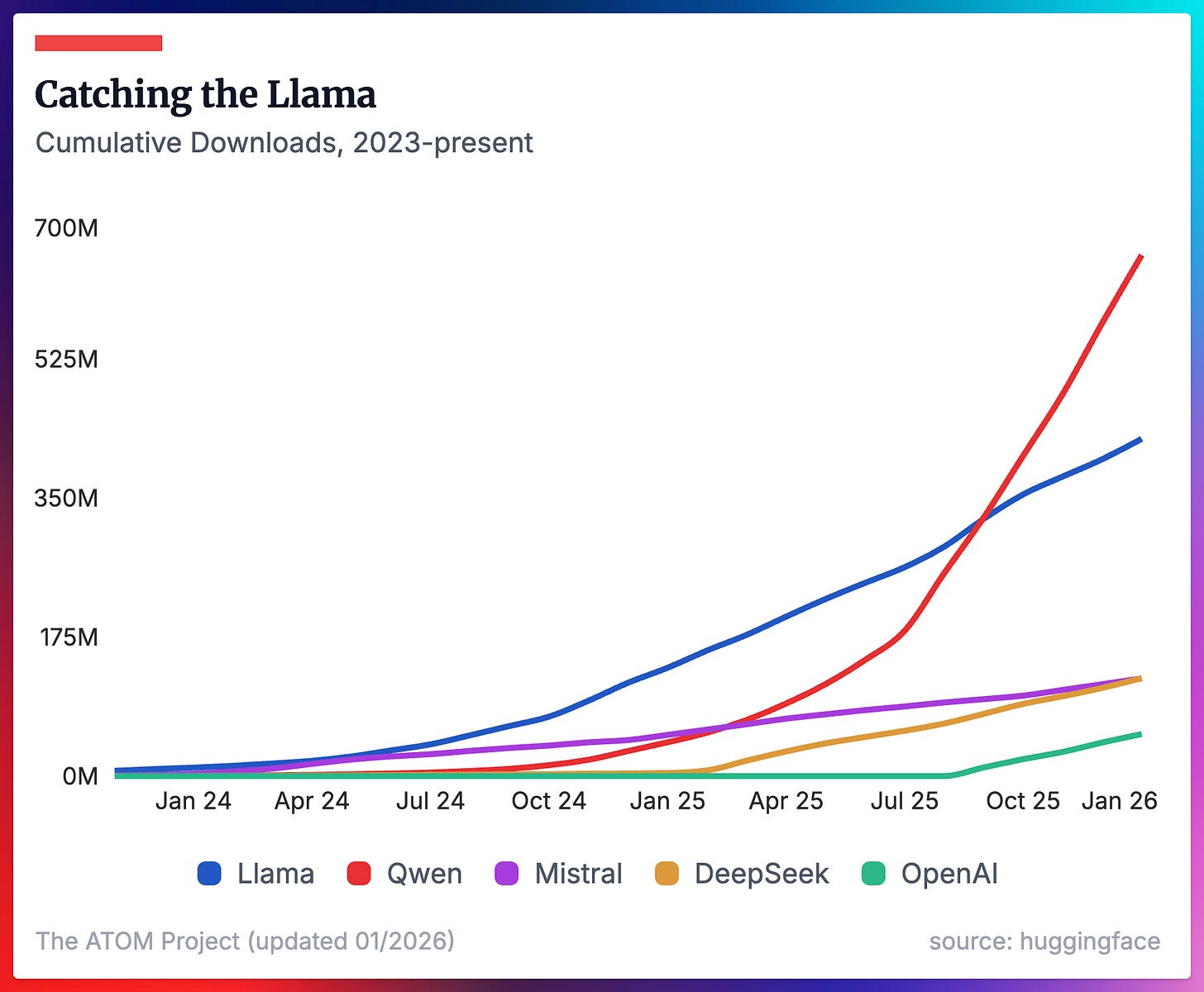

8 Plots Reveal Chinese Dominance in Open AI Models

Estimated read time: 7 min

Qwen has become the default open model choice, with Chinese models dominating adoption metrics. Despite discontinuation, older Llama models remain the most downloaded Western option due to installed base momentum. DeepSeek leads at larger scales, revealing Qwen’s weakness there.

The context: Understanding where open models come from affects your technology choices. DeepSeek’s strength at scale represents a potential opening for teams building larger customized systems.

Eight Software Markets Face Divergent AI Disruption

Estimated read time: 7 min

Continuing the market analysis, this piece argues that different software markets will respond differently to AI coding tools. Enterprise internal tools see backlogs become buildable. Personal software will explode as skill barriers collapse. Safety-critical systems actively resist AI adoption due to certification requirements.

Key point: Your AI strategy depends heavily on which market you’re in. The “AI will change everything” narrative obscures that different domains face very different trajectories.

Software Acceleration Risks Breaking Feedback Loops

Estimated read time: 8 min

Shifting from market dynamics to development process, this essay warns that accelerating individual tasks risks desynchronizing the rest of the workflow. Code reviews aren’t just error detection; they spread knowledge and enforce norms. Removing these touchpoints may seem faster locally but breaks critical feedback pathways.

The takeaway: A thoughtful counterpoint to “AI makes everything faster” enthusiasm. Sometimes strategically slowing one activity accelerates others by maintaining stability across interconnected workflows.