Build Better AI Agent Tools Using Evaluations

PLUS - SemTools Supercharges CLI Agents for Document Search

Welcome back to AlteredCraft's Delta Notes! Thanks for joining us. This edition highlights practical techniques for building better AI agents, from Anthropic's tool-evaluation methods to new MCP servers that improve coding accuracy. We also look at why startups might want to train their own models and how semantic search is changing CLI-based document analysis. Let's dive in.

TUTORIALS & CASE STUDIES

Build Better AI Agent Tools Using Evaluations

Estimated read time: 15 min

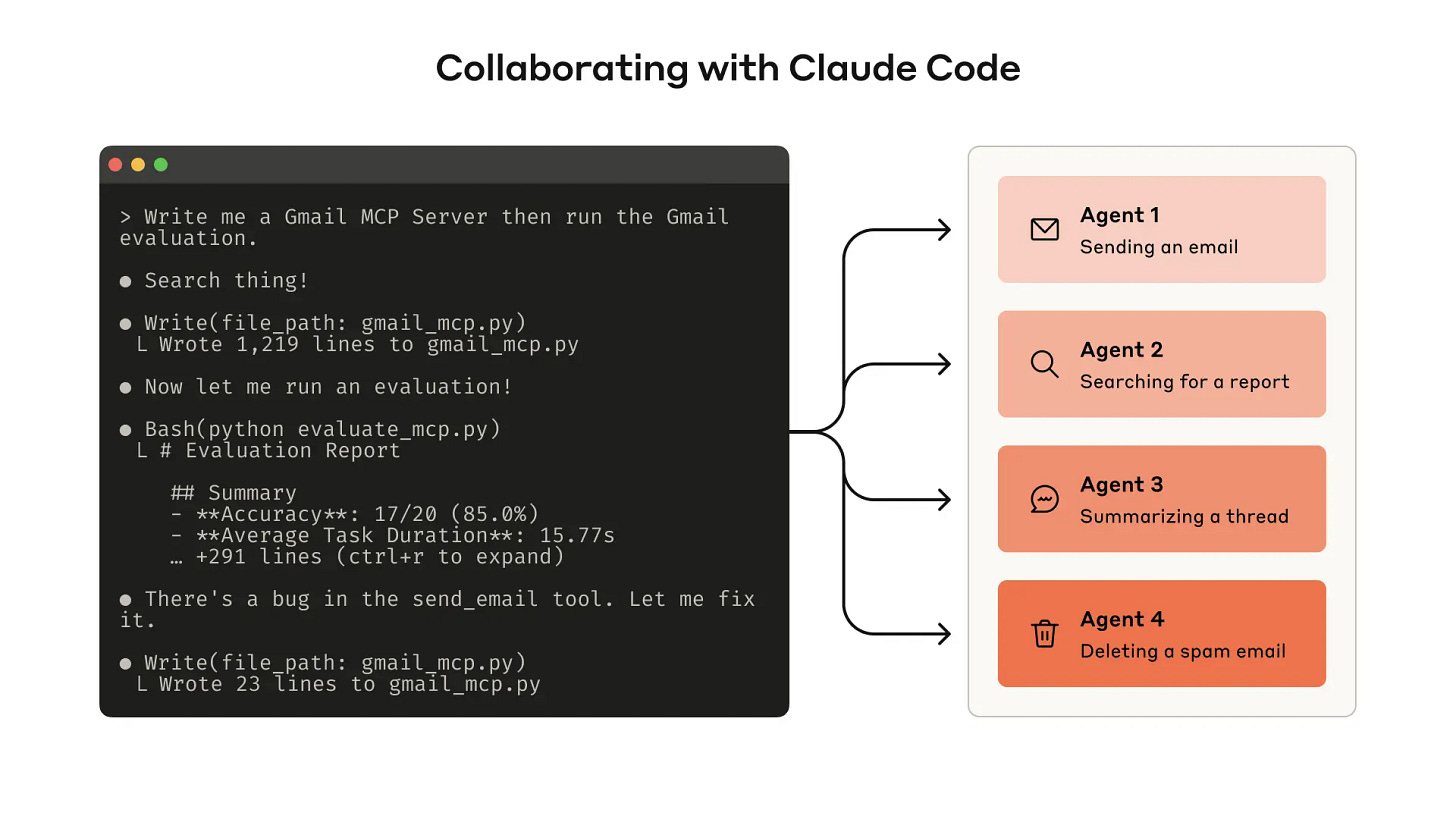

Anthropic shares techniques for writing tools that AI agents can use reliably. Learn how to prototype, evaluate, and optimize your Model Context Protocol (MCP) tools in collaboration with Claude Code. Key principles include building a few focused tools instead of many generic ones, returning meaningful context, and prompt-engineering tool descriptions to maximize agent performance.
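
To make those principles concrete, here is a minimal Python sketch, not Anthropic's code. It declares a single focused `search_contacts` tool using the `name`/`description`/`input_schema` fields the Claude Messages API uses for tool definitions. The tool returns readable fields rather than opaque IDs, and a toy eval checks its outputs. The tool name, data, and eval cases are all hypothetical. In an agent-driven eval, the model would choose the tool calls and you would score its final answers. Here the harness calls the tool directly to keep the example self-contained.

```python
# Hypothetical tool, data, and eval cases; only the tool-definition shape
# (name / description / input_schema) follows the Claude Messages API format.

# Hypothetical store: internal IDs are opaque, names and teams are meaningful.
CONTACTS = {
    "c-7f3a": {"name": "Ada Lovelace", "email": "ada@example.com", "team": "Research"},
    "c-91bd": {"name": "Alan Turing", "email": "alan@example.com", "team": "Security"},
    "c-22e0": {"name": "Grace Hopper", "email": "grace@example.com", "team": "Compilers"},
}

# One focused tool instead of a generic "list everything" endpoint.
SEARCH_CONTACTS_TOOL = {
    "name": "search_contacts",
    "description": (
        "Search the user's contacts by name or team. Use this before messaging "
        "someone. Returns at most `limit` matches with name, email, and team, "
        "or an empty list if nothing matches."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "Name or team, case-insensitive."},
            "limit": {"type": "integer", "description": "Maximum matches to return."},
        },
        "required": ["query"],
    },
}


def search_contacts(query: str, limit: int = 5) -> list[dict]:
    """Return only matching contacts, with human-readable fields instead of raw IDs."""
    q = query.lower()
    matches = [
        {"name": c["name"], "email": c["email"], "team": c["team"]}
        for c in CONTACTS.values()
        if q in c["name"].lower() or q in c["team"].lower()
    ]
    return matches[:limit]


# Each case pairs a tool input with the contact that should appear in the result.
EVAL_CASES = [
    ({"query": "ada"}, "Ada Lovelace"),
    ({"query": "security"}, "Alan Turing"),
    ({"query": "compilers", "limit": 1}, "Grace Hopper"),
]


def run_eval() -> float:
    """Fraction of cases where the expected contact appears in the tool output."""
    passed = 0
    for tool_input, expected_name in EVAL_CASES:
        names = [m["name"] for m in search_contacts(**tool_input)]
        passed += expected_name in names
    return passed / len(EVAL_CASES)


if __name__ == "__main__":
    print(f"tool eval accuracy: {run_eval():.0%}")
```

When an eval case fails, the fix is often in the tool's description or output format rather than its logic, which is why the description is written as carefully as the code.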

Build Custom AI Research Agents for Tech Intelligence

Estimated read time: 10 min

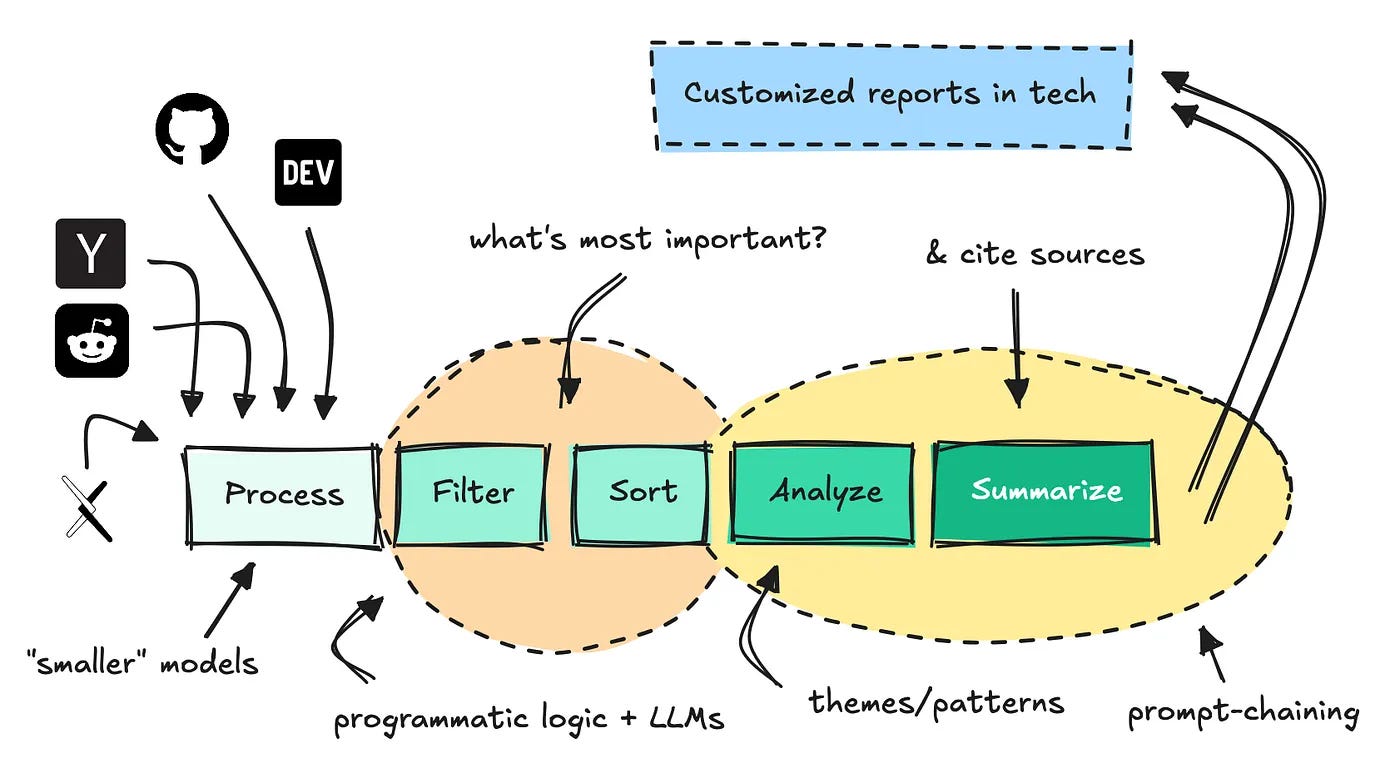

Learn how to create specialized AI agents that scout tech forums and aggregate millions of texts to deliver personalized insights. This tutorial demonstrates building a research agent using prompt chaining with small and large language models, data caching strategies, and structured workflows that outperform generic ChatGPT searches for developer-specific tech trend analysis.
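
Here is a minimal sketch of the chaining-plus-caching pattern. It assumes hypothetical `small_model` and `large_model` stubs in place of real API calls. A cheap model triages each post, only the survivors reach the expensive model, and a hash-keyed cache stops repeated prompts from being sent twice. The stubs' logic is placeholder only; the tutorial's actual prompts and models are not reproduced here.

```python
import hashlib
import json
from typing import Callable

# Call counters (hypothetical) so you can see the cache working.
CALLS = {"small": 0, "large": 0}
_cache: dict[str, str] = {}


def cached(stage: str, prompt: str, model: Callable[[str], str]) -> str:
    """Memoize a model call by stage + prompt hash (in memory here; on disk in practice)."""
    key = hashlib.sha256(f"{stage}:{prompt}".encode()).hexdigest()
    if key not in _cache:
        _cache[key] = model(prompt)
    return _cache[key]


def small_model(prompt: str) -> str:
    """Hypothetical cheap model: flags a post as relevant if it mentions the topic."""
    CALLS["small"] += 1
    topic_line, post_line = prompt.split("\n", 1)
    topic = topic_line.removeprefix("Topic: ").lower()
    post = post_line.removeprefix("Post: ").lower()
    return json.dumps({"relevant": topic in post})


def large_model(prompt: str) -> str:
    """Hypothetical expensive model: a real one would write the trend report."""
    CALLS["large"] += 1
    n = prompt.count("\n- ")
    return f"Summary of {n} relevant posts."


def research(posts: list[str], topic: str) -> str:
    # Stage 1: the small model triages every post (cheap, easy to parallelize).
    relevant = [
        post for post in posts
        if json.loads(cached("triage", f"Topic: {topic}\nPost: {post}", small_model))["relevant"]
    ]
    # Stage 2: only the filtered posts reach the large model, in a single call.
    bullets = "".join(f"\n- {post}" for post in relevant)
    prompt = f"Write a trend report on {topic} from these posts:{bullets}"
    return cached("synthesize", prompt, large_model)


if __name__ == "__main__":
    posts = ["Rust 1.80 released", "New WASM runtime", "Rust in the kernel"]
    print(research(posts, "rust"))
    print(research(posts, "rust"), CALLS)  # second run is served entirely from cache
```

The design choice is the ordering: the per-item work, which grows with the number of texts, goes to the cheap model, while the large model runs once per report.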

Practical Guide to Choosing Open Source LLMs

Estimated read time: 10 min

This guide explains how to select the right open source LLM for your specific project needs, moving beyond benchmark scores to practical considerations like hardware constraints, deployment complexity, and real-world performance. It introduces AI Sheets for side-by-side model testing with actual data, covers VRAM requirements for different model sizes, and compares inference providers including Groq and Cerebras for production deployments.
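
The VRAM arithmetic is simple enough to sketch. This Python helper multiplies parameter count by bytes per parameter. The 20% overhead for KV cache, activations, and runtime buffers is our own rough assumption, not a figure from the guide, and real usage grows with context length and batch size.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def estimated_vram_gb(params_billions: float, dtype: str, overhead: float = 0.2) -> float:
    """Weights plus an assumed fractional allowance for KV cache and runtime overhead."""
    return weight_memory_gb(params_billions, dtype) * (1 + overhead)


if __name__ == "__main__":
    for size in (7, 13, 70):
        for dtype in ("fp16", "int4"):
            print(f"{size}B @ {dtype}: ~{estimated_vram_gb(size, dtype):.1f} GB")
```

By this estimate, a 7B model needs about 14 GB for FP16 weights alone, versus about 3.5 GB at 4-bit. That gap is why quantization matters so much on consumer GPUs.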

OpenAI Studies Why Language Models Hallucinate

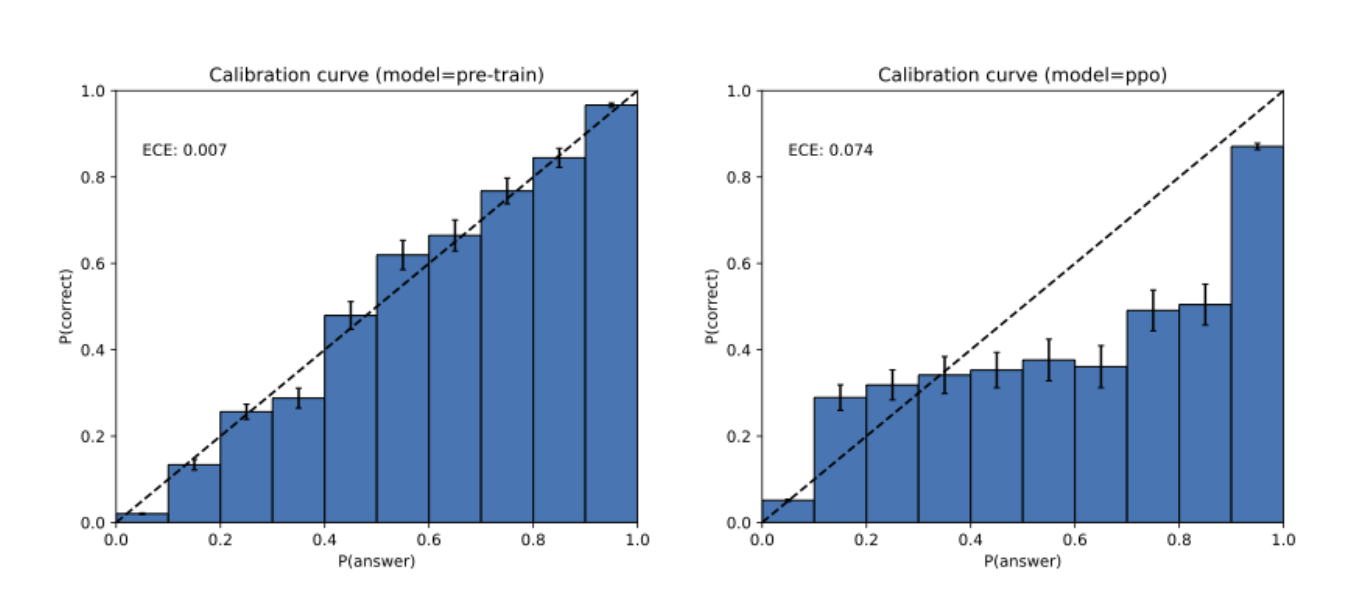

Estimated read time: 8 min

OpenAI's new research argues that language models hallucinate largely because standard training and evaluation methods reward guessing over admitting uncertainty. For developers building RAG systems and AI agents, this suggests prioritizing confidence scores and uncertainty handling in production applications. The paper also challenges common misconceptions, arguing for example that a smaller model can find it easier than a larger one to recognize the limits of its knowledge.
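
One way to put uncertainty handling into practice is confidence-thresholded answering. The sketch below assumes a scoring rule where a correct answer earns 1 point, "I don't know" earns 0, and a wrong answer costs t/(1-t) points. Under that rule, answering beats abstaining in expectation only when the probability of being correct exceeds t. The sketch also assumes the `confidence` value is calibrated, which in practice you would have to measure.

```python
ABSTAIN = "I don't know"


def wrong_penalty(t: float) -> float:
    """Assumed penalty for a wrong answer at confidence threshold t (0 < t < 1)."""
    return t / (1 - t)


def expected_score(p_correct: float, t: float) -> float:
    """Expected score of answering when the answer is right with probability p_correct."""
    return p_correct * 1.0 - (1 - p_correct) * wrong_penalty(t)


def respond(answer: str, confidence: float, t: float = 0.75) -> str:
    """Answer only when confidence clears the threshold; otherwise abstain (score 0)."""
    return answer if confidence > t else ABSTAIN


if __name__ == "__main__":
    for p in (0.5, 0.75, 0.9):
        print(p, round(expected_score(p, 0.75), 3), respond("Paris", p))
```

For example, with t = 0.75 a wrong answer costs 3 points. An answer that is right 90% of the time then has expected score 0.9 - 0.1 × 3 = 0.6, while one that is right 75% of the time breaks even.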

Disciplined AI Software Development Methodology

Estimated read time: 15 min

A structured methodology for collaborating with AI on software projects addresses common issues like code bloat and architectural drift. The four-stage approach uses systematic constraints, including 150-line file limits, mandatory benchmarking infrastructure, and focused component implementation. It also includes practical tools, example projects, and model-specific guidance for developers using AI assistants.
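
The 150-line limit is easy to enforce mechanically. Below is a minimal sketch of a CI or pre-commit check in Python. The `*.py` glob, the nonzero exit code on failure, and the script shape are our assumptions, not part of the published methodology.

```python
import sys
from pathlib import Path

MAX_LINES = 150  # per-file limit from the methodology


def oversized_files(root: Path, pattern: str = "*.py", max_lines: int = MAX_LINES) -> list[tuple[Path, int]]:
    """Return (path, line_count) for every matching file longer than max_lines."""
    results = []
    for path in sorted(root.rglob(pattern)):
        if path.is_file():
            with path.open(encoding="utf-8", errors="replace") as f:
                count = sum(1 for _ in f)
            if count > max_lines:
                results.append((path, count))
    return results


def main(argv: list[str]) -> int:
    """Report offenders; return 1 (fail the check) if any file is over the limit."""
    root = Path(argv[1]) if len(argv) > 1 else Path(".")
    offenders = oversized_files(root)
    for path, count in offenders:
        print(f"{path}: {count} lines (limit {MAX_LINES})")
    return 1 if offenders else 0


if __name__ == "__main__":
    sys.exit(main(sys.argv))
```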

TOOLS

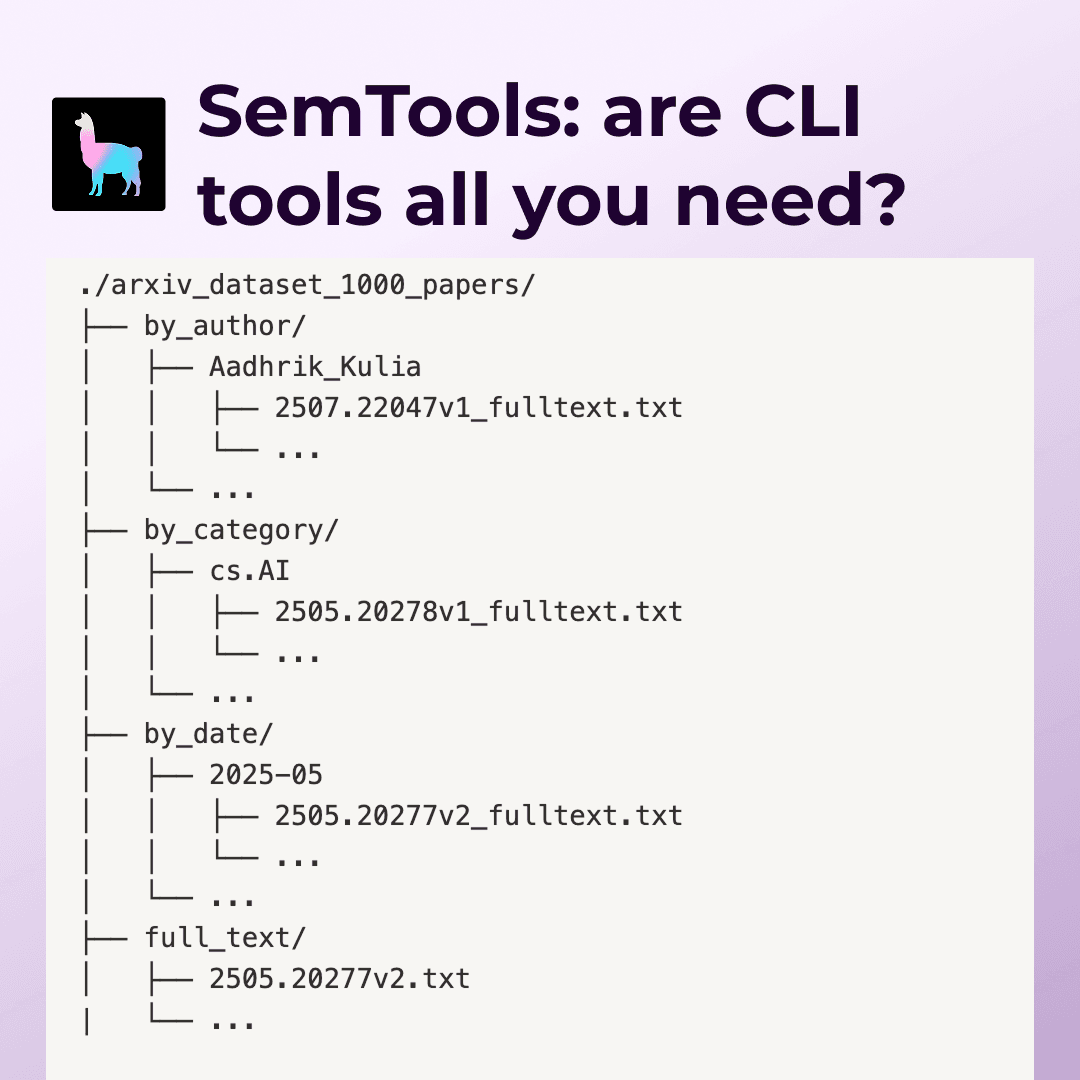

SemTools Supercharges CLI Agents for Document Search

Estimated read time: 8 min

LlamaIndex introduces SemTools, a CLI toolkit that gives coding agents like Claude Code semantic search capabilities. In tests on 1,000 arXiv papers, combining Unix tools with semantic search significantly improved the accuracy and detail of document analysis compared with grep-based approaches alone, making CLI-based agents a powerful alternative to custom RAG implementations.
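
To see why a semantic step helps, compare exact matching with ranked similarity. In this Python sketch, `grep` mimics a regex filter, and `embed` is a toy character-trigram vector standing in for a real embedding model. That is an assumption: the toy captures spelling overlap, not meaning. None of this is SemTools' own code or CLI interface.

```python
import math
import re
from collections import Counter


def grep(lines: list[str], pattern: str) -> list[str]:
    """Keep lines matching a regex, case-insensitively (like `grep -iE`)."""
    rx = re.compile(pattern, re.IGNORECASE)
    return [line for line in lines if rx.search(line)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: counts of lowercase character trigrams."""
    t = f"  {text.lower()}  "
    return Counter(t[i:i + 3] for i in range(len(t) - 2))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(lines: list[str], query: str, top_k: int = 3, threshold: float = 0.2) -> list[tuple[float, str]]:
    """Rank lines by similarity to the query; keep at most top_k scoring >= threshold."""
    q = embed(query)
    scored = sorted(((cosine(q, embed(line)), line) for line in lines), reverse=True)
    return [(s, line) for s, line in scored if s >= threshold][:top_k]


if __name__ == "__main__":
    docs = [
        "We measure hallucinations in RAG pipelines.",
        "Transformers use attention.",
        "Models hallucinating citations is common.",
    ]
    print(grep(docs, "hallucinate"))  # exact match finds nothing
    for score, line in search(docs, "hallucinate"):
        print(f"{score:.2f}  {line}")
```

A query for "hallucinate" finds nothing with the exact-match filter when the documents say "hallucinations" or "hallucinating", while the ranked search still surfaces both lines. A real embedding model extends the same idea to synonyms and paraphrases.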

Chroma's MCP Server Enhances AI Coding Accuracy

Estimated read time: 3 min

Chroma introduces a Model Context Protocol (MCP) server that improves AI coding accuracy by giving agents access to the source code of their dependencies. The Package Search MCP Server provides three tools for semantic and regex-based code exploration, helping reduce hallucinations in AI-generated code.
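
The core idea is letting an agent read a dependency's real source instead of guessing, and it can be sketched locally. This Python example regex-searches the `.py` files of an installed package. `package_dir` and `grep_package` are hypothetical helpers, not the Package Search MCP Server's tools or API.

```python
import importlib.util
import re
from pathlib import Path


def package_dir(name: str) -> Path:
    """Locate the source directory of an importable package (not a single-file module)."""
    spec = importlib.util.find_spec(name)
    if spec is None or not spec.submodule_search_locations:
        raise ValueError(f"{name!r} is not an installed package")
    return Path(list(spec.submodule_search_locations)[0])


def grep_package(name: str, pattern: str, max_hits: int = 20) -> list[tuple[str, int, str]]:
    """Return (relative_path, line_number, line) for regex matches in a package's .py files."""
    root = package_dir(name)
    rx = re.compile(pattern)
    hits = []
    for path in sorted(root.rglob("*.py")):
        text = path.read_text(encoding="utf-8", errors="replace")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if rx.search(line):
                hits.append((str(path.relative_to(root)), lineno, line.strip()))
                if len(hits) >= max_hits:
                    return hits
    return hits


if __name__ == "__main__":
    # Check the real signature of json.dumps before writing code that calls it.
    for rel, lineno, line in grep_package("json", r"^def dumps\("):
        print(f"json/{rel}:{lineno}: {line}")
```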

Google Launches MCP Servers for Cloud AI Integration

Estimated read time: 8 min

Google has released open-source Model Context Protocol (MCP) servers that enable AI assistants to interact with Google Cloud services using natural language. The gcloud-mcp server allows developers to automate complex cloud workflows, execute gcloud commands through AI agents, and integrate with popular tools like Claude Desktop, Cursor, and Gemini CLI. Additional servers include observability-mcp for accessing logs, metrics, and traces.

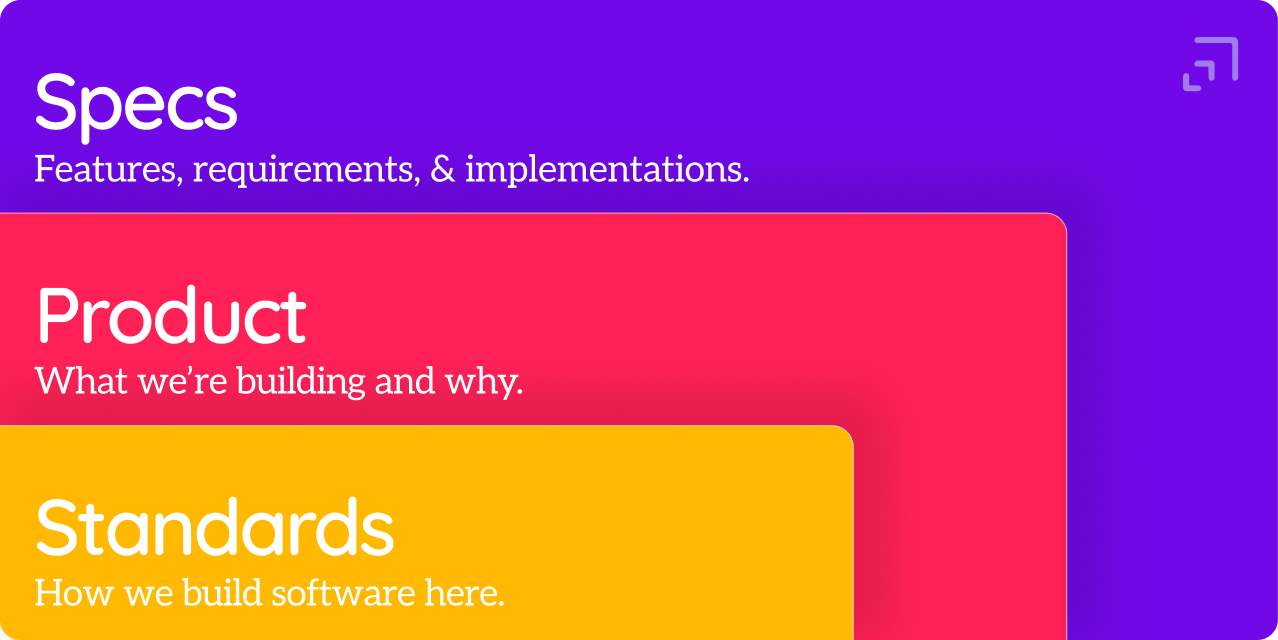

Agent OS Transforms AI Coding Agents Into Trusted Developers

Estimated read time: 15 min

Agent OS is a free, open-source system that changes how AI coding agents understand and work with your codebase. By providing three layers of context (standards, product details, and specs), it helps AI agents consistently write code that matches your style. The framework replaces ad hoc prompting with structured workflows, supports both Claude Code and Cursor, and remains fully customizable to your team's development practices.
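
The three-layer idea can be sketched as context assembly ordered from general to specific. The `build_context` function and the Markdown-style section headers below are hypothetical illustrations, not Agent OS's actual file layout or prompt format.

```python
from dataclasses import dataclass


@dataclass
class ContextLayer:
    name: str
    content: str


def build_context(standards: str, product: str, spec: str, task: str) -> str:
    """Join layers general-to-specific so narrower guidance follows broader rules.

    Empty layers are skipped; the task always comes last.
    """
    layers = [
        ContextLayer("Standards", standards),  # team-wide style and tech choices
        ContextLayer("Product", product),      # what the product is and who it serves
        ContextLayer("Spec", spec),            # the feature currently being built
    ]
    sections = [f"## {layer.name}\n{layer.content.strip()}" for layer in layers if layer.content.strip()]
    sections.append(f"## Task\n{task.strip()}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(build_context(
        standards="Use 4-space indents and type hints.",
        product="A CLI for managing invoices.",
        spec="Add a --due flag that filters overdue invoices.",
        task="Implement the --due flag.",
    ))
```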

Claude API Adds Web Fetch Tool with Security Safeguards

Estimated read time: 5 min

Anthropic introduces a web fetch tool for the Claude API that lets Claude retrieve content from URLs, with safeguards against prompt injection attacks. To prevent data exfiltration, Claude may only fetch URLs that the user provided or that appeared in previous search results, not arbitrary URLs it generates itself. Developers can tighten security further with domain allow-lists.
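
The fetch restriction amounts to a URL policy. This Python sketch approximates it client-side. It assumes you track which URLs the user supplied or earlier results returned. Only those exact URLs are fetchable, and only on allow-listed domains. `may_fetch` and `domain_allowed` are hypothetical names; the Claude API enforces its own rules server-side.

```python
from urllib.parse import urlsplit


def domain_allowed(host: str, allowed_domains: set[str]) -> bool:
    """True if host equals an allowed domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in allowed_domains)


def may_fetch(url: str, seen_urls: set[str], allowed_domains: set[str]) -> bool:
    """Allow a fetch only for previously seen http(s) URLs on allow-listed domains."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        return False
    if url not in seen_urls:  # URLs the model invents are rejected outright
        return False
    return domain_allowed(parts.hostname, allowed_domains)


if __name__ == "__main__":
    seen = {"https://docs.python.org/3/library/json.html"}
    allowed = {"python.org"}
    print(may_fetch("https://docs.python.org/3/library/json.html", seen, allowed))
    print(may_fetch("https://docs.python.org/3/library/json.html?q=secret", seen, allowed))
```

Requiring an exact match matters: a model that appends a query string such as `?q=secret` to an allowed URL produces a new, unseen URL, which is exactly the exfiltration path the restriction closes.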

NEWS & EDITORIALS

AGI Evangelism, Scaling Costs, and Developer Tradeoffs

Estimated read time: 6 min

TechCrunch’s interview with Karen Hao dissects how OpenAI’s AGI-first race reshaped the field, prioritizing speed and scale over algorithmic advances, safety, and efficiency. For developers, it spotlights rising infrastructure spend, strain on data and energy resources, and labor harms pushed onto others, while arguing for domain-specific systems (e.g., AlphaFold) that deliver measurable value with less compute. Practical takeaway: optimize for targeted capability, not maximal model size.

Why AI Startups Must Train Their Own Models

Estimated read time: 8 min

AI startups currently rely on lab APIs, but the barrier to training custom models is collapsing fast. With DeepSeek reportedly achieving o1-level reasoning for just $6M through distillation, companies like Cursor are already moving from API wrappers to proprietary models. The key insight: whoever controls their Token Factor Productivity and gathers user interaction data will dominate the AI application landscape.

Mistral AI Secures €1.7B for Enterprise AI Solutions

Estimated read time: 3 min

Mistral AI announced a €1.7B Series C funding round led by ASML, valuing the company at €11.7B. The investment will accelerate development of custom decentralized frontier AI solutions for complex engineering challenges. This partnership signals growing enterprise demand for tailored AI models beyond general-purpose LLMs, offering developers opportunities in specialized industrial AI applications.