Weekly review: Advanced Context Engineering for AI Coding Agents

PLUS - Chrome DevTools MCP Brings AI Debugging Power

Welcome back to AlteredCraft’s weekly review! Thank you for joining us as we explore the rapidly evolving AI development landscape. This edition dives into critical security vulnerabilities in MCP implementations, breakthrough techniques for handling 300k LOC codebases with AI agents, and how developers are running powerful coding assistants completely offline. Plus, discover why the DORA report says AI amplifies what you already have—not what you wish you had.

TUTORIALS & CASE STUDIES

How Claude Code Revolutionizes AI-First Software Development

Estimated read time: 15 min

Anthropic’s Claude Code generates $500M+ in annual revenue while transforming how engineering teams build software. With 90% of the tool’s code written by Claude Code itself, engineers can prototype 10+ variations daily and ship 5 releases per engineer. Built with TypeScript, React, and Ink, Claude Code demonstrates AI-first development practices including automated code reviews, a renaissance in test-driven development, and subagent architectures that accelerate iteration cycles.

Connect Your LLM to External Systems with MCP from Real Python

Estimated read time: 35 min

Learn how the Model Context Protocol (MCP) standardizes LLM interactions with external systems through a client-server architecture. This tutorial shows you how to build Python MCP servers with custom tools for e-commerce data queries, test them effectively, and integrate with AI agents like Cursor. You’ll create reusable prompts, resources, and tools that any MCP-compatible client can leverage, eliminating boilerplate code for common integrations.

RAG Reranking: Boost Answer Quality with Cross-Encoders

Estimated read time: 8 min

Learn how reranking with cross-encoders improves RAG pipeline accuracy by refining initial retrieval results. This tutorial implements two-stage retrieval with reranking in Python and shows how cross-encoders score each query-document pair jointly, which gives better relevance scoring than cosine similarity over independently computed embeddings and, in turn, more accurate LLM responses.
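To make the two-stage idea concrete, here is a minimal, standard-library-only sketch. It is not the tutorial's code: the bag-of-words `retrieve` step stands in for embedding search, and `toy_pair_scorer` is a hypothetical placeholder for a real cross-encoder model. The only point is the shape of the pipeline: a cheap first pass over every document, then a more expensive scorer that reads the query and each candidate together.

```python
import math
from collections import Counter
from typing import Callable, List


def tokenize(text: str) -> List[str]:
    return text.lower().split()


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int) -> List[str]:
    """Stage 1: score every document independently of the others (cheap)."""
    q = Counter(tokenize(query))
    ranked = sorted(
        docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def toy_pair_scorer(query: str, doc: str) -> float:
    """Hypothetical stand-in for a cross-encoder.

    It looks at query and document together and counts query bigrams that
    appear in the document, so word order matters. A real cross-encoder
    would be a trained model, not this heuristic.
    """
    q, d = tokenize(query), tokenize(doc)
    doc_bigrams = set(zip(d, d[1:]))
    return float(sum(1 for bigram in zip(q, q[1:]) if bigram in doc_bigrams))


def rerank(
    query: str,
    candidates: List[str],
    score_pair: Callable[[str, str], float],
    top_n: int,
) -> List[str]:
    """Stage 2: rescore only the shortlisted candidates with the pair scorer."""
    scored = sorted(candidates, key=lambda d: score_pair(query, d), reverse=True)
    return scored[:top_n]
```

In a real pipeline the pair scorer would be swapped for a trained cross-encoder, while the first stage keeps the candidate set small enough for that slower model to be affordable.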

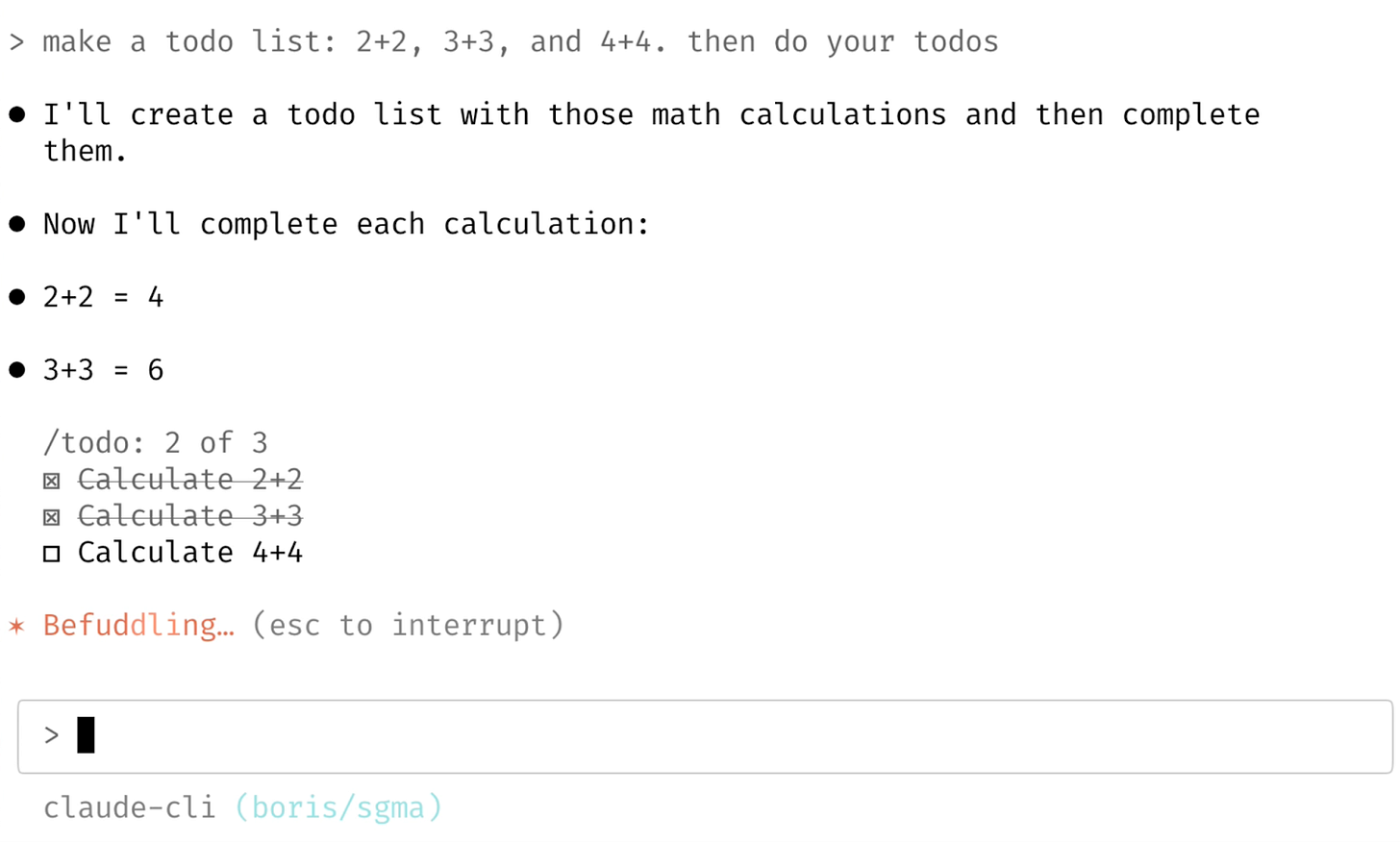

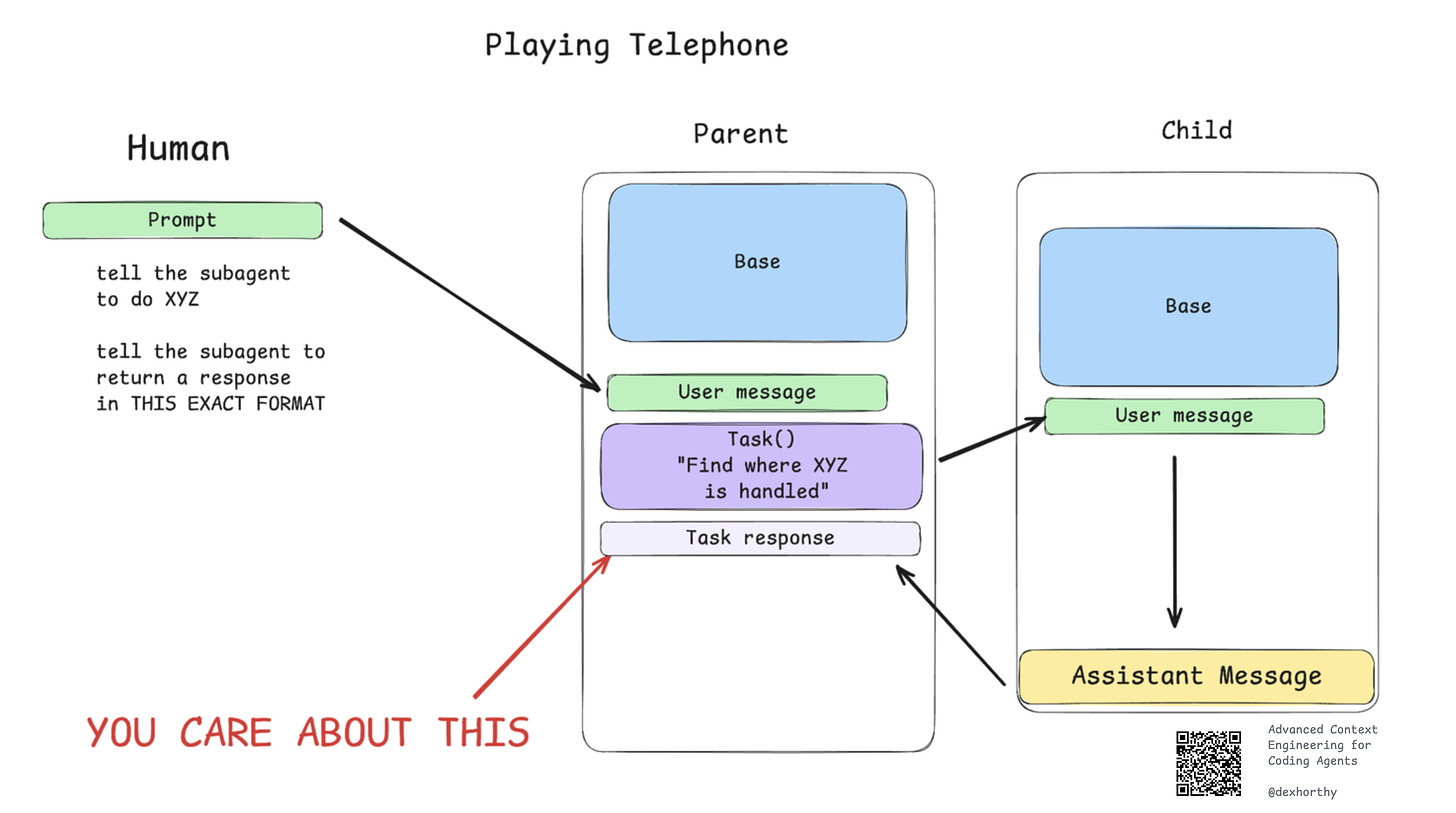

Advanced Context Engineering for AI Coding Agents

Estimated read time: 25 min

Discover how frequent intentional compaction techniques enable AI coding agents to handle 300k LOC production codebases effectively. This deep dive reveals practical workflows combining research, planning, and implementation phases that helped ship 35k lines of quality code in just 7 hours, challenging the notion that AI tools only work for greenfield projects.
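As a rough illustration of what "intentional compaction" can look like in code, the sketch below folds older conversation turns into a single summary once the estimated context usage crosses a threshold. Everything here is an assumption made for illustration: the 4-characters-per-token estimate, the `threshold` default, and the `summarize` callable (which in practice would be an LLM call) do not come from the article.

```python
from typing import Callable, List


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token.
    return max(1, len(text) // 4)


def compact_if_needed(
    history: List[str],
    budget: int,
    summarize: Callable[[List[str]], str],
    keep_recent: int = 2,
    threshold: float = 0.6,
) -> List[str]:
    """Replace older messages with one summary when usage exceeds the threshold.

    `summarize` is a hypothetical callable; a real agent would ask a model to
    write the summary (findings, plan, open questions) at this point.
    """
    used = sum(estimate_tokens(m) for m in history)
    if used <= budget * threshold or len(history) <= keep_recent:
        return history  # Still comfortably within budget; nothing to do.

    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return [summarize(older)] + recent
```

Running a check like this between the research, planning, and implementation phases keeps each phase starting from a short, deliberate summary rather than a full transcript.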

Critical MCP Authentication Flaws Enable Remote Code Execution

Estimated read time: 14 min

Security researchers discovered severe vulnerabilities in Model Context Protocol (MCP) implementations that allow attackers to execute arbitrary code through malicious OAuth authentication URLs. Popular AI coding tools including Claude Code, Gemini CLI, and Cloudflare’s use-mcp library were affected, potentially giving attackers complete control over developer machines. The flaws highlight critical security gaps in AI tool integrations that developers must address.
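One defensive habit this class of bug suggests is to treat any authorization URL supplied by a server as untrusted input. The sketch below is an assumption about a reasonable client-side check, not the fix shipped by any of the affected tools: it accepts only `http` and `https` URLs with a host and rejects whitespace and control characters before anything is handed to a browser. The function name and the allowed-scheme list are hypothetical.

```python
from urllib.parse import urlparse

# Assumption: only web URLs are legitimate targets for an OAuth redirect.
ALLOWED_SCHEMES = {"http", "https"}


def is_safe_authorization_url(url: str) -> bool:
    """Return True only for plain http(s) URLs with a host and no odd characters."""
    # Reject spaces and control characters up front; they have no place in a
    # well-formed URL and are a common ingredient in injection attempts.
    if any(ch == " " or ord(ch) < 0x20 or ch == "\x7f" for ch in url):
        return False
    try:
        parsed = urlparse(url)
    except ValueError:
        return False
    if parsed.scheme not in ALLOWED_SCHEMES:
        return False
    if not parsed.hostname:
        return False
    return True
```

A check like this is only one layer. The URL should also be passed to the browser as a single argument rather than interpolated into a shell command, since shell interpretation is a typical route from a crafted URL to code execution.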

TOOLS

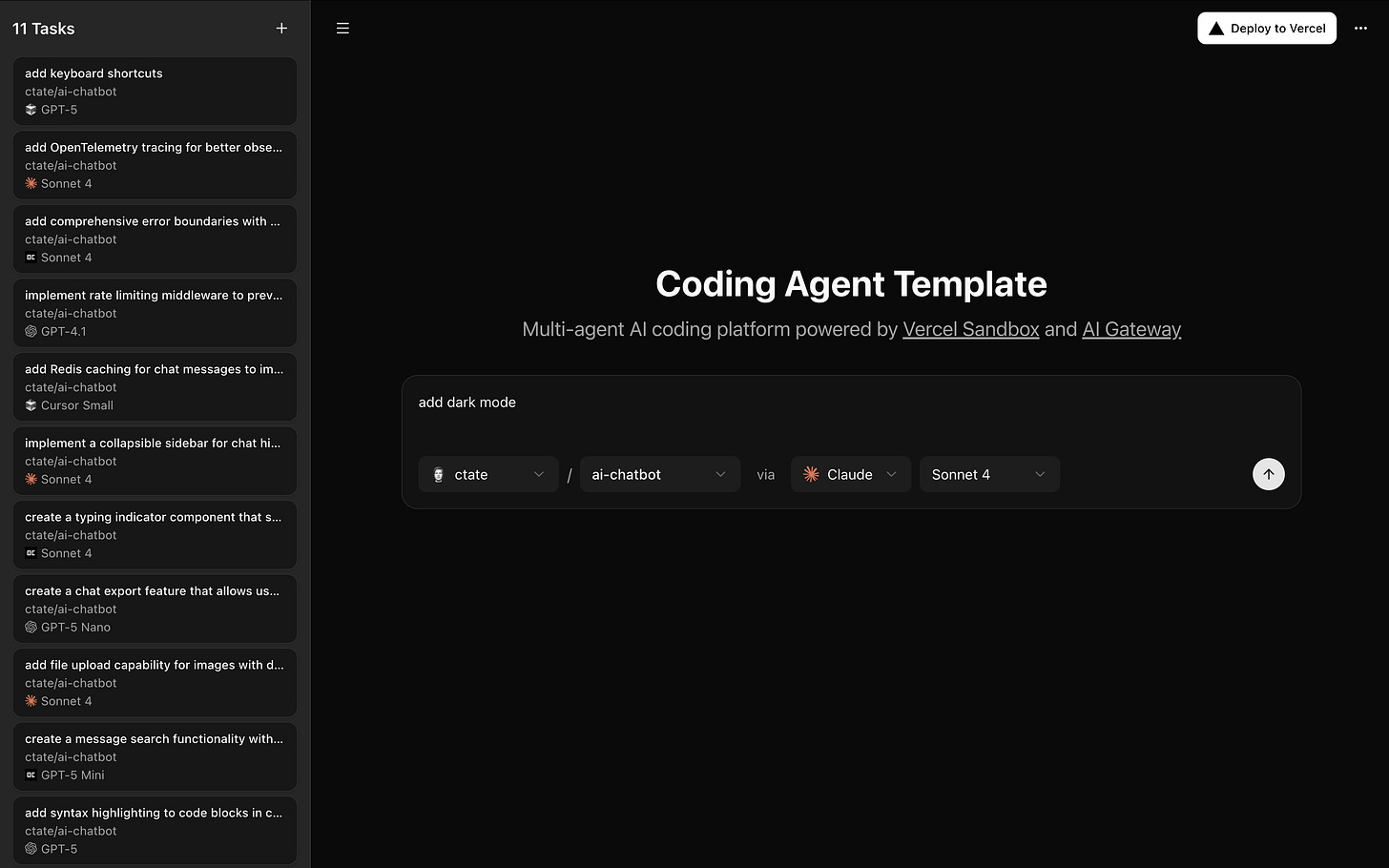

Vercel’s Multi-Agent AI Coding Platform Template

Estimated read time: 8 min

Vercel Labs released a powerful template for building AI-powered coding agents supporting Claude Code, OpenAI Codex CLI, and Cursor CLI. The platform executes coding tasks automatically in secure sandboxes, generates AI-powered branch names, and integrates seamlessly with Vercel AI Gateway for model routing and observability.

Ollama Launches Web Search API for AI Agents

Estimated read time: 8 min

Ollama introduces a powerful web search API that enables developers to augment their AI models with real-time web data, reducing hallucinations and improving accuracy. The API offers a generous free tier and integrates seamlessly with Ollama’s Python and JavaScript libraries, supporting tool-calling models like qwen3 and gpt-oss for building sophisticated search agents. Developers can now create AI applications that fetch current information, conduct research tasks, and integrate with popular tools like Cline and Codex through MCP servers.

Turso Brings Native Vector Search to SQLite Evolution

Estimated read time: 8 min

Turso revolutionizes SQLite with built-in vector search capabilities for AI applications and RAG workflows. This next-generation database engine offers async design, cloud-native architecture, and on-device processing perfect for AI agents. Developers building with LLMs can leverage native similarity search without extensions, making it ideal for local-first AI assistants and scalable multi-tenant applications.

LlamaIndex Launches High-Performance CLI Tools for Semantic Search

Estimated read time: 8 min

LlamaIndex introduces SemTools, a Rust-based CLI toolkit that combines document parsing via the LlamaParse API with local semantic search using multilingual embeddings. Features include workspace management for large collections, Unix-friendly design, concurrent processing, and integration examples with coding agents and MCP. It suits developers building RAG applications that need fast, reliable document processing and semantic search.

Chrome DevTools MCP Brings AI Debugging Power

Estimated read time: 8 min

Google launches the Chrome DevTools Model Context Protocol (MCP) server, enabling AI coding assistants to debug web pages directly in Chrome. Built on MCP, the open-source standard for connecting LLMs to external tools, the server addresses the “blindfold programming” problem by letting AI agents verify code changes, diagnose network errors, simulate user behavior, debug styling issues, and automate performance audits in real time.

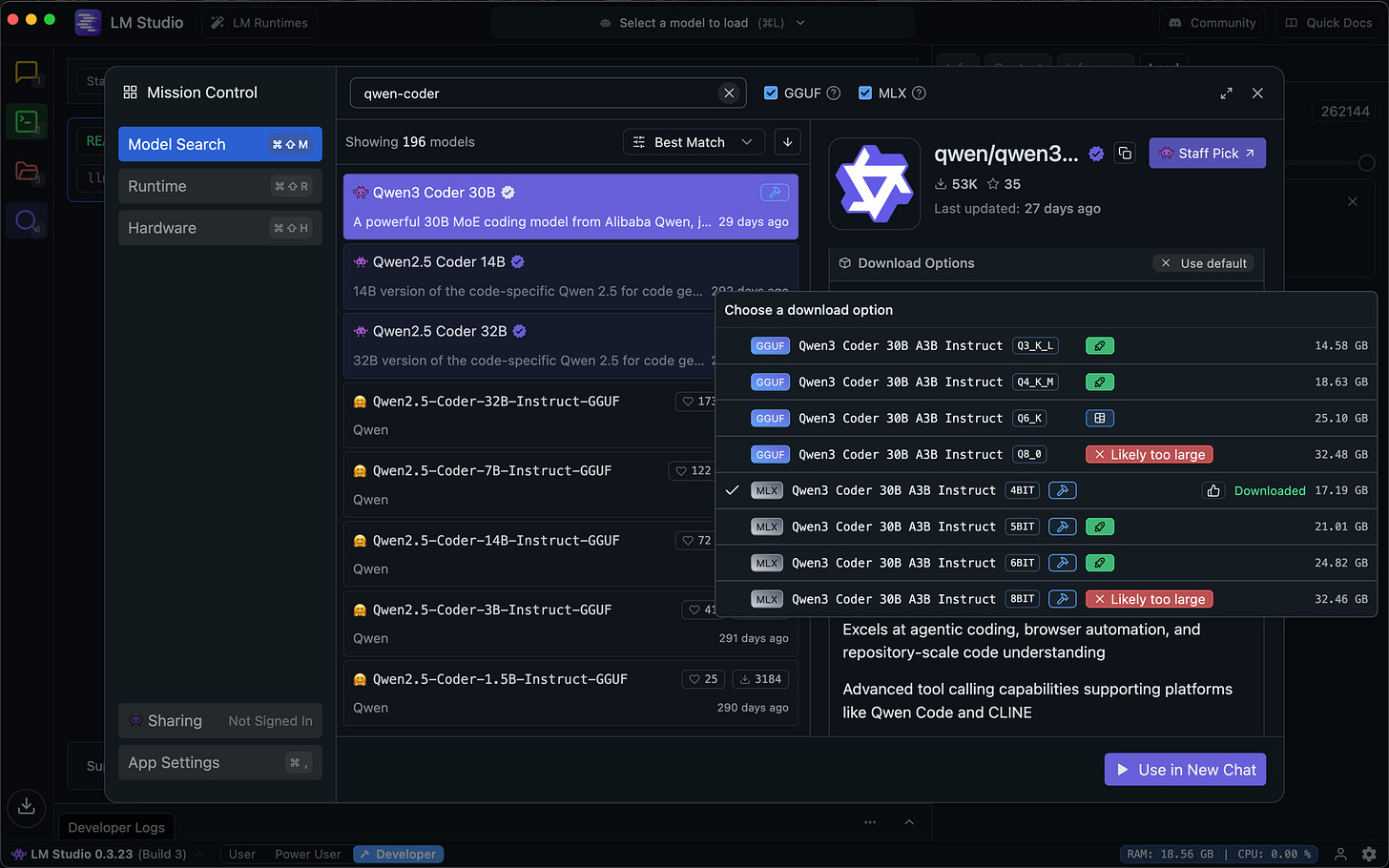

Run AI Coding Agents Completely Offline with Cline

Estimated read time: 8 min

Developers can now run AI coding agents entirely offline using Cline with LM Studio and Qwen3 Coder 30B. This local stack enables private, cost-free development with no API dependencies. The setup provides 256k context, repository-scale understanding, and real coding capabilities on consumer hardware, particularly optimized for Apple Silicon, making AI-assisted development possible without internet connectivity.

NEWS & EDITORIALS

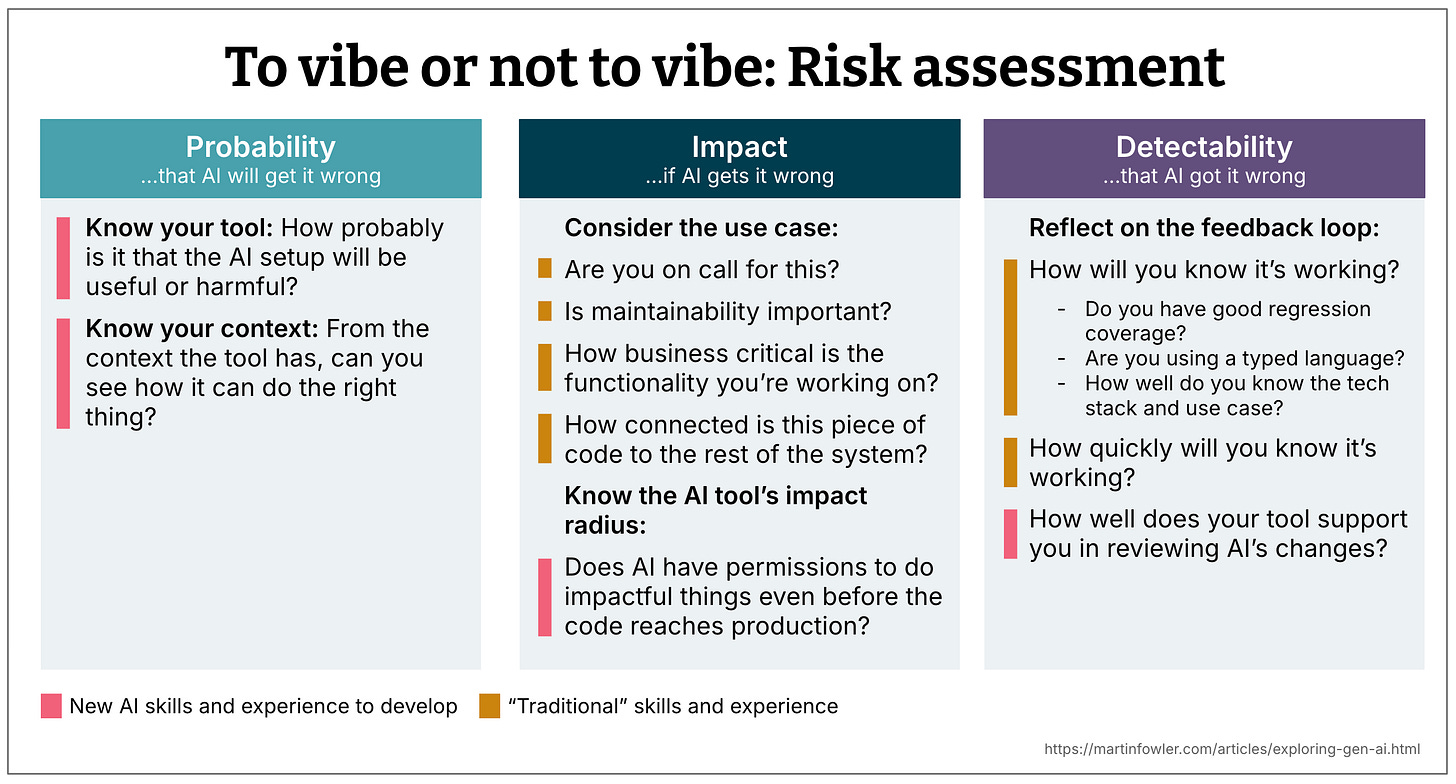

AI Code Review Risk Assessment Framework

Estimated read time: 8 min

Martin Fowler introduces a practical framework for deciding when to trust AI-generated code using three dimensions: probability of errors, impact of mistakes, and detectability of issues. The article explains how developers can make intuitive risk assessments by considering factors like tool quality, available context, and existing safety nets to balance AI’s speed with code reliability.

28 AI Agent Ideas for Developers to Build

Estimated read time: 8 min

A developer shares 28 practical AI tool concepts ranging from specialized code agents to semantic search engines. Notable ideas include agents for automatic theming, minified code decompilation, multi-day research tasks, and a marketplace for hyper-specific AI agents. These concepts showcase opportunities for developers to build focused, single-purpose AI tools rather than general-purpose solutions.

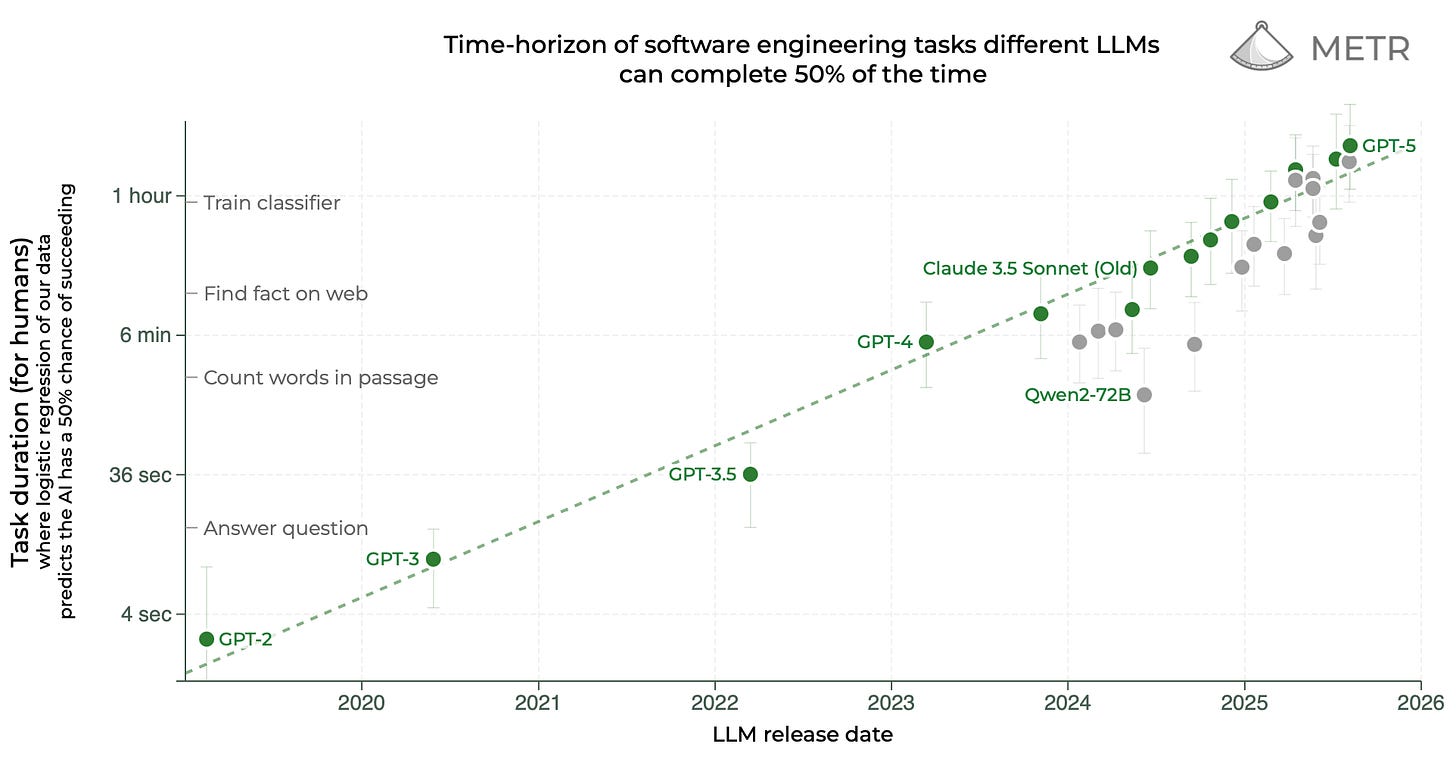

RL Training Inefficiency Threatens AI Scaling Progress

Estimated read time: 10 min

The shift from pre-training to reinforcement learning in frontier AI models like GPT-5 and o1 introduces a 1,000,000x information efficiency penalty per training hour. While RL excels at specific reasoning tasks, this inefficiency limits generality—a critical concern for developers building RAG systems and AI agents requiring broad capabilities across diverse domains.

DORA Report: AI Amplifies Your Organization’s Existing Capabilities

Estimated read time: 13 min

The 2025 DORA report reveals that AI doesn’t create excellence—it amplifies what already exists. For developers building RAG systems and AI agents, success requires seven foundational capabilities including clear AI policies, healthy data ecosystems, and user-centric focus. Without these organizational foundations, AI adoption can actually harm team performance.